Time of Flight is your gateway to the Metaverse

A key to the Metaverse is being able to accurately reconstruct our 3D world in order to virtualise or augment it. Electronic Specifier’s Paige West spoke with Tony Zarola, Senior Director, Time of Flight Technology Group, Analog Devices, about an emerging 3D sensing and imaging technology.

Many depth-based applications require high-resolution depth images. Current approaches rely heavily on super-resolution or up-sampling techniques (an algorithmic pipeline), which can be slow and require large amounts of training data tailored to the application at hand.

Time of Flight (ToF) is a 3D sensing and imaging technology that has found numerous applications in areas such as autonomous vehicles, virtual and augmented reality, feature identification, and object dimensioning.

“3D ToF [has] really started to open up the imagination … especially with the increased resolution and accuracy that can be offered,” notes Zarola.

ToF cameras acquire depth images by measuring the time it takes light to travel from a light source to objects in the scene and back to the pixel array.
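Because the light covers the camera-to-object distance twice, the underlying relation is simply d = c·t/2, where c is the speed of light and t the round-trip time. The minimal Python sketch below (the function names are hypothetical, chosen for illustration) converts in both directions and shows how short these times are at typical working distances.

```python
# Speed of light in vacuum, metres per second (exact SI value).
SPEED_OF_LIGHT = 299_792_458.0


def distance_from_round_trip(t_seconds: float) -> float:
    """Return the one-way distance for a measured round-trip time.

    Light travels to the object and back, so the distance to the
    object is half of the total path length c * t.
    """
    return SPEED_OF_LIGHT * t_seconds / 2.0


def round_trip_from_distance(d_metres: float) -> float:
    """Inverse relation: round-trip time for an object at distance d."""
    return 2.0 * d_metres / SPEED_OF_LIGHT


if __name__ == "__main__":
    for d in (0.10, 1.0, 5.0):
        t = round_trip_from_distance(d)
        print(f"{d:5.2f} m -> {t * 1e9:6.3f} ns")
```

An object 10 cm away returns light after roughly 0.67 ns, and one 5 m away after roughly 33 ns, which is why ToF sensors need very fine timing or phase resolution.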

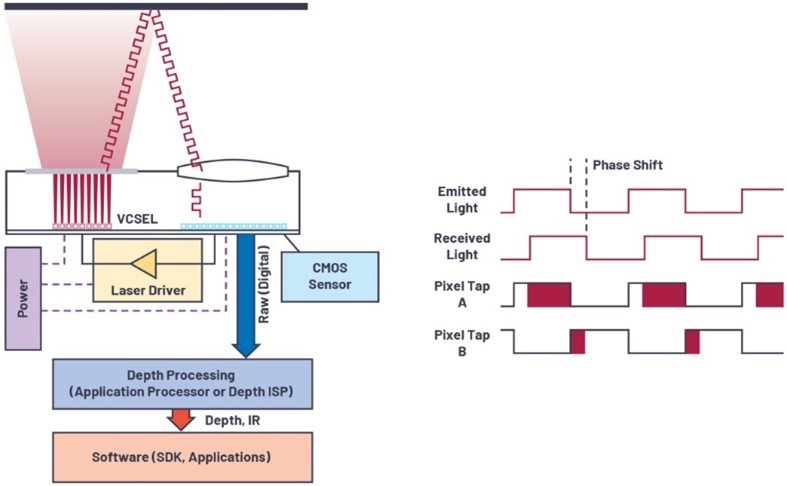

A ToF camera comprises several elements (see Figure 1), including:

- a light source – such as a vertical cavity surface emitting laser (VCSEL) or edge-emitting laser diode – that emits light in the near-infrared range. The most commonly used wavelengths are 850 nm and 940 nm. The light source is usually a diffuse source (flood illumination) that emits a beam of light with a certain divergence (known as the field of illumination, or FOI) to illuminate the scene in front of the camera.

- a laser driver that modulates the intensity of the light emitted by the light source.

- a sensor with a pixel array that collects the returning light from the scene and outputs values for each pixel.

- a lens that focuses the returning light on the sensor array.

- a band-pass filter co-located with the lens that filters out light outside of a narrow bandwidth around the light source wavelength.

- a processing algorithm that converts the raw frames output by the sensor into depth images or point clouds.

Figure 1. Overview of continuous wave time of flight sensor technology
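One common final stage of the processing algorithm is turning a depth image into a point cloud. The sketch below is illustrative only: it assumes an ideal pinhole lens with hypothetical intrinsics `fx`, `fy`, `cx`, `cy` (real modules also correct for lens distortion) and assumes each pixel stores the radial distance along its own viewing ray, as ToF naturally measures. If a pipeline stores z-depth instead, the scaling step would differ.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]


def depth_to_point_cloud(
    radial: List[List[float]],
    fx: float, fy: float, cx: float, cy: float,
) -> List[Point]:
    """Back-project a radial-distance image into 3D camera coordinates.

    Assumptions (hypothetical, for illustration): ideal pinhole lens with
    focal lengths fx, fy and principal point (cx, cy) in pixels; each
    pixel holds the distance along its viewing ray; non-positive values
    mark invalid pixels and are skipped.
    """
    points: List[Point] = []
    for v, row in enumerate(radial):
        for u, r in enumerate(row):
            if r <= 0.0:
                continue  # no valid measurement for this pixel
            # Direction of the ray through pixel (u, v), scaled so z = 1.
            x = (u - cx) / fx
            y = (v - cy) / fy
            norm = math.sqrt(x * x + y * y + 1.0)
            # Scale the unit-length ray by the measured radial distance.
            points.append((r * x / norm, r * y / norm, r / norm))
    return points
```

For example, `depth_to_point_cloud([[1.0, 0.0, 3.0]], 1.0, 1.0, 0.0, 0.0)` keeps two points: one straight ahead at 1 m and one off-axis point whose distance from the camera is still exactly 3 m.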

Many applications aim to create a 3D representation of the world, or to use 3D data to understand their surroundings. 3D avatar generation is one such application, and one of particular importance to the Metaverse.

ToF allows the entire image to be recorded at once. There is no need for line-by-line scanning or for relative motion between the sensor and the observed objects. ToF is often classified as LIDAR (light detection and ranging), but it is a flash LIDAR approach rather than scanning LIDAR.

There are two main ways to measure the time of flight of light with ToF: direct measurement (dToF) of the photon travel time from a pulsed laser source to the sensor array, and indirect measurement (iToF), which derives depth by measuring the phase difference between the modulated continuous wave (CW) signal emitted by the source and the received (reflected) signal.
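For iToF, a phase shift φ at modulation frequency f corresponds to a distance d = c·φ/(4πf). A widely used way to recover φ is to sample the returning signal at four phase offsets (0°, 90°, 180° and 270°). The sketch below assumes an idealised sample model, A_k = B + a·cos(φ − k·π/2), where B is a constant offset such as ambient light; real sensors use their own sign conventions and calibration, so this is a hypothetical illustration rather than any vendor's pipeline.

```python
import math
from typing import Tuple

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def itof_distance(a0: float, a1: float, a2: float, a3: float,
                  f_mod_hz: float) -> Tuple[float, float]:
    """Estimate distance and signal amplitude from four phase samples.

    Assumed model (illustrative): A_k = B + a*cos(phi - k*pi/2), k = 0..3.
    The constant offset B cancels in the differences below.
    """
    i = a0 - a2  # in-phase component, equals 2a*cos(phi)
    q = a1 - a3  # quadrature component, equals 2a*sin(phi)
    phi = math.atan2(q, i) % (2.0 * math.pi)  # wrap phase to [0, 2*pi)
    amplitude = math.hypot(i, q) / 2.0
    # A full 2*pi of phase equals a round trip of one modulation
    # wavelength, i.e. a one-way distance of c / (2 f).
    distance = SPEED_OF_LIGHT * phi / (4.0 * math.pi * f_mod_hz)
    return distance, amplitude
```

Because B drops out of both differences, a uniform ambient offset does not shift the estimated phase in this model; the recovered amplitude can also serve as a rough indication of how strong the returned signal is.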

Both modes of operation have advantages and disadvantages. Pulsed mode is more robust to ambient light, and therefore more conducive to outdoor applications, because it typically relies on high-energy light pulses emitted in very short bursts during a short integration window. CW mode, by contrast, may be easier to implement because the light pulses do not have to be extremely short, with fast rising and falling edges. However, as precision requirements become more stringent, higher-frequency modulation signals become necessary, and these may be difficult to implement.
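The reason higher modulation frequencies improve CW precision follows from the iToF relation d = c·φ/(4πf): a given phase error produces a depth error inversely proportional to f. The same relation also means the phase wraps past 2π at d = c/(2f), so higher frequencies shorten the unambiguous range. A minimal sketch of this trade-off, assuming a fixed, hypothetical phase noise of 0.01 rad:

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def unambiguous_range(f_mod_hz: float) -> float:
    """Largest distance measurable before the phase wraps past 2*pi."""
    return SPEED_OF_LIGHT / (2.0 * f_mod_hz)


def depth_error(phase_error_rad: float, f_mod_hz: float) -> float:
    """Depth error caused by a given phase error at frequency f."""
    return SPEED_OF_LIGHT * phase_error_rad / (4.0 * math.pi * f_mod_hz)


if __name__ == "__main__":
    # Hypothetical phase noise, held constant across frequencies.
    for f in (20e6, 50e6, 100e6, 200e6):
        print(f"{f / 1e6:5.0f} MHz: range {unambiguous_range(f):6.3f} m, "
              f"error {depth_error(0.01, f) * 1e3:5.2f} mm")
```

Under these assumptions, doubling the frequency halves the depth error but also halves the unambiguous range; at 100 MHz the phase wraps at about 1.5 m. Practical CW cameras commonly combine several modulation frequencies to recover a longer range.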

Today’s small pixel sizes allow high sensor resolutions, which enable not just distance measurement but also object and gesture recognition. Typical measurement distances range from a few cm (<10 cm) to several meters (<5 m).

Unfortunately, not all objects can be detected equally well. An object’s surface condition, reflectance and speed all affect the measurement result. The result can also be distorted by environmental factors such as fog or strong sunlight; ambient light suppression helps with the latter.

Analog Devices offer complete 3D ToF systems to support the rapid implementation of 3D ToF solutions.

Earlier this year, the company announced the industry’s first high-resolution, industrial quality, indirect Time of Flight (iToF) module for 3D depth sensing and vision systems.

Enabling cameras and sensors to perceive 3D space at one-megapixel resolution, the new ADTF3175 module offers highly accurate iToF sensing, to within ±3 mm over the full depth range (up to 4 m), for machine vision applications ranging from industrial automation to logistics, healthcare, and augmented reality.
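To put the ±3 mm figure in perspective, a short worked calculation using only the numbers quoted above and the relation d = c·t/2. The timing figure is a scale comparison only: an iToF module measures phase rather than timing individual pulses.

$$\frac{\Delta d}{d_{\max}} = \frac{3\ \text{mm}}{4000\ \text{mm}} = 0.075\%$$

$$\Delta t = \frac{2\,\Delta d}{c} = \frac{2 \times 0.003\ \text{m}}{2.998 \times 10^{8}\ \text{m/s}} \approx 2.0 \times 10^{-11}\ \text{s} = 20\ \text{ps}$$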

“Machine vision needs to make the leap to perceiving smaller, more subtle objects faster in industrial environments,” said Zarola. “The ADTF3175’s unmatched resolution and accuracy allows vision and sensing systems – including industrial robots – to take on more precision-oriented tasks by enabling them to better understand the space they’re operating in and ultimately improve productivity. Bringing this to market helps bridge a major gap and accelerates deployment of the next generation of automation solutions and critical logistics systems.”

Analog Devices also announced a collaboration with Microsoft to mass produce 3D imaging products and solutions. Leveraging Microsoft’s 3D ToF sensor technology, customers can easily create high-performance 3D applications that bring higher degrees of depth accuracy and work regardless of the environmental conditions in the scene. ADI’s technical expertise will build upon Microsoft Azure Kinect technology to deliver ToF solutions to a much broader audience in areas such as Industry 4.0, automotive, gaming, augmented reality, computational photography, and videography.

Continuous wave time of flight cameras are a powerful solution offering high depth precision for applications requiring high quality 3D information. The Metaverse market is still undefined, and its use cases are vast and varied and will continue to grow. 3D sensing and imaging technology will be needed to deliver a truly immersive Metaverse experience.

“There’s a lot of room for growth,” said Zarola. “With the Metaverse usage, it’s really in the ability to engage with 3D environments without feeling like you’re wearing a headset.

“Improving the quality of the user experience is going to be part of the progression to making the Metaverse more ubiquitous.”