The complexity of AI training algorithms is increasing rapidly, with the compute required for new algorithms doubling approximately every four months. To keep up with this growth, AI application hardware needs to be scalable, supporting longevity as new algorithms emerge, while maintaining low operational costs. It also needs to handle increasingly complex models near the end-user.
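
To give a sense of what a four-month doubling period implies, the short sketch below compounds it over a few time horizons. It is illustrative arithmetic only: the function name `compute_growth_factor` is hypothetical, and the calculation assumes the doubling rate quoted above stays constant, which the article does not claim.

```python
def compute_growth_factor(months: float, doubling_period_months: float = 4.0) -> float:
    """Return the multiple by which required compute grows over `months`,
    assuming a constant doubling period (an assumption, not a forecast)."""
    return 2.0 ** (months / doubling_period_months)


# Three doublings fit into one year when the doubling period is four months.
for years in (1, 2, 5):
    factor = compute_growth_factor(12 * years)
    print(f"{years} year(s): ~{factor:,.0f}x")
```

Under that assumption, required compute grows roughly eightfold per year, which is why hardware scalability and longevity matter so much.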

IDTechEx, drawing from its ‘AI Chips: 2023–2033’ and ‘AI Chips for Edge Applications 2024–2034: Artificial Intelligence at the Edge’ reports, forecasts that AI growth, in both training and inference in the Cloud and at the Edge, will continue unabated over the next decade. This growth is driven by the increasing automation and interconnectivity of our world and its devices.

Understanding AI chips

Designing hardware to accelerate specific computations, offloading complex operations from the main processor, is a long-standing practice in computing. Early computers paired CPUs with Floating-Point Units (FPUs) for efficient mathematical operations. As technology advanced, new hardware emerged to address evolving workloads, such as GPUs for computer graphics.

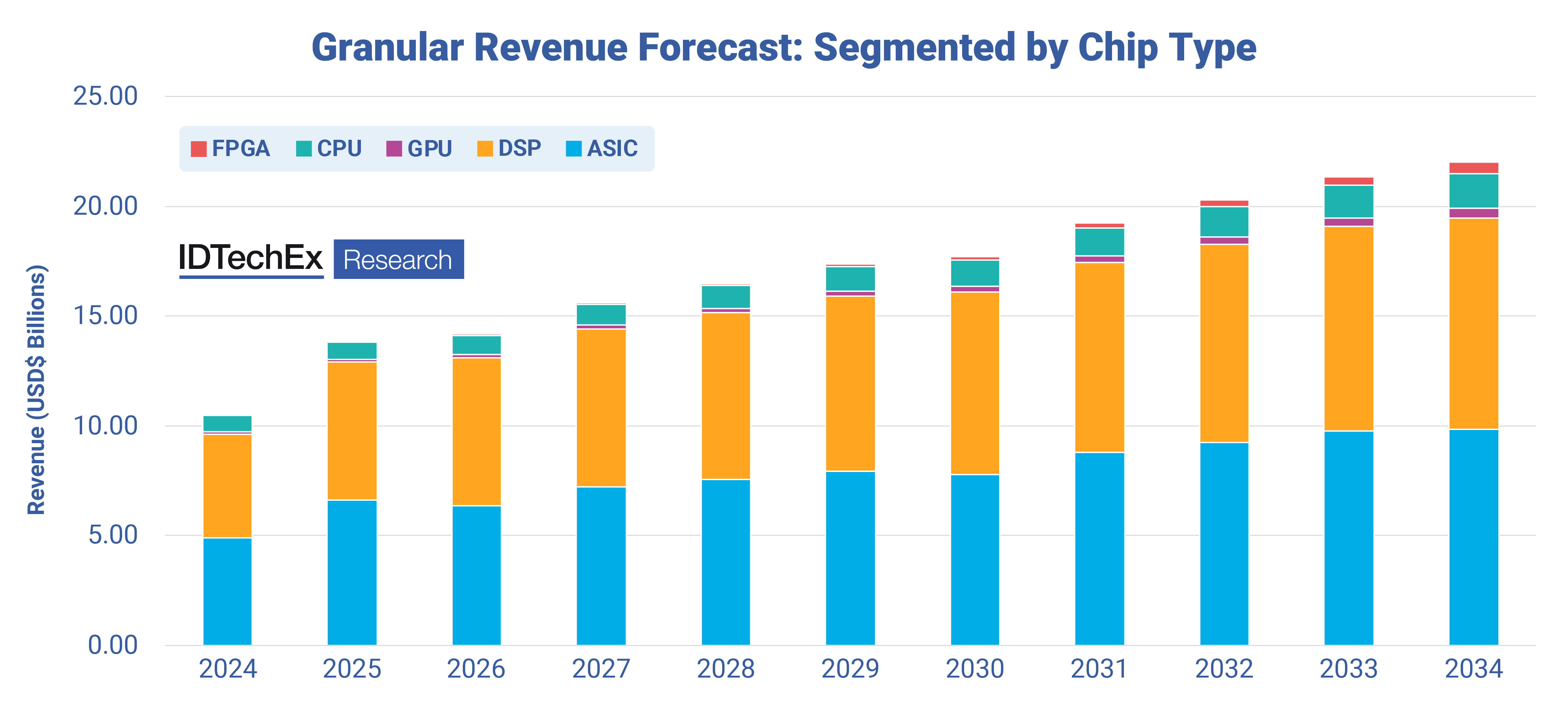

Revenue generated by different chip architectures for Edge devices, 2024 to 2034. Source: ‘AI Chips for Edge Applications 2024–2034: Artificial Intelligence at the Edge’, IDTechEx.

Similarly, the rise of machine learning necessitated a new type of accelerator to handle machine learning workloads efficiently. Machine learning is the process by which programs use data to make predictions with a model and then optimise that model to better fit the data. It therefore involves two steps: training and inference.

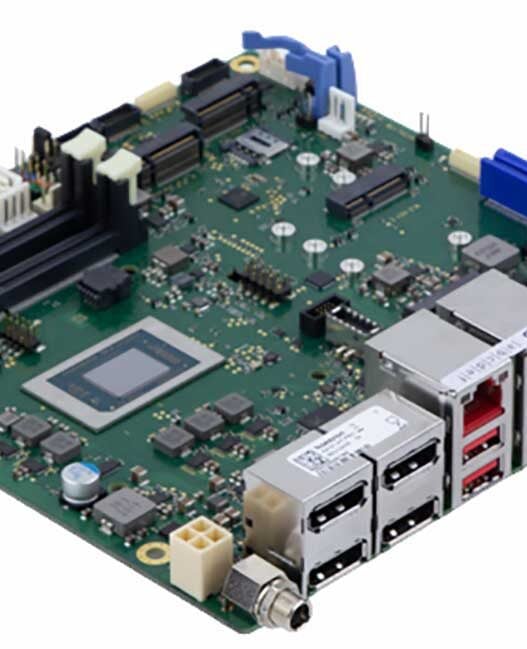

Training involves feeding data into a model and adjusting its weights, while inference involves running the trained model on new data. Training is the more computationally intensive step and typically takes place in Cloud computing environments, on chips capable of parallel processing. Inference, however, can take place in both Cloud and Edge environments. The IDTechEx reports cited above detail how CPU, GPU, FPGA, and ASIC architectures handle machine learning workloads.
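
The distinction between the two steps can be sketched with a toy example. The code below is a minimal illustration in plain Python, not a representation of any workload described in the reports: the model is a single-weight linear fit, and the function names `train` and `infer`, the learning rate, and the sample data are all assumptions made for the sketch.

```python
def train(samples, lr=0.05, epochs=1000):
    """Training: repeatedly adjust the weight and bias to reduce prediction error."""
    w, b = 0.0, 0.0
    n = len(samples)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in samples) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in samples) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def infer(model, x):
    """Inference: apply the frozen weights to new data; nothing is updated."""
    w, b = model
    return w * x + b


# Hypothetical training data generated from y = 2x + 1.
data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
model = train(data)

# Prediction for an input the model has not seen; it should be close to 21.
print(infer(model, 10.0))
```

Training loops over the whole dataset many times, while inference is a single cheap evaluation per input. That asymmetry is one reason training gravitates to parallel hardware in the Cloud while inference can run on smaller chips at the Edge.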

In the Cloud, GPUs dominate, particularly for AI training, where Nvidia has a strong presence. For Edge AI, ASICs are preferred because they are designed around specific problems. DSPs also play a significant role in AI coprocessing at the Edge, as seen with Qualcomm’s Hexagon Tensor Processor.

AI’s influence on semiconductor manufacturing

AI training chips are usually manufactured at advanced semiconductor nodes. Intel, Samsung, and TSMC lead the production of 5nm node chips, and TSMC is progressing with orders for 3nm chips. Global semiconductor production is concentrated in the APAC region, particularly Taiwan.

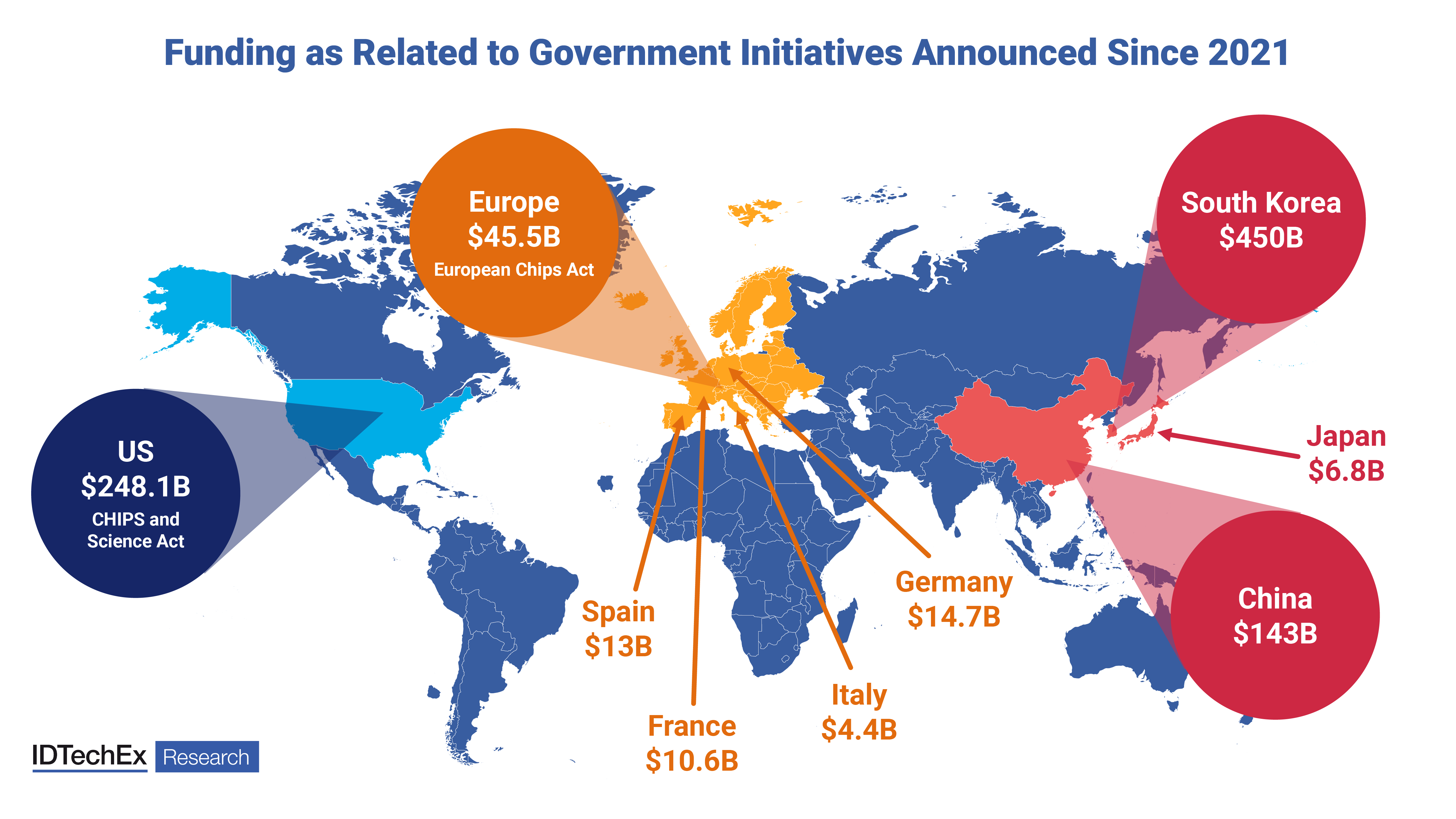

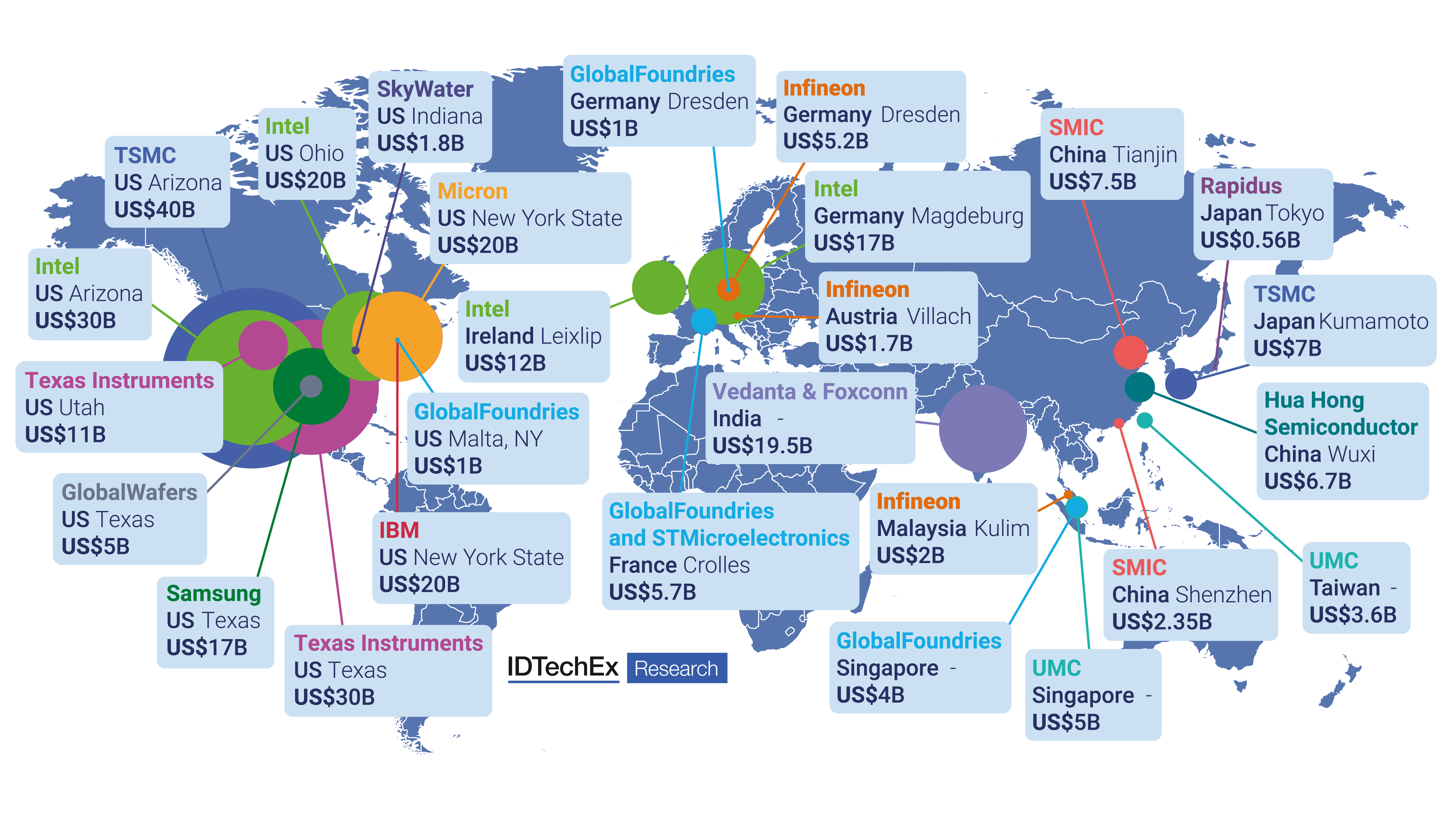

Funding as related to government initiatives announced since 2021. Source: IDTechEx

The 2020 global chip shortage, which resulted from a combination of factors, highlighted the risks of this concentrated supply chain. Since then, major stakeholders such as the US, EU, South Korea, Japan, and China have sought to reduce their exposure to a manufacturing deficit, as evidenced by government funding and incentives for private investment in semiconductor production. AI, as an advanced technology, is central to these initiatives, which in turn drive the development of AI hardware and software.

Shown here are the proposed and confirmed investments into semiconductor facilities by manufacturers since 2021. Where currencies have been listed in anything but US$, these have been converted to US$ as of May 2023. Source: ‘AI Chips: 2023–2033’, IDTechEx

Forecast for AI chip growth

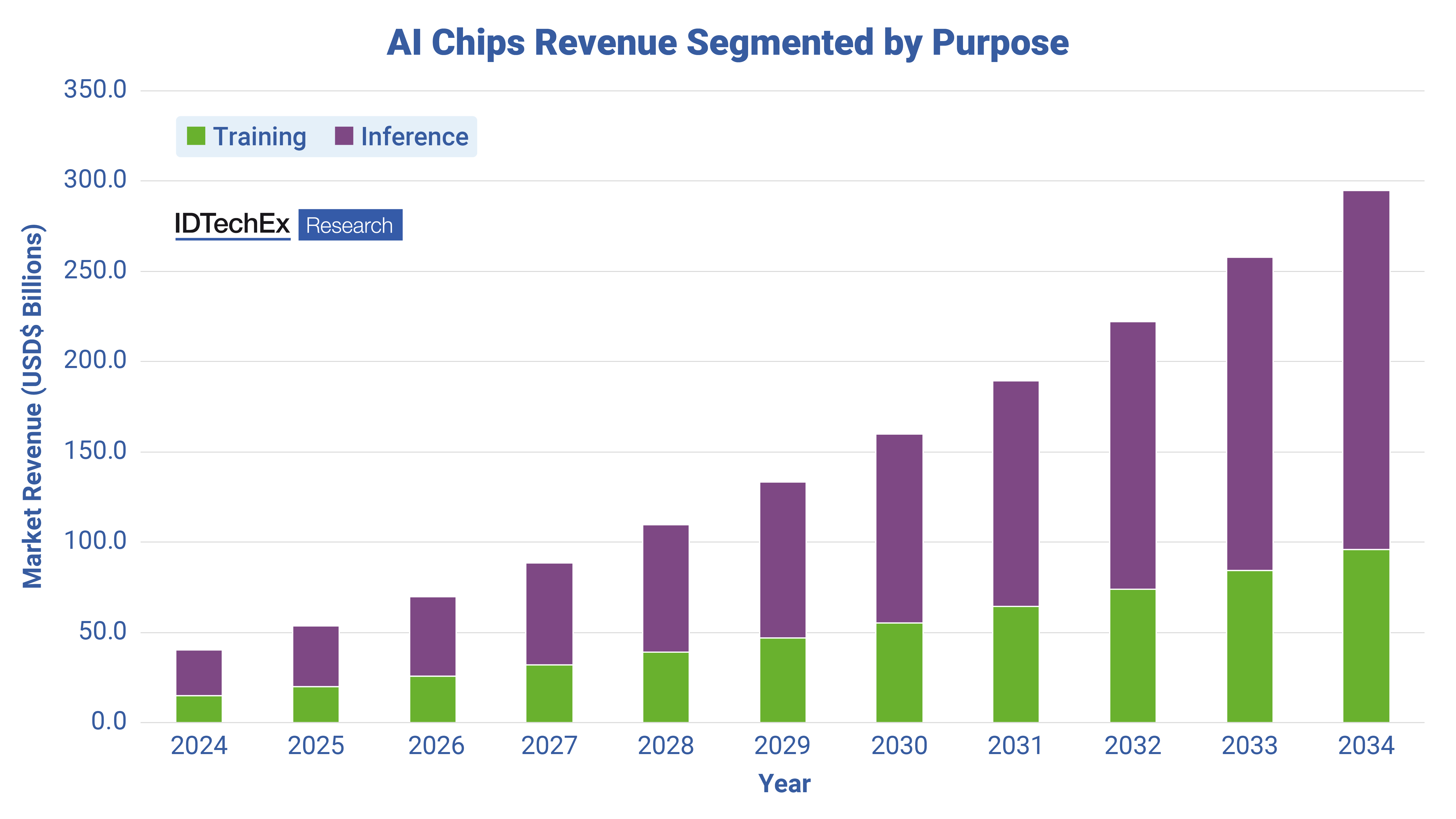

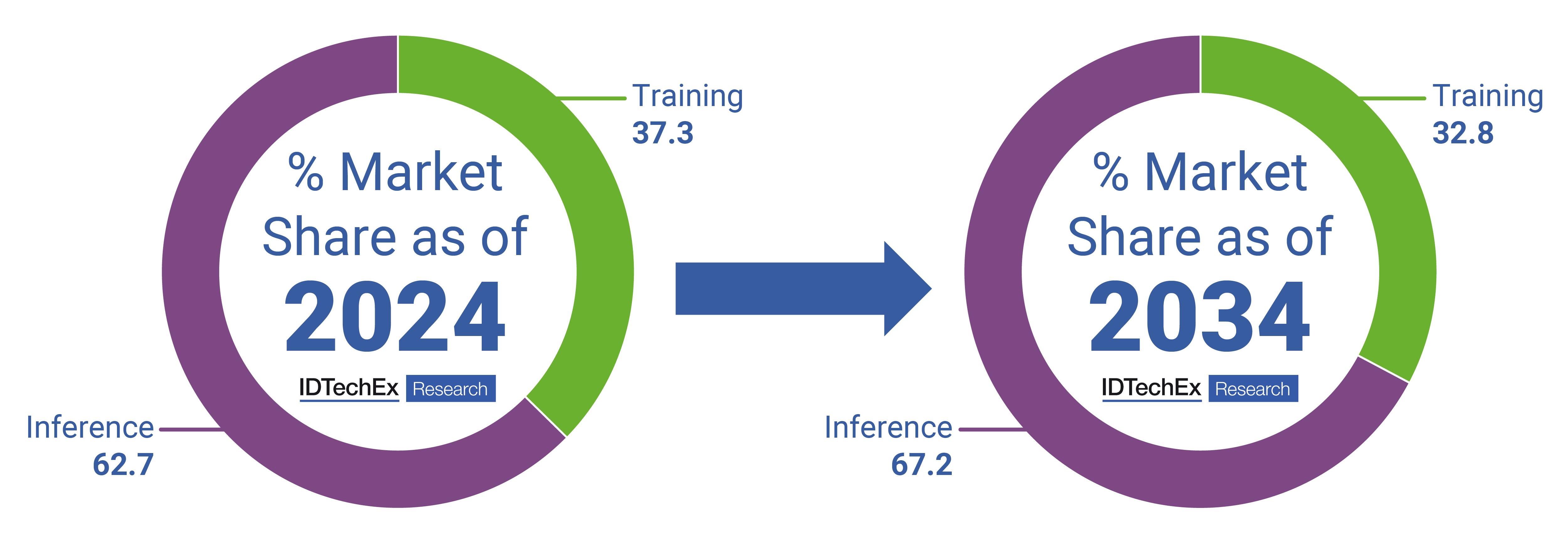

The AI chip market is expected to reach nearly US$300 billion by 2034, growing at a compound annual growth rate (CAGR) of 22% from 2024 to 2034. This figure covers chips for machine learning workloads at the network Edge, at the telecom Edge, and in Cloud data centres. As of 2024, inference chips account for 63% of this revenue, and their share is projected to increase by 2034.
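
As a rough check on what these headline figures imply, the sketch below works through the compound-growth arithmetic. It is not taken from the reports: the helper names `cagr` and `project` are hypothetical, the 2034 value is rounded to US$300 billion for convenience, and the 2024 base it back-calculates is an implied figure, not a published one.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding `rate` annually for `years` years."""
    return start_value * (1.0 + rate) ** years


RATE = 0.22          # 22% CAGR, as quoted in the article
YEARS = 10           # 2024 to 2034
END_2034_BN = 300.0  # assumption: "nearly US$300 billion" rounded to 300

# How many times larger the market becomes after ten years at 22% per year.
growth_multiple = project(1.0, RATE, YEARS)

# Implied 2024 base in US$ billion (back-calculated, not a reported figure).
implied_2024_bn = END_2034_BN / growth_multiple

print(f"Growth multiple over {YEARS} years: {growth_multiple:.2f}x")
print(f"Implied 2024 market size: ~US${implied_2024_bn:.0f} billion")
print(f"Round-trip CAGR check: {cagr(implied_2024_bn, END_2034_BN, YEARS):.1%}")
```

A 22% annual rate compounds to a little over a sevenfold increase across the decade, so the rounded 2034 figure corresponds to a 2024 base of roughly one seventh of that size.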

AI chips revenue segmented by purpose. Source: IDTechEx

Significant growth is anticipated at the Edge and telecom Edge, as AI capabilities move closer to end-users. IT & Telecoms is expected to lead AI chip usage, followed by Banking, Financial Services & Insurance, and Consumer Electronics. The Consumer Electronics sector is predicted to generate substantial revenue at the Edge as AI expands into home consumer products. Further details on industry verticals and market segmentation for AI chips are available in the ‘AI Chips: 2023–2033’ and ‘AI Chips for Edge Applications 2024–2034: Artificial Intelligence at the Edge’ reports.

Revenue generated by AI chips is set to grow at a CAGR of 22% over the next ten years, up to 2034. Of the revenue generated at this time, AI chips for inference purposes will dominate over those used for AI training, as AI migrates more thoroughly to deployment at the edge of the network. Source: IDTechEx

These reports provide comprehensive forecasts and analyses, including revenue forecasts, supply chain costs, comparative costs between leading and trailing edge node chips, and customisable templates for customer use.