This approach, which is often referred to as neuromorphic computing, aims to mimic the brain’s efficient and parallel information processing capabilities. A notable milestone in this journey is IBM’s NorthPole chip, which sets the stage for future explorations and developments in this field.

According to A. Mehonic and A.J. Kenyon from UCL’s Department of Electronic and Electrical Engineering, the future of brain-inspired computing lies in systems that can process unstructured data, perform image recognition, classify noisy datasets, and underpin novel learning and inference systems. These neuromorphic systems will likely offer significant energy savings compared to conventional computing platforms. They are not aimed at replacing digital computation; instead, they will complement precision calculations and could integrate with quantum computing in the future.

“One of the reasons neuromorphic computing is starting to become interesting at the moment is because our current computer systems are hitting a brick wall, in terms of the amount of data they can process for a reasonable amount of energy … large language models cost millions of dollars to train and use huge amounts of CO2, and they’re not very efficient at processing the sort of information and data that the brain processes,” Tony Kenyon, Vice Dean (Strategy), UCL, tells Electronic Specifier.

The evolution of AI hardware

The cornerstone of the AI hardware revolution lies in energy efficiency and miniaturisation.

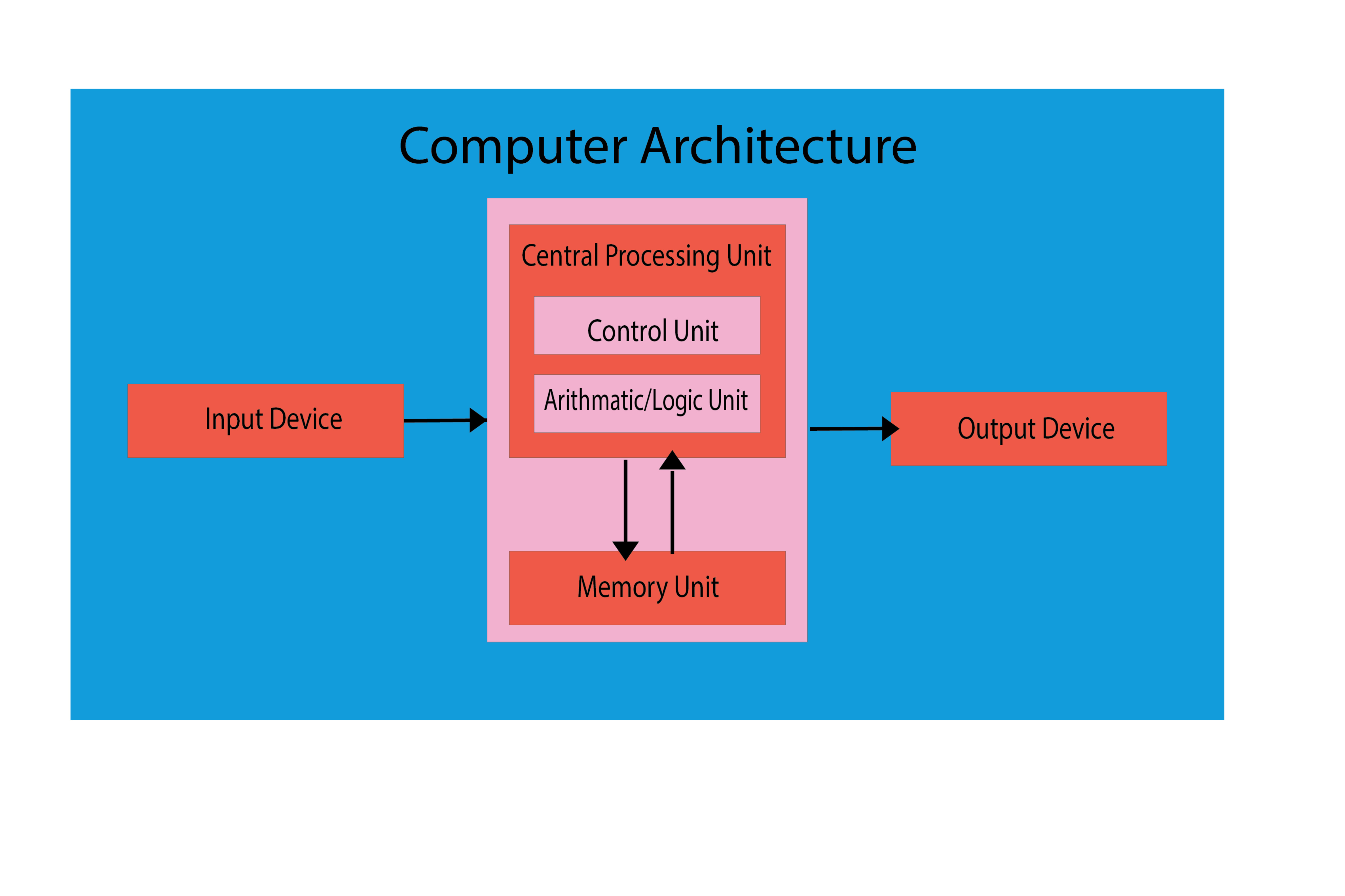

“The brain processes very complex signals that are very noisy and it does it very power efficiently – a million times more than our best computers, and there are really good reasons why that is the case. One of the fundamental reasons why computers are very inefficient is that they spend most of their time moving data backwards and forwards between memory and processing unit … It takes much more energy to move data than it does to process it. The brain doesn’t do that. The brain has structures that both process and store information.”

This inefficiency is typically referred to as the von Neumann bottleneck: the limited bandwidth between a computer’s central processing unit and its memory restricts data transfer and processing speed. A bottleneck is a point in a system where the flow or processing of data is limited, causing a slowdown or constraint in performance.
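To make the cost of data movement concrete, here is a minimal back-of-the-envelope sketch. It is not drawn from Kenyon’s work: the function names are hypothetical, and the per-operation and per-byte energy figures are placeholder assumptions chosen only to illustrate the shape of the argument, not measured values for any real chip.

```python
def total_energy_pj(n_ops, bytes_moved, e_op_pj, e_byte_pj):
    """Total energy in picojoules: compute cost plus data-movement cost."""
    return n_ops * e_op_pj + bytes_moved * e_byte_pj


def movement_share(n_ops, bytes_moved, e_op_pj, e_byte_pj):
    """Fraction of the total energy that is spent moving data."""
    moved = bytes_moved * e_byte_pj
    return moved / total_energy_pj(n_ops, bytes_moved, e_op_pj, e_byte_pj)


# ASSUMPTION: placeholder energies, for illustration only.
E_OP_PJ = 1.0      # hypothetical energy per arithmetic operation
E_BYTE_PJ = 100.0  # hypothetical energy per byte fetched from off-chip memory

# Conventional design: every operation fetches one byte from memory.
conventional = movement_share(1000, 1000, E_OP_PJ, E_BYTE_PJ)

# Idealised in-memory design: data stays where it is processed.
in_memory = movement_share(1000, 0, E_OP_PJ, E_BYTE_PJ)

print(f"movement share, conventional: {conventional:.2%}")
print(f"movement share, in-memory:    {in_memory:.2%}")
```

Under these assumed figures, almost all of the energy in the conventional case goes on moving data rather than processing it, which is the imbalance that structures combining storage and processing are meant to remove.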

Kenyon notes: “Our current computer systems are hitting a bit of a brick wall,” underscoring the need for neuromorphic systems that can manage vast amounts of data with minimal energy consumption. Kenyon further elucidates the brain’s superior capability to handle “imprecise, fuzzy data,” highlighting the potential of neuromorphic computing to change AI hardware by significantly reducing power requirements and enhancing processing efficiency.

The pursuit of future brain-inspired AI hardware is geared towards transcending the boundaries of current energy efficiency. Progress in transistor technology is leading us beyond the conventional 12-nm nodes, venturing into the realms of quantum materials and substances like graphene or diamond. These advances improve efficiency and are also reshaping the physical landscape of AI hardware, driving it towards greater miniaturisation.

Mimicking the brain’s network

Parallel to these developments is the ambition to imitate the brain’s dense network of neurons and synapses. Future AI chips are envisioned to incorporate even more interconnected and parallel structures. This enhancement is key to unlocking faster and more complex computational tasks, akin to the rapid and multifaceted processing observed in the human brain.

“As you create memories, you create more neural connections through activating more and more synapses. Structure and function are kind of inextricably linked – which is not the case in computing systems. So, there is potentially a whole spectrum of ways of thinking about applications where we take inspiration from the brain,” Kenyon asserts.

Adaptability and learning

A defining trait of the human brain is its remarkable ability to learn and adapt – this aspect is pivotal for the future of AI hardware. The integration of advanced forms of plasticity and learning algorithms in AI chips is anticipated, paving the way for self-adapting hardware. Such chips would be capable of adjusting to new tasks and environments without the need for external reprogramming, echoing the adaptability inherent in biological systems.

On how technology can mirror the brain’s processing abilities, Kenyon explains that neuromorphic computing has traditionally used standard electronic components, such as transistors, in novel ways to mimic the brain’s functionality. However, building a realistic model of a neuron takes numerous transistors and still falls short of the full complexity needed. Enter memristors, innovative devices capable of acting like neurons and synapses, supporting the brain’s method of local learning through changes in synaptic strength. This learning is influenced by the timing of electrical impulses across neurons, and memristors can replicate these dynamics, enabling local rules that scale up to global learning. Such mechanisms are reminiscent of quantum annealing in quantum computing, where local interactions lead to significant global effects. This allows the construction of complex networks from simple rules, mirroring the brain’s ability to adapt and process information.
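The timing-based local learning described above is commonly modelled in software as spike-timing-dependent plasticity (STDP). The article does not name a specific rule, so the following is only a minimal sketch of a pair-based STDP update, not a model of any memristor device. The function names, learning rates, time constant, and weight bounds are all illustrative assumptions.

```python
import math


def stdp_delta_w(dt_ms, a_plus=0.01, a_minus=0.012, tau_ms=20.0):
    """Weight change for one spike pair, where dt_ms = t_post - t_pre.

    ASSUMPTION: a_plus, a_minus and tau_ms are illustrative values only.
    """
    if dt_ms > 0:
        # Pre-synaptic spike came first: strengthen the synapse.
        return a_plus * math.exp(-dt_ms / tau_ms)
    if dt_ms < 0:
        # Post-synaptic spike came first: weaken the synapse.
        return -a_minus * math.exp(dt_ms / tau_ms)
    return 0.0


def update_weight(w, pre_spikes, post_spikes, w_min=0.0, w_max=1.0):
    """Apply the rule to every pre/post spike pair, then clamp the weight."""
    for t_pre in pre_spikes:
        for t_post in post_spikes:
            w += stdp_delta_w(t_post - t_pre)
    return min(w_max, max(w_min, w))


# Pre fires 5 ms before post: the synapse is strengthened.
w_up = update_weight(0.5, pre_spikes=[10.0], post_spikes=[15.0])

# Post fires 5 ms before pre: the synapse is weakened.
w_down = update_weight(0.5, pre_spikes=[15.0], post_spikes=[10.0])

print(w_up, w_down)
```

The rule is local in the sense that each synapse needs only the spike times of the two neurons it connects. No global error signal is involved, which is what makes this style of learning a natural fit for devices that store and update the weight in the same place.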

Sensory integration

Equally significant is the prospect of sensory integration in AI hardware. Taking a leaf from the brain’s book, future AI systems could integrate and process information from multiple senses. This approach holds immense potential for enhancing applications in robotics, autonomous vehicles, and IoT devices, enabling these systems to interact with the real world in more nuanced and sophisticated ways.

Kenyon says: “The concept [of neuromorphic computing] focuses on enhancing Edge Computing devices with greater intelligence, such as smart sensors in mobile phones and microcontrollers in cars, indicating a burgeoning industry poised for growth. This potential has garnered interest from various sectors, including the defence and security communities, who see value in low-power Edge Computing devices for their applications. The conversation extends to both Cloud Computing and Edge Computing, highlighting the broad spectrum of interest and application opportunities.”

Navigating ethical and societal terrain

With technological advancements come ethical, legal, and societal implications. The evolution of neuromorphic computing raises important questions and challenges, such as ethical concerns about AI consciousness and rights, and privacy issues. Addressing these concerns is crucial for the responsible development and application of brain-inspired AI hardware.

The potential impact of these developments spans various sectors, from healthcare to autonomous technologies, and the journey into the future of brain-inspired AI hardware calls for continued research, collaboration, and a thoughtful approach to the challenges ahead.

With each step, we move closer to unlocking new horizons in neuromorphic computing, inspired by the most sophisticated and mysterious organ known to us: the human brain.