CEO Rashid Galadanci co-founded Driver Technologies in 2018 with a mission to democratise advanced vehicle technologies and enhance safety for all drivers. Alongside him, COO Marcus Newbury brings 14 years of expertise in customer sales and mobility financial lending. Guiding the technological side of Driver Technologies is CTO Ben Heller, whose background spans mobile, web, and server-side technologies. Together, they lead a team committed to providing a safer driving experience.

In this exclusive two-part Q&A with Electronic Specifier, Rashid Galadanci, Marcus Newbury, and Ben Heller talk to Sheryl Miles about their insights, experiences, and the collective vision that drives Driver Technologies forward towards a safer and more secure driving future for everyone.

In your own words, can you tell me about your background and how Driver feeds into this?

Rashid Galadanci: At an early age, I gained an appreciation for the benefits of advancing vehicle safety and technology as my dad was in a severe car accident in Nigeria that he thankfully survived due to having a Volvo, which is known for its safety features but is not common in the region. Over time, I began observing the inequality in access to new vehicles equipped with safety technology around the world.

Through connecting with my co-founders, Marcus Newbury and Michael Yatco, we founded Driver Technologies to make roads safe and accessible for everyone through the technology available in our pockets via a smartphone.

Marcus Newbury: Prior to joining Driver Technologies, I worked in direct customer sales and account retention management, as well as a decade in mobility-specific financial lending. I gained an understanding of acquiring and cultivating new relationships in the mobility sector that positioned me well to co-found Driver Technologies with Rashid and Michael. I’ve also known Rashid since we were five years old, so we’ve been able to continue our friendship as we grow the company.

Ben Heller: I love a good challenge, and Driver Technologies is a prime example of a mission-driven company that’s changing the standard for automotive safety by refusing to take “no” for an answer. Our mission starts with the assumption that we already have everything we need to keep drivers safe right in our pockets. When I heard what Rashid and Marcus were trying to achieve using mobile phones, I knew I had to help them realise their vision.

What are some of the key challenges with vehicle safety?

MN: One of the top challenges for consumers is that the average car on the road is over 12.5 years old, which means it’s not equipped with the advanced technology available in newer cars. Additionally, the main challenges with hardware dash cams for fleets and consumers are the upfront cost and then having to purchase a new one every few years to have the most up-to-date technology.

How is Driver evolving to meet that need?

RG: To combat the inequality in accessing new automotive technology, we developed the Driver app in 2018 as a dash cam and safety app that needs no dedicated hardware, runs on your smartphone, and is designed to constantly evolve. Creating an app allows us to constantly update our technology without users having to purchase a new device every time.

Our mission is to democratise road safety; therefore, the basic version of our app is free, and our premium version is currently only $4.99 a month. We also offer customised solutions that outfit fleets with designated phones or tablets to run the Driver dash cam app, record the fleet’s trips, and analyse the data through our analytics platform, Driver Cloud. We can also optimise a fleet’s existing devices to run through Driver Cloud for easy analysis and incident sharing.

Could you tell me about the technology you use? Sensors, vision systems, security, etc?

BH: We pride ourselves on leveraging every smartphone capability you’ve heard of, and probably some you didn’t even realise you carried around with you all day. Normally, if you’re sending an email or a text message, you’re using only a small fraction of the capabilities you have at your disposal. Driver uses the accelerometer, gyroscope, GPS, CPU, GPU, and (on iOS) Apple Neural Engine chips to provide a detailed view into your vehicle’s motion trajectory while simultaneously recording and processing video frames using specialised computer vision models right on the device for real-time safety alerts.

How does the collision avoidance system work and what kind of situations can it detect?

RG: The Driver collision avoidance system is a single-device camera that allows us to understand the field of view, which includes a wide-angle view of the road covering the left, right, and centre of the vehicle. The goal is to give the driver useful information, without distracting them, by alerting them if there’s an object in front of them that they’re approaching rapidly relative to their state of acceleration or deceleration. If a driver is slowing down, we know that they have seen the object in front of them and are reacting. If the driver is not slowing down fast enough, then we’ll go ahead and provide what’s called a traditional forward collision alert, meaning the car in front of them started braking and the driver is not reacting.

BH: We also provide a tailgating alert, which is specifically focused on addressing bad driving behaviours that might have solidified into a habit. Tailgating is not necessarily an activity that will immediately result in an accident, but our driver scoring analysis shows that it could lead to one, which is why we monitor and alert the driver to stop.

From there, we fuse these alerts with what’s happening inside the vehicle by using the interior camera to monitor whether the driver is looking at the road. We can alert a driver if they’re distracted or drowsy and adjust the sensitivity of the outward-facing alerts. For example, if you’re looking away from the road, we’ll tell you immediately if we detect a tailgating activity, but if you’re attentive and decelerating, we will give you time to react before we send an alert. This is all performed locally on the device in order to protect the privacy of our drivers and speaks to the remarkable capabilities of mobile phones as real-time safety hardware.

Do you use sensors or AI algorithms to detect potential collisions?

BH: We leverage a proprietary algorithm that makes use of all the available sensor data to understand whether the driver is in danger or potentially in a near-accident scenario. We do this both in real time and after the fact to study various driving behaviours, which encourages a healthy feedback loop between the driving behaviour of our users and our ability to identify high-risk situations.

MN: Sometimes we’re surprised by things that we wouldn’t normally flag as unsafe but actually do result in accidents. Our team is constantly tweaking those algorithms and retraining our models to have an increasingly complex understanding of what it means to be in a real-world driving scenario. Using this anonymised road safety data, we’re able to continue our research and implement it in a simulated environment to understand, in a black box, what a non-human driver would do in this situation.

Can the sensitivity of the forward collision warning system be adjusted to suit different driving conditions?

BH: Yes, we can adjust the sensitivity of the forward collision warning system situationally on the fly, but we can also adjust based on a particular driving environment. If you’re somebody like me who tends to be in high-traffic urban environments, you may want your default setting to be low. When that baseline setting then gets automatically scaled by the system, you’re toggling between a low and a medium setting based on your level of attentiveness. If you’re in a really rural environment and there aren’t a lot of cars around, you may elect for a higher baseline sensitivity.
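
As a rough illustration of the scaling Heller describes, the sketch below raises a driver’s chosen baseline sensitivity by one level while they are inattentive, so a low baseline toggles between low and medium. The `Sensitivity` levels, the `effective_sensitivity` function, and the one-step bump are assumptions made for this example, not Driver’s actual implementation.

```python
from enum import IntEnum


class Sensitivity(IntEnum):
    """Hypothetical alert sensitivity levels (not Driver's real settings)."""
    LOW = 0
    MEDIUM = 1
    HIGH = 2


def effective_sensitivity(baseline: Sensitivity, attentive: bool) -> Sensitivity:
    """Return the sensitivity to use right now.

    Assumption: an attentive driver keeps their baseline, while an
    inattentive driver is bumped up one level, capped at HIGH.
    """
    if attentive:
        return baseline
    return Sensitivity(min(baseline + 1, Sensitivity.HIGH))


# An urban driver with a LOW baseline toggles between LOW and MEDIUM.
urban_attentive = effective_sensitivity(Sensitivity.LOW, attentive=True)
urban_distracted = effective_sensitivity(Sensitivity.LOW, attentive=False)
```

Under these assumptions, a rural driver who elects a higher baseline would stay at the top level whether or not they are attentive, because the bump is capped.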

RG: We really want this to be a system that’s appropriate for a lot of different types of drivers, so we are working towards a feature set that will automatically determine the environment and set that for you based on the number of other vehicles and pedestrians that we see on the road. Pretty soon, we’ll be inferring that default sensitivity from the context around the driver.

Are there any specific criteria or thresholds that trigger the forward collision warning alerts?

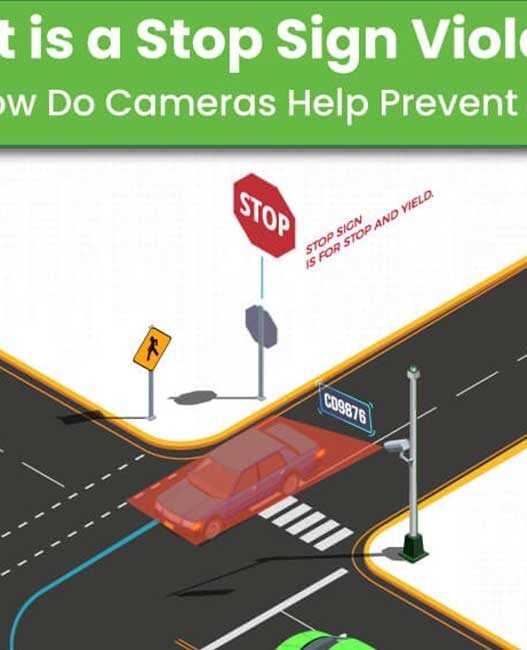

BH: Any time we detect that the time to impact is less than the driver’s available reaction time (based on attentiveness, speed, and other factors), we’ll throw an alert. This can happen at a single point in time (e.g., driving at a parked car and failing to brake at the expected distance) and also over a period of time (e.g., travelling closely behind another vehicle at increasingly high speeds). We are also beginning to introduce more contextually aware alerts based on our knowledge of street signs and other road-based situations.

For example, we have a cloud-based model, which we’re going to be moving to the edge, that alerts when a driver runs a stop sign. There are a number of interesting new alert classes based on our understanding of situational awareness around the vehicle, which have to do with lane changes and other aspects of what is considered a legal versus illegal driving manoeuvre. We started with a notion of safety while building our forward collision warning alerts, and now we’re moving towards a more nuanced model that combines safety and liability.
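
The time-to-impact criterion Heller describes for forward collision alerts can be sketched in a few lines. This is a minimal illustration under stated assumptions: time to impact is approximated as the gap divided by the closing speed, and the reaction-time figures (1.5 seconds when attentive, one extra second when distracted) are placeholder values chosen for the example. The names `Observation`, `time_to_impact`, `reaction_time_s`, and `should_alert` are hypothetical and do not come from Driver’s proprietary algorithm.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    """One hypothetical snapshot of the road ahead."""
    gap_m: float              # distance to the object ahead, in metres
    closing_speed_mps: float  # positive when the gap is shrinking
    attentive: bool           # is the driver looking at the road?


def time_to_impact(gap_m: float, closing_speed_mps: float) -> float:
    """Seconds until contact at the current closing speed (constant-speed assumption)."""
    if closing_speed_mps <= 0:
        return float("inf")  # not closing on the object, so no impact is predicted
    return gap_m / closing_speed_mps


def reaction_time_s(attentive: bool, base_s: float = 1.5,
                    distracted_penalty_s: float = 1.0) -> float:
    """Assumed available reaction time; a distracted driver needs longer."""
    return base_s if attentive else base_s + distracted_penalty_s


def should_alert(obs: Observation) -> bool:
    """Alert when time to impact falls below the driver's reaction time."""
    return time_to_impact(obs.gap_m, obs.closing_speed_mps) < reaction_time_s(obs.attentive)
```

With a 30-metre gap closing at 15 metres per second, the time to impact is 2 seconds: under these placeholder values an attentive driver would get no alert, while a distracted driver would.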