AI has advanced to the stage where it can now make fundamental improvements to a criminal organisation’s business model, and as such, AI techniques are likely to be adopted by criminal enterprises with increasing regularity.

With that in mind, Palmer highlighted some use cases where AI or machine learning has real potential to improve the profitability of a criminal enterprise. “Or to put it another way, this is my thesis for a not very ethical, highly illegal but extremely high-growth startup,” he joked.

Methods of attack

Despite the perception that cyber security doesn’t seem to be making any progress, things have improved over the last few years. Critical short-lived vulnerabilities aside (software bugs like Heartbleed and Shellshock), we are long past the point when a teenager in their bedroom can just type ‘FIREWALL OFF’, get through an organisation’s defences and cause havoc.

Instead, the primary way criminals infiltrate an organisation is impersonation – pretending to be someone whom that organisation, company or individual knows or trusts. Even super-hardened nuclear power stations or cloud data centres need human beings somewhere within their operations, and those people can still be tricked. Palmer added: “It doesn’t matter what your investment is in technology, attacking the people is often the easiest way into an organisation.”

We are all used to receiving spam emails asking us if we’re looking for love, or from an African prince seeking investment, and we’ve been trained not to click on them. These types of attack don’t fool too many people these days. However, things are becoming more sophisticated.

“As an example, I have received an email recently that appeared to come from a colleague,” Palmer continued. “It covered a topic that we had conversed about verbally (rather than digitally) the previous day, when we walked from the front door of my office to a coffee shop around the corner.

“We strongly suspected that from this, and a couple of other incidents that happened on the same day, that there was someone hanging around outside our offices with photos of people taken from the website, listening to people’s conversations and then sending tailored attacks.”

Palmer was fortunate as he had noticed mistakes in the email such as the spelling of certain words, and some issues with the signature at the bottom. “However, the primary reason I didn’t click on the email was that in reality, it was just too polite,” he added. “I knew for example, that my colleague wouldn’t put a smiley face emoji at the end of the email! So I immediately knew something wasn’t right.”

This sort of human-led attack is much more likely to be successful than those basic spam emails that we are all historically used to receiving. However, this method is very expensive. Criminals can’t get to internet-scale by hanging around outside someone’s place of work or house in order to personalise some spam emails.

However, AI can really help criminals in this regard, and it’s very likely that we will see machine learning and AI techniques used to tailor spear phishing attacks. Palmer continued: “Imagine a piece of malicious software on your laptop that can read your emails, diary, messages, chat etc, and as well as being able to read, it starts to conceptually ‘understand’ the content of that traffic and its context.”

Criminals will be able to use that software to understand how you communicate with the different people in your life. How you talk to your partner will be very different to how you talk to your boss, which will be different to how you talk to your customers. Once that context has been established, the software can start faking communications in a way that’s very likely to be followed up on.

Palmer added: “We’re now getting to the point where people are likely to click on these malicious emails, if they sound like they’ve come from a trusted source and they are contextually relevant based on how we’ve interacted with certain individuals in the past.”

Palmer stressed that the industry is currently not sure how that sort of attack can be blocked. We may feel that these techniques are a long way off. However, in truth they are relatively imminent. There are already some interesting technologies out there that are moving AI forward. For example, there are bot calendar assistants designed to take all the pain out of organising meetings: individuals converse with the bot in natural language, and the bot understands the request, suggests available diary slots and sends out an invite.

“This is not that different to the level of understanding required to start spear phishing,” Palmer added. “All the criminals would need to do is establish the personalisation element so that the email sounds like how the target would normally interact with a certain individual. And anyone who has come across Twitter bots or Facebook bots will know that this sort of personalisation is already out there and can be easily replicated.”

Palmer explained that there are certain university course modules (which you can undertake in your pyjamas sat on your sofa via distance learning) that will give criminals all the skills they’d need in order to nail the pieces of code together to achieve this type of attack. “These sort of attacks are relatively imminent, you won’t need to have a PhD in order to pull them off, and it will enable massive scaling for the criminals, so why wouldn’t they do it?”

Foot in the door

So as AI progresses and becomes more sophisticated, how will it influence illegal activity once the criminals have actually infiltrated an organisation? Certainly conventional data theft isn’t going to go away, and a huge number of breaches are still occurring. However, as well as stealing data from our databases, cloud or on-premise stores, we’re also going to move into an era of ambient data theft.

“Workplaces and organisations are starting to take advantage of the microphones that are lying around within devices. It’s become quite apparent recently that there are quite a number of people listening behind the scenes to what we say to Alexa, and we’re also hearing stories that the CIA are targeting smart TVs to eavesdrop on people in their living rooms. However, many people take the view that ‘all I’m doing in my living room is watching Game Of Thrones’ so who cares.”

However, the risk balance of this sort of activity in the workplace is very different. Despite this, organisations and businesses are not really having that conversation in the same way that we are with regard to the Alexa in our kitchen or the smart TV in our living room.

Criminals are already attempting to hack video conferencing units and smart TVs in law or PR firms, for example, environments where clients will quite often tell the company something confidential out loud. As a criminal, you don’t have to go out and steal the data from laptops, you merely have to hack an audio device and eavesdrop on the conversation.

This sort of hack already goes on, but in the same way as hanging around outside an office, this technique doesn’t scale very well. “Criminals don’t want to spend all their time eavesdropping on hours and hours of board meetings,” commented Palmer. “What changes the balance here is the enormous amount of progress we’ve seen in speech-to-text AI.

“It’s something that’s been infuriating for years, and not quite been good enough. However, there have been significant improvements over the last six to 12 months.” As a consequence, criminals no longer have to covertly sit in on these meetings. Once the audio system has been hacked, the audio can be streamed out to a speech-to-text system and searched for pertinent pieces of information – whether that’s undisclosed M&A activity or items from a particular lawsuit that could be used for extortion or sold to the other side of the case.

“And they won’t have to write these techniques themselves,” Palmer added. “Google, Amazon, Alibaba and Microsoft all offer these types of service. They’re non-real-time but are far more powerful than what we’ve traditionally been used to, and are almost certainly good enough to enable these types of attacks.”

Palmer also explained that we could even see a more targeted situation where the audio attack is only activated when a certain person enters the room. This could incorporate the use of facial recognition, audio detection, and even sensing when particular animated emotions are being displayed within the meeting.

And Palmer highlighted that these sorts of events are also imminent. We’ve all seen spy movies where agents use technology to eavesdrop on sensitive conversations, but it’s now becoming really simple and easy for run-of-the-mill criminals. And we are relatively unprepared for it. The unfortunate truth is that none of us are ready for an environment where we have to be cautious about how we have verbal conversations.

“I used to work for the UK intelligence services,” he added. “And when you work in that environment it gets ingrained in you to ask whether a particular space is safe to have a classified conversation. However, I’m pretty sure none of us in our day-to-day jobs think like that, and neither do the majority of board level management.”

Widespread harm

So, as a criminal, how would you cause widespread harm to an organisation? Oil and gas companies, for example, have a lot of enemies, and are always worried that they could be hacked and their assets, such as oil rigs, sabotaged.

From a company perspective, they are in a tricky position with regard to cyber security. They are geopolitically interesting, i.e. they could attract unwanted attention from very well-funded nation state organisations, and they are certainly going to have the attention of environmental groups and individual hacktivists. “So regardless of the morality of their overall work, when it comes to protecting their business, they are going to have their hands full,” said Palmer.

However, he advised that criminals wanting to harm an oil and gas company would be unwise to target an actual oil rig. Even if hackers did manage to essentially ‘turn off’ an oil rig, someone is going to notice, and there are going to be manual overrides, so those systems will be back up and running again fairly quickly.

“Instead I would change the underlying geophysical survey data that resulted in the oil rigs being built in that specific location in the first place,” he added. “And this may also influence how the organisation bids for drilling and mining rights. This would be far more effective. If you were able to get all the oil rigs in a certain project sent to the wrong location and they came up dry as a consequence, the long term harm that this would do to the organisation is going to be far more significant.”

With this in mind, it’s unsurprising that this data is very well-defended. It’s almost certainly going to be in the cloud or in an on-premise data centre, so it’s going to be very difficult to sneak any code into these environments. So Palmer suggested that rather than attack the data at the point of storage, hackers would be better off trying to attack the data at the point of collection.

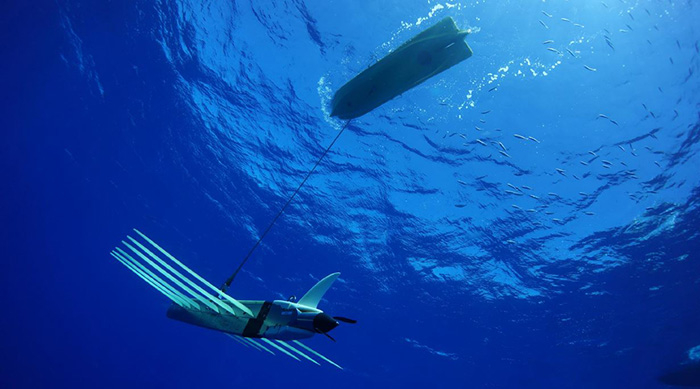

He added: “When underwater data mapping is being undertaken in the oil and gas industry, fleets of tens or hundreds of submersibles are towed in massive shoals, creating an incredible picture of the sea floor (see picture above). The maths to prevent them getting tangled up in the huge shoals they travel in is pretty impressive on its own, let alone the underlying geo-sensing data involved.

“However, I guarantee that no matter how smart these pieces of equipment are, at the end of the day they are small IoT devices that probably won’t be running anti-virus or have any high-end cyber security protection. So if we’re going to try and influence the decision-making process of an oil and gas company, I would propose attacking at the edge rather than the centre.”

As such, if criminals set up attacks at the edge and want to alter the data flowing through hundreds of sensors before it’s uploaded to the cloud or the data centre, they are not going to be able to use linear programming techniques to achieve the subtle changes that would be required. Instead, applying machine learning to revise the data while it’s in transit is the way to go about the attack.

Held to ransom

Another area of cyber security where AI will potentially give criminals a leg-up is ransomware – the extortion of individuals or companies by making their data or systems unavailable. Most large organisations that suffer a ransomware attack these days restore their data from backup rather than pay up. Very few pay the ransom any more, so most of the revenue generated by this type of attack comes from smaller organisations that don’t have the right backups in place, or from individual consumers.

Palmer added: “I don’t think we will see AI supercharging ransomware attacks in the future to make them more sophisticated. There have been some claims made around this, but fundamentally ransomware is just a very fast-moving attack. Typically even large organisations can be totally infected within around two minutes, if they are vulnerable.

“So why would you employ AI – it’s not going to make the attack any quicker, it doesn’t need to be clever and it’s essentially a smash and grab type of attack. But if criminals are having difficulty in getting people to pay up when the ransomware takes hold, perhaps AI can help in this instance.”

Palmer explained that when it comes to smaller organisations and consumers paying up, not very many know their way around a Bitcoin wallet or other cryptocurrencies well enough to actually pay the ransom.

As a result, criminals have started setting up human call centres as part of their business model. There, you can talk to someone in your native language who acts as a front for the criminal group and who will talk you through how to obtain some digital currency, pay the ransom and get your computer unlocked or your data restored. Palmer joked: “The customer service at these call centres is often more helpful than your average mobile phone provider.”

Of course, these people represent a risk for the criminals. If a criminal organisation is employing dozens or hundreds of people to work in call centres undertaking illegal activity, there’s a much greater risk for the criminal enterprise that someone is going to get caught and forced to give evidence.

Like most businesses that run a lot of call centres, the criminals will start using bots to support individuals on their way through acquiring digital currency and paying their ransom. However, these bots are likely to be a great deal more advanced than the ones we’re used to seeing on travel booking websites, for example.

“Something like Little Bing for example, which is only available in India, Indonesia and China, is made by Microsoft in the region,” added Palmer. “It’s amazing. It has been modelled on a 17-year-old girl and seeks to create an emotional attachment to the person it is chatting with. It’s got around 660 million registered users on 40 different types of chat systems. It has its own TV show, it writes poetry and can even read children’s books using the correct tones etc. having extracted the meaning from the text.

“And if we’ve already got chatbots that are this successful, I would absolutely be using this in my call centre for illegal activity if it means they don’t have to employ actual people. It’ll also have much better operational security than the people you would be employing in your call centre.”

“AI has again got the criminals’ back here. Microsoft, for example, has just introduced an AI-led software testing service. It’s pretty spectacular. You can upload any computer program in the world, and it will go off and find all the bugs for you. Microsoft use it themselves to locate bugs within Windows and it has been very successful.”

The reason that AI is so useful here is that, rather than explore everything that a program could potentially ever do, it learns every time it discovers a potential attack, which makes it far more efficient at finding attacks in the future.

So every time that anyone anywhere in the world uses this service, it gets smarter and more effective. However, it’s completely impossible to put safeguards in place to stop people uploading other people’s programs. So a criminal enterprise could upload any program that is used in a typical business and get a list of all the bugs that it could potentially find useful for attacks.

Palmer continued: “It’s a brilliant tool and will definitely be used by the good guys to make their software better, but that is only useful if every organisation is doing all their updates, all the time. Unfortunately, in reality we know that’s not the case.”

In summary, this article offers a manifesto for a criminal business model. We now know how to get inside an organisation, and we’ve discussed the different types of mission that we can execute once inside. We can also employ call centres if we need them, discover bugs and ultimately make a lot of money.

The techniques discussed here are very likely to manifest themselves in business over the next few years because, crucially, they enable a much greater scale of operation within criminal business models. They don’t incorporate AI just because it’s an industry buzzword. They are good techniques that will make criminals rich.

The most important thing any of us can do as individuals is update our software and have better passwords. And when it comes to higher risk individuals who are dealing with information that should genuinely be kept secret, then this issue is going to become more and more important.

“Criminals still go after the crimes that are easiest to make money from,” concluded Palmer. “It’s getting harder and harder to hack your iPhone for example, but the smart TV sat in the corner or video conferencing unit has probably never been updated, won’t have a security team attached to it, and may well still have the original password attached to it that it had when it left the factory, and therefore is a very easy target.”

As the saying goes, ‘keep your friends close and your enemies closer’. This article serves to put us in the minds of the cyber criminals, to see what sort of techniques they’ll be using in the coming years to try and launch attacks against our sensitive information. And if we know their methods, we can formulate a plan to stop them.