Paige Hookway at Electronic Specifier takes a look at the top 5 AI products released in November 2025.

Microchip MCP server to power AI-driven product data access

Microchip Technology has announced the launch of its Model Context Protocol (MCP) Server. An AI interface, the MCP Server connects directly with compatible AI tools and large language models (LLMs) to provide the context these systems need to answer questions.

Through simple conversational queries, the MCP Server enables users to retrieve verified, up-to-date Microchip public data, including product specifications, datasheets, inventory, pricing, and lead times.

Next-generation power solutions for AI data centres

Flex Power Modules, in partnership with Renesas, delivers advanced board-mounted power management solutions tailored for AI workloads.

These Voltage Regulator Module (VRM) and Vertical Power Delivery (VPD) products are designed for CPUs, GPUs, FPGAs, ASICs, and accelerator cards, offering fast transient response, high efficiency, and strong thermal performance.

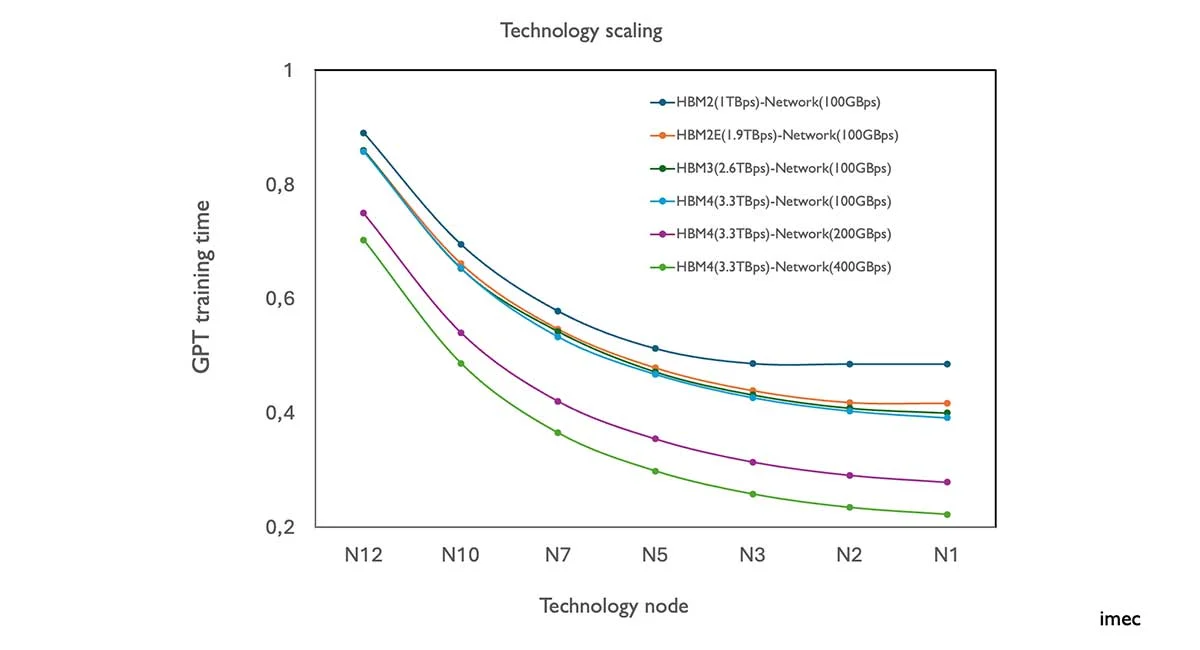

imec unveils a tool for AI datacentre design and optimisation

At Super Computing 2025, imec announced the launch of imec.kelis, an analytical performance modelling tool designed to revolutionise the design and optimisation of AI data centres. Early adopters are already experimenting with the tool, signalling strong market interest.

The AI datacentre landscape is undergoing rapid transformation. As workloads scale to trillions of parameters and energy demands surge, system architects face mounting pressure to balance performance with sustainability and cost. Traditional simulation methods are often slow, opaque, or too narrow in scope. Imec.kelis addresses this gap by offering a fast, transparent, and validated modelling framework that enables informed decision-making across the full stack – from chip to datacentre. It empowers teams to explore architectural trade-offs, optimise resource allocation, and accelerate innovation in a field where time-to-insight is critical.

Breakthrough CXL memory solution for AI workloads

As enterprises accelerate adoption of large language models (LLMs), generative AI, and real-time inference applications, a new bottleneck has emerged: memory scale, bandwidth, and latency.

XConn Technologies and MemVerge have announced a joint demonstration of a Compute Express Link (CXL) memory pool designed to break through the AI memory wall.

STMicroelectronics expands Model Zoo to accelerate physical AI

STMicroelectronics has unveiled new models and enhanced project support for its STM32 AI Model Zoo to accelerate the prototyping and development of embedded AI applications.

This marks a significant expansion for what is already the industry’s largest library of models for vision, audio, and sensing to be embedded in equipment such as wearables, smart cameras and sensors, security and safety devices, and robotics.