This has led to a growing need for smaller, more efficient models – enter Small Language Models (SLMs).

These smaller models are designed to perform language-related tasks while requiring significantly fewer resources. Unlike their LLM counterparts, they do not aim for broad generalisation across multiple domains. Instead, SLMs are optimised for efficiency, making them better suited to applications where speed, privacy, and cost-effectiveness are top priorities.

Here, we will explore what SLMs are, how they differ from LLMs, and the key use cases driving their increasing adoption. SLMs are rapidly emerging as a practical solution for real-world applications that demand lightweight, scalable, and privacy-preserving AI, especially at the Edge.

What are Small Language Models (SLMs)?

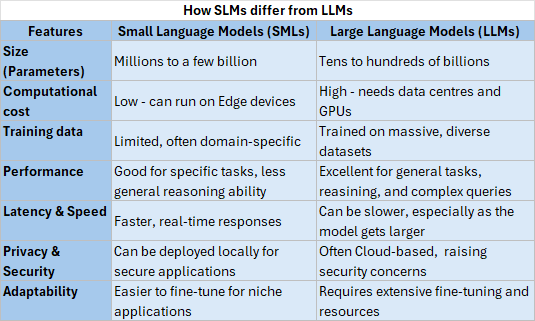

SLMs are a category of AI models designed to perform natural language processing (NLP) tasks with significantly fewer computational resources than LLMs. While LLMs such as the GPT and Gemini families often contain tens or hundreds of billions of parameters, SLMs typically range from millions to a few billion parameters, a range that aims to balance capability against efficiency.

Unlike their larger counterparts, SLMs are optimised for speed, lower energy consumption, and targeted use cases. They can run on Edge devices, personal computers, and even embedded systems without requiring the high-end GPUs or cloud infrastructure that LLMs depend on.
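
To give a feel for why parameter count matters for on-device deployment, here is a rough back-of-the-envelope sketch. It estimates only the memory needed to store the weights (parameters multiplied by bytes per parameter). The model sizes and the helper name `weight_memory_gb` are illustrative assumptions, not figures for any specific model, and real memory use will be higher once activations and runtime overhead are included.

```python
# Rough weight-memory estimate: parameters x bytes per parameter.
# Ignores activations, caches, and runtime overhead, so real usage is higher.

BYTES_PER_PARAM = {
    "fp32": 4.0,  # 32-bit floating point
    "fp16": 2.0,  # 16-bit floating point
    "int8": 1.0,  # 8-bit quantised weights
    "int4": 0.5,  # 4-bit quantised weights
}


def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Return approximate weight storage in gigabytes (10^9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


# Hypothetical model sizes, chosen only to illustrate the difference in scale.
examples = {
    "SLM, 500M params": 500e6,
    "SLM, 3B params": 3e9,
    "LLM, 70B params": 70e9,
}

for name, params in examples.items():
    sizes = ", ".join(
        f"{precision}: {weight_memory_gb(params, precision):.2f} GB"
        for precision in BYTES_PER_PARAM
    )
    print(f"{name} -> {sizes}")
```

Under these assumptions, a model with a few billion parameters fits in the memory of a typical laptop or recent smartphone, particularly at reduced precision, whereas a model with tens of billions of parameters does not.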

Key characteristics of SLMs:

- Compact size – SLMs contain fewer parameters, reducing memory and processing requirements while maintaining functional accuracy for specific tasks.

- Efficient computation – They require less power and computing resources, enabling deployment on local devices without reliance on extensive cloud computing.

- Task-specific optimisation – Unlike general-purpose LLMs, SLMs are often trained for specific applications, such as legal document summarisation, medical text analysis, or chatbot functionalities.

- Low latency – Due to their smaller size, SLMs can process and generate responses faster than LLMs, making them suitable for real-time applications.

- Privacy-focused deployment – SLMs can be deployed locally, ensuring sensitive data does not have to be processed in the cloud, an essential feature for industries like finance and healthcare.

While SLMs do not match LLMs in terms of broad generalisation and deep contextual reasoning, their efficiency and practicality make them an attractive solution for organisations seeking cost-effective and privacy-conscious AI implementations.

Where are SLMs deployed?

The efficiency, lower computational cost, and privacy advantages of Small Language Models (SLMs) make them well-suited for a variety of applications. While Large Language Models (LLMs) are designed for broad, general-purpose tasks, SLMs excel in specialised, resource-constrained, and privacy-sensitive environments. Below are some of the key areas where SLMs are proving to be valuable.

Edge AI & on-device applications

SLMs are lightweight enough to run on smartphones, tablets, IoT devices, and embedded systems without requiring an internet connection. This enables low-latency AI processing directly on a device, enhancing user experience and reducing reliance on cloud infrastructure. Examples include:

- Smart keyboards with predictive text and grammar correction.

- On-device voice assistants that operate without sending data to external servers.

- Offline speech-to-text transcription and real-time translation.

Enterprise and industry-specific AI

Many industries require AI models tailored to domain-specific tasks rather than general-purpose language processing. SLMs can be fine-tuned on industry datasets to provide highly relevant insights and automation. Some example industries:

- Healthcare: AI-powered medical note summarisation and diagnostic assistance that runs locally on hospital systems.

- Finance: Secure financial chatbots that help customers manage accounts without sending sensitive data to external servers.

- Legal: Contract analysis and summarisation tools that help legal professionals process documents efficiently.

Privacy-first AI solutions

Organisations dealing with confidential or sensitive data are increasingly turning to SLMs, as they allow AI processing to be performed locally or on private servers, ensuring data security and compliance with regulations like GDPR. Example areas where this can be used:

- Healthcare AI assistants that process patient records without transmitting them to the cloud.

- Corporate AI chatbots that handle internal queries without exposing company data to third-party AI providers.

Cost-effective AI deployment for business

Running large-scale AI models can be expensive due to the computing power required. SLMs offer a more affordable alternative, making AI-driven automation accessible to small and medium-sized enterprises (SMEs). SMEs might use them for:

- AI-driven customer support bots that reduce reliance on human agents.

- E-commerce recommendation engines that suggest products without high cloud-computing costs.

Low-latency applications & real-time processing

SLMs can operate with significantly lower response times than LLMs, making them ideal for applications where real-time processing is crucial. For example:

- AI-powered customer service bots that provide instant responses without delays.

- Robotics and automation, where AI models must react to commands in milliseconds.

- Augmented Reality (AR) and Virtual Reality (VR) assistants that enhance user interaction in real-time.
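
The latency advantage described in this section can be roughly approximated with a common rule of thumb: when a model generates text one token at a time for a single user, each token requires reading all of the weights from memory, so memory bandwidth caps the generation speed. The sketch below applies that rule. The bandwidth figure and model sizes are hypothetical placeholders, and the result is an upper bound that ignores compute time, cache reads, and batching.

```python
# Rule of thumb for single-stream text generation: every new token requires
# reading all weights once, so memory bandwidth bounds the decode speed.

def max_tokens_per_second(num_params: float, bytes_per_param: float,
                          bandwidth_gb_s: float) -> float:
    """Upper bound on tokens/s when weight reads dominate the cost."""
    model_bytes = num_params * bytes_per_param
    return bandwidth_gb_s * 1e9 / model_bytes


def min_ms_per_token(num_params: float, bytes_per_param: float,
                     bandwidth_gb_s: float) -> float:
    """Lower bound on per-token latency in milliseconds."""
    return 1000.0 / max_tokens_per_second(
        num_params, bytes_per_param, bandwidth_gb_s
    )


# Hypothetical values for illustration only, not measurements of any device.
ASSUMED_BANDWIDTH_GB_S = 50.0  # placeholder for a mobile-class memory system
BYTES_PER_PARAM_INT4 = 0.5     # 4-bit quantised weights

for name, params in [("3B-parameter SLM", 3e9), ("70B-parameter LLM", 70e9)]:
    tps = max_tokens_per_second(
        params, BYTES_PER_PARAM_INT4, ASSUMED_BANDWIDTH_GB_S
    )
    ms = min_ms_per_token(params, BYTES_PER_PARAM_INT4, ASSUMED_BANDWIDTH_GB_S)
    print(f"{name}: at most {tps:.1f} tokens/s, at least {ms:.0f} ms per token")
```

Because the bound scales inversely with model size, a model roughly twenty times smaller can, on the same hardware, generate tokens roughly twenty times faster under this simplified view.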

Multilingual & localised AI

Unlike LLMs, which tend to prioritise widely spoken languages, SLMs can be fine-tuned for niche languages, dialects, and regional requirements, making AI more inclusive and accessible worldwide. Applications include:

- Localised customer support bots in underrepresented languages.

- AI-powered translation models optimised for specific regional dialects.

- Speech-to-text models tailored for localised accents.

Specialised research & scientific applications

SLMs are also being used in research fields that require domain-specific knowledge and AI-driven insights without the need for large-scale models. For instance:

- AI-assisted drug discovery that processes scientific literature and research data.

- AI-powered climate modelling that analyses environmental data efficiently.

- AI code assistants that help developers generate or debug code within specific programming environments.

Challenges and limitations of SLMs

While SLMs offer significant advantages in efficiency, cost, and privacy, they also come with inherent limitations that affect their performance and applicability. One of the main drawbacks is their limited ability to generalise across diverse topics. Unlike LLMs, which can process a broad range of queries and generate human-like responses across multiple domains, SLMs are often optimised for specific tasks. This means they may struggle with unfamiliar inputs or complex reasoning that extends beyond their training data.

Another challenge is their reduced contextual understanding. Due to their smaller parameter count and lower memory capacity, SLMs have difficulty processing long contextual dependencies in text. This makes them less effective for tasks requiring deep comprehension, such as multi-turn conversations or highly nuanced text generation.

Similarly, because they are trained on smaller datasets, they tend to have lower accuracy when handling open-ended tasks. While they perform well for targeted applications, they may struggle with broader, more abstract problem-solving or tasks requiring extensive world knowledge.

Smaller training datasets also mean that SLMs are more susceptible to biases. If the training data is not sufficiently diverse or well-balanced, the model may reinforce certain biases, leading to misinterpretations or inaccuracies, particularly in sensitive fields such as law, healthcare, and finance.

Compounding this, SLMs require careful fine-tuning to perform well in specific use cases. Unlike LLMs, which can generalise effectively without extensive customisation, smaller models often need additional training or domain-specific data to achieve optimal results. This adds to the complexity of their deployment, requiring expertise in fine-tuning techniques and dataset curation.

While SLMs are designed to be lightweight and efficient, they inevitably come with performance trade-offs. The lower computational requirements result in faster response times, but this often comes at the expense of accuracy, especially in tasks that demand high-level abstraction, nuanced decision-making, or cross-domain expertise. Additionally, the growing presence of mid-sized models, those with parameter counts between five and ten billion, poses a competitive challenge. These models strike a balance between efficiency and capability, potentially reducing the demand for extremely small models in certain applications.

Despite these limitations, SLMs remain a valuable tool where efficiency, privacy, and real-time processing are priorities. As AI research progresses, advancements in model compression, knowledge distillation, and training optimisation may help address some of these challenges, further expanding the role of SLMs in AI-driven applications.
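
For readers unfamiliar with knowledge distillation, the following is a minimal sketch of one commonly used formulation: a small "student" model is trained to match the temperature-softened output distribution of a larger "teacher" model. The logit values are made up for illustration, the function names are our own, and in practice this term is usually combined with a standard loss on the true labels inside a full training loop.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert raw scores to probabilities, softened by the temperature."""
    scaled = [x / temperature for x in logits]
    largest = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - largest) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    teacher_probs = softmax(teacher_logits, temperature)
    student_probs = softmax(student_logits, temperature)
    kl = sum(
        p * math.log(p / q)
        for p, q in zip(teacher_probs, student_probs)
        if p > 0
    )
    return temperature ** 2 * kl


# Made-up logits over three classes, for illustration only.
teacher = [4.0, 1.0, 0.5]
student_close = [3.5, 1.2, 0.4]  # roughly agrees with the teacher
student_far = [0.5, 1.0, 4.0]    # disagrees with the teacher

print("close student loss:", distillation_loss(student_close, teacher))
print("far student loss:  ", distillation_loss(student_far, teacher))
```

A student whose outputs resemble the teacher's receives a lower loss, which is the signal that lets a smaller model inherit some of a larger model's behaviour.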