Super Micro Computer showcased its advanced AI infrastructure solutions at NVIDIA GTC in Washington, D.C., highlighting systems tailored to meet the stringent requirements of federal customers.

Supermicro announced plans to deliver next-generation NVIDIA AI platforms, including the NVIDIA Vera Rubin NVL144 and NVIDIA Vera Rubin NVL144 CPX, in 2026. Additionally, Supermicro introduced US-manufactured, TAA (Trade Agreements Act)-compliant systems, including the high-density 2OU NVIDIA HGX B300 8-GPU system with up to 144 GPUs per rack, and an expanded portfolio featuring a Super AI Station based on NVIDIA GB300 and the new rack-scale NVIDIA GB200 NVL4 HPC solutions.

“Our expanded collaboration with NVIDIA and our focus on US-based manufacturing position Supermicro as a trusted partner for federal AI deployments. With our corporate headquarters, manufacturing, and R&D all based in San Jose, California, in the heart of Silicon Valley, we have an unparalleled ability and capacity to deliver first-to-market solutions that are developed, constructed, validated (and manufactured) for American federal customers,” said Charles Liang, President and CEO, Supermicro. “The result of many years of working hand-in-hand with our close partner NVIDIA – also based in Silicon Valley – Supermicro has cemented its position as a pioneer of American AI infrastructure development.”

Supermicro is expanding its latest solutions based on NVIDIA HGX B300 and B200, NVIDIA GB300 and GB200, and NVIDIA RTX PRO 6000 Blackwell Server Edition GPUs that provide unprecedented compute performance, efficiency, and scalability for key federal government workloads such as cybersecurity and risk detection, engineering and design, healthcare and life sciences, data analytics and fusion platforms, modelling and simulation, and secure virtualised infrastructure.

US-based manufacturing is a cornerstone of Supermicro’s business. All government-optimised systems are developed, constructed, and rigorously validated at its global headquarters in San Jose, California, ensuring full compliance with the TAA and eligibility under the Buy American Act. This domestic production capability enhances supply chain security and meets federal requirements for trusted, high-quality technology solutions.

Through its collaboration with NVIDIA, Supermicro is set to introduce the NVIDIA Vera Rubin NVL144 and NVIDIA Rubin CPX platforms in 2026. These platforms will deliver higher AI training and inference performance than their predecessors, empowering organisations to handle complex AI workloads efficiently.

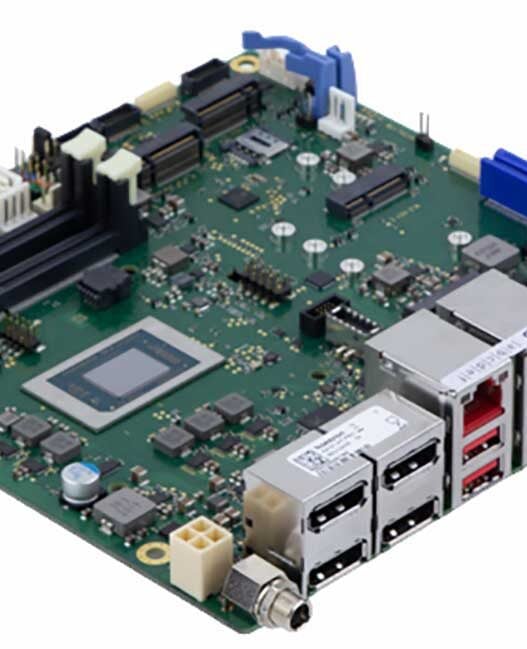

Supermicro is also unveiling its most compact system, a 2OU NVIDIA HGX B300 8-GPU server, featuring an OCP-based rack-scale design powered by Supermicro data centre building block solutions. This architecture supports up to 144 GPUs in a single rack, delivering outstanding performance and scalability for large-scale AI and HPC deployments in government data centres.

Supermicro is expanding its government-focused portfolio by optimising for the NVIDIA AI Factory for Government reference design. The NVIDIA AI Factory for Government is a full-stack, end-to-end reference design that provides guidance for deploying and managing multiple AI workloads on-premises and in the hybrid cloud while meeting the compliance needs of high-assurance organisations.

The portfolio now includes the Super AI Station based on NVIDIA GB300, and the rack-scale NVIDIA GB200 NVL4 HPC solutions, both optimised for federal environments with enhanced security, reliability, and scalability to meet stringent government standards.

Support for new accelerated networking

Further highlighting its first-to-market development with new NVIDIA technologies, Supermicro also announced support for the new NVIDIA BlueField-4 DPU and NVIDIA ConnectX-9 SuperNIC in gigascale AI factories. When available, these accelerated infrastructure technologies will be integrated into new Supermicro AI systems to provide faster cluster-scale AI networking, storage access, and data processing offload for the next generation of NVIDIA AI infrastructure. Supermicro’s modular hardware design will enable the rapid integration of new technologies such as NVIDIA BlueField-4 and NVIDIA ConnectX-9 into existing system designs with minimal re-engineering, speeding up time-to-market and reducing development costs.

New Super AI Station brings AI server power to the desktop

Continuing its record of first-to-market implementation of new NVIDIA technologies, Supermicro announces the new liquid-cooled ARS-511GD-NB-LCC Super AI Station. By bringing the server-grade GB300 Superchip into a deskside form factor, this first-of-its-kind platform delivers more than 5x the AI PFLOPS of traditional PCIe-based GPU workstations. The Super AI Station is a complete solution for AI model training, fine-tuning, and application and algorithm prototyping and development. It can be deployed on-premises for low latency and full data security, and supports models of up to 1 trillion parameters. This self-contained platform is aimed at government agencies, startups, deep-tech companies, and research labs that may not have access to traditional server infrastructure for AI development and cannot use cluster-scale or cloud AI services because of availability, cost, privacy, or latency concerns.

The Super AI Station can be used in a desktop or rack-mounted environment and is delivered as a fully integrated, all-in-one solution, including:

- NVIDIA GB300 Grace Blackwell Ultra Desktop Superchip

- Up to 784GB of coherent memory

- Integrated NVIDIA ConnectX-8 SuperNIC

- Closed-loop direct-to-chip liquid cooling for CPU, GPU, ConnectX-8, and memory

- Up to 20 PFLOPS AI performance

- Bundled with NVIDIA AI software stack

- Option to configure an additional PCIe GPU for rendering and graphics acceleration

- 5U desktop tower form factor with optional rack-mounting

- 1600W power supply compatible with standard power outlets

Rack-Scale GB200 NVL4 GPU Accelerated HPC and AI Solution Now Available

Supermicro is also announcing general availability of its ARS-121GL-NB2B-LCC NVL4 rack-scale platform for GPU-accelerated HPC and AI science workloads such as molecular simulation, weather modelling, fluid dynamics, and genomics. Each node unifies four NVIDIA NVLink-connected Blackwell GPUs with two NVIDIA Grace CPUs over NVLink-C2C, and up to 32 nodes per rack can be connected via NVIDIA ConnectX-8 networking at up to 800G per GPU. The solution scales at the system and rack level, depending on workload requirements, and can be liquid cooled by either in-rack or in-row CDUs.

- Four B200 GPUs and two Grace Superchips per node with direct-to-chip liquid cooling

- Four ports of 800G NVIDIA Quantum InfiniBand networking per node, with 800G dedicated to each B200 GPU (alternative NIC options available)

- Up to 128 GPUs in a 48U NVIDIA MGX rack for unmatched data centre rack density

- Power via busbar for seamless scaling
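
The rack-level figures in this list follow from the per-node numbers by simple multiplication. As a rough sanity check, the arithmetic can be sketched as below. This is an illustrative calculation only, not a Supermicro or NVIDIA tool: the function and constant names are hypothetical, and the aggregate bandwidth is a nominal sum of port speeds, not a measured throughput.

```python
# Figures quoted in the article for the GB200 NVL4 rack-scale platform.
NODES_PER_RACK = 32      # "up to 32 nodes per rack"
GPUS_PER_NODE = 4        # "Four B200 GPUs ... per node"
PORTS_PER_NODE = 4       # "Four ports of 800G ... per node"
PORT_SPEED_GBPS = 800    # nominal speed of each port


def rack_totals(nodes=NODES_PER_RACK,
                gpus_per_node=GPUS_PER_NODE,
                ports_per_node=PORTS_PER_NODE,
                port_speed_gbps=PORT_SPEED_GBPS):
    """Derive nominal rack-level totals from per-node figures (hypothetical helper)."""
    node_bandwidth = ports_per_node * port_speed_gbps
    return {
        "gpus_per_rack": nodes * gpus_per_node,
        "node_bandwidth_gbps": node_bandwidth,
        "bandwidth_per_gpu_gbps": node_bandwidth // gpus_per_node,
        "rack_bandwidth_gbps": nodes * node_bandwidth,
    }


if __name__ == "__main__":
    for name, value in rack_totals().items():
        print(f"{name}: {value}")
```

With the quoted values, 32 nodes of four GPUs each give the stated 128 GPUs per rack, and four 800G ports per node work out to 800G per GPU.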

These Supermicro systems are ideal for developing and deploying AI using NVIDIA AI Enterprise software and NVIDIA Nemotron open AI models.