“The autonomous world – a world of machines that anticipate, automate, sense, think, and collaborate – is no longer science fiction,” Hinrichsen began. “It is on the cusp of becoming reality, and it will affect all of us.”

He then went on to give an overview of how AI has evolved, how it is changing the Edge, and what this means for the future of engineering, infrastructure, and safety in the real world.

The data deluge, and why the Cloud is not enough

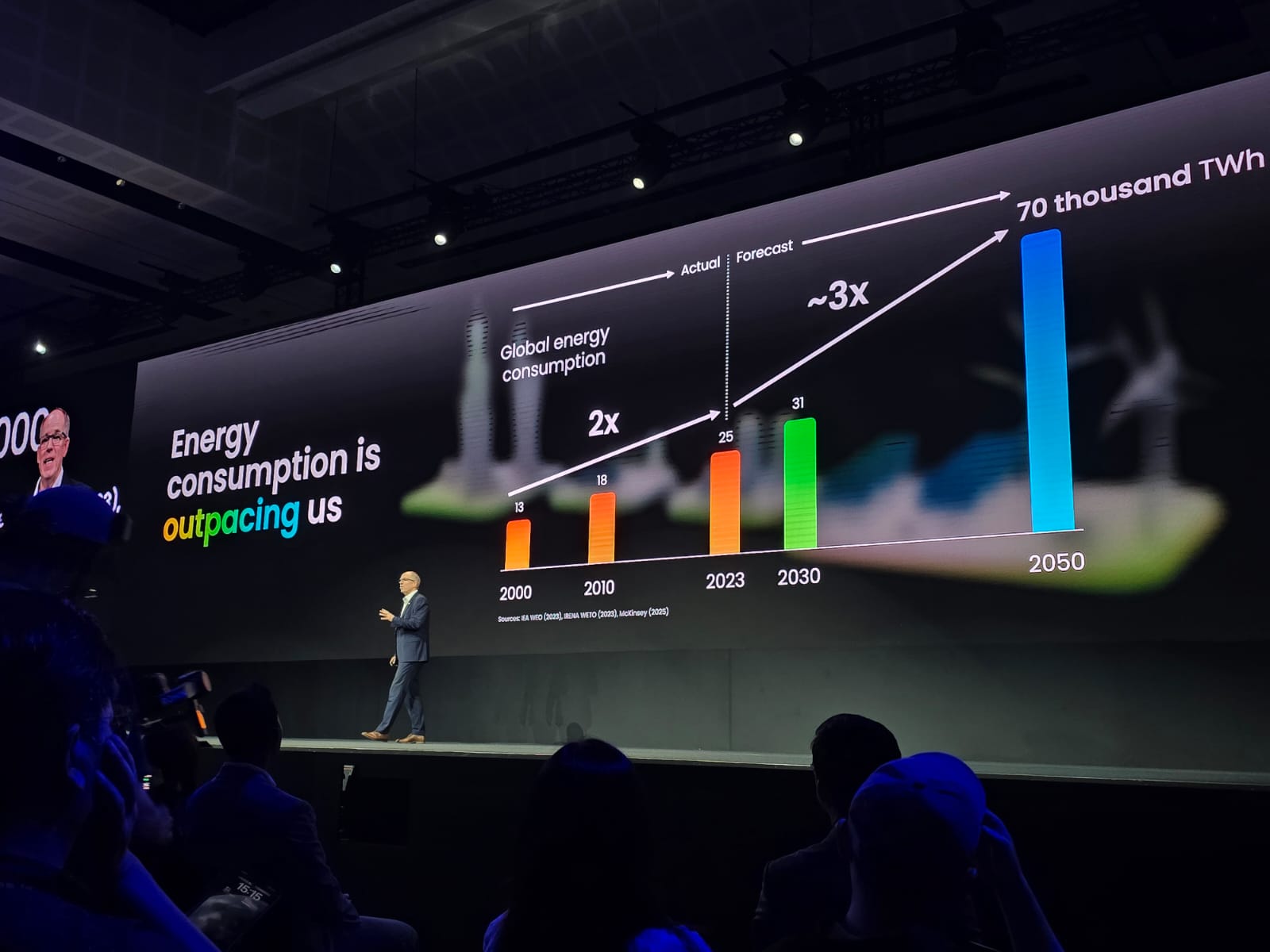

Describing the sheer amount of data amassed through daily interactions with technology, such as connected vehicles, wearables, smart homes, and smart cities, Hinrichsen said that global data generation is expected to reach 180 zettabytes this year (one zettabyte is a trillion gigabytes). “We have created more data in the last three years than in our entire human history,” he said.

However, he also pointed out that most of this data goes unused because current processing systems are inefficient and remote. “Sending it all to the Cloud for real-time decision-making is simply not feasible,” he said, adding that the latency and energy costs make it impractical: “Our physical world is just too vast to be uploaded to the Cloud.”

Instead, Hinrichsen made the case for processing data right where it is generated: “We need intelligent data processing next to where the data is created, and that is the Edge – where AI meets the physical world.”

Why AI belongs at the Edge

Edge AI, according to Hinrichsen, is not just an optimisation strategy – it is essential for real-time, reliable, and secure systems. He described how Edge AI dramatically reduces the need for constant Cloud connectivity, a critical factor in remote or power-limited environments.

“In many use cases, real-time responsiveness is essential,” he said, citing examples like autonomous vehicles navigating traffic, smart watches detecting health anomalies, and smart cities adjusting traffic flows. “The Edge avoids round trips to the Cloud.”

There are environmental benefits, too: “A 1,000-second floating point calculation at the Edge consumes the same energy as sending a single bit to the Cloud.” In other words, a local calculation running for more than 16 minutes uses no more energy than sending a single bit of data to the Cloud.

Hinrichsen also spoke about the importance of security and trust with regard to AI. “Edge AI reduces the cybersecurity risk by processing the data right where it is generated, so the data are distributed into many Edge devices and not consolidated in one particular place in the Cloud,” he said. Consolidating the data in one place, by contrast, would create a very attractive target for hackers.

Vision Zero, smart grids, and real-world impacts

Moving beyond theory, Hinrichsen went on to highlight how Edge AI could address major global challenges. In energy, it could help manage a grid under pressure from increasing electrification. “We can’t just throw more power at the problem,” he said. Instead, AI can help to “smartly balance the load … across city blocks, maybe beyond the city limits, maybe even on state level.”

In safety, Edge AI could play a crucial role in reducing deaths on roads, in workplaces, and in healthcare settings. Speaking about the millions of preventable fatalities that occur every year, he said: “It’s not a lack of data … it’s a lack of intelligence at the point of need.” With Edge AI, in other words, risks can be anticipated and prevented before they cause harm.

The AI evolution from perception to agency

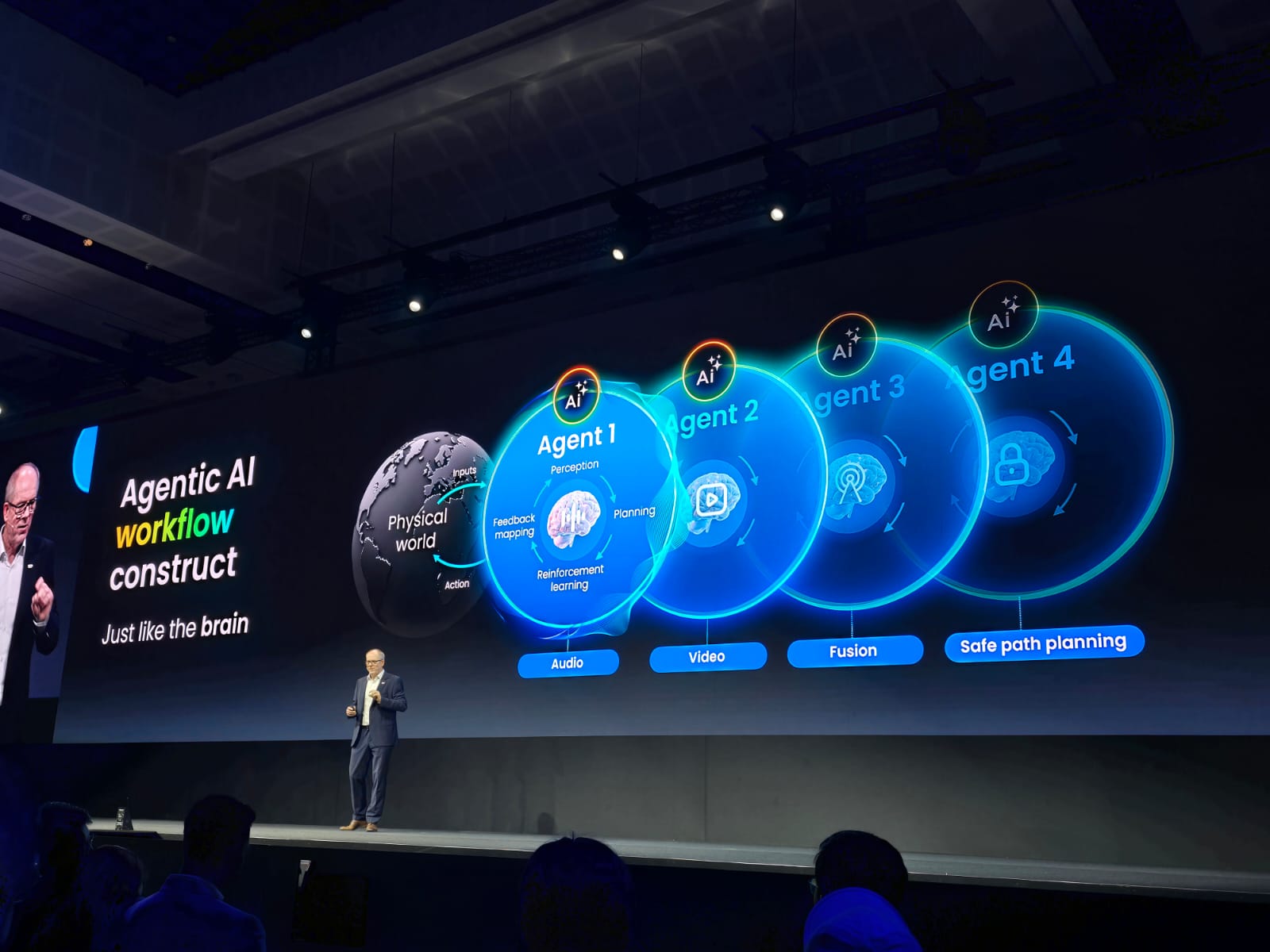

The heart of Hinrichsen’s keynote explored how AI has evolved – and how its trajectory is accelerating. He traced its development through three key phases: perception AI, generative AI, and now agentic AI.

“Perception AI gave us the aware Edge,” he said. This was the stage of object detection, facial recognition, and sensor fusion – initially developed in the Cloud and later ported to smaller devices.

Generative AI brought with it the interactive Edge, capable of natural language understanding and visual interpretation. Generative AI models “became smaller and smaller, more energy efficient, just suitable for the edge,” he said.

But he sees the next phase as something more profound. “Agentic AI ties all the AI divisions of the past together to create an edge that has gone from reactive to proactive,” he said. Agentic systems can work autonomously, learning from experience, defining their own actions, and collaborating with other agents to meet goals.

In one example, Hinrichsen described a factory scenario in which a burst water pipe is detected. AI agents working with cameras and sensors spot the leak. An “orchestrator” agent, akin to a project manager, then triggers coordinated responses such as shutting valves, alerting people, and activating clean-up protocols. The AI plans, acts, and learns from what went right or wrong, much as a human would.
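The orchestrator pattern can be illustrated with a minimal Python sketch. It is not NXP's implementation, and every name in it is hypothetical: the event kinds, the moisture threshold, and the action functions. A perception agent turns a sensor reading into an event. The orchestrator then runs the response actions registered for that kind of event. Here "learning" is reduced to keeping a log of outcomes that a later step could review.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Event:
    kind: str      # what was detected, e.g. "water_leak" (hypothetical label)
    location: str  # where it was detected, e.g. "hall_3" (hypothetical label)


def leak_sensor_agent(location: str, moisture: float, threshold: float = 0.8) -> Optional[Event]:
    """Hypothetical perception agent: reports a leak when moisture exceeds a threshold."""
    if moisture > threshold:
        return Event("water_leak", location)
    return None


# Hypothetical response actions; a real system would drive actuators and messaging.
def shut_valves(e: Event) -> str:
    return f"valves closed in {e.location}"


def alert_people(e: Event) -> str:
    return f"people alerted about {e.kind} in {e.location}"


def start_cleanup(e: Event) -> str:
    return f"clean-up protocol started in {e.location}"


@dataclass
class Orchestrator:
    """Routes each detected event to the response actions registered for its kind."""
    playbooks: dict = field(default_factory=dict)  # event kind -> ordered list of actions
    log: list = field(default_factory=list)        # (event, outcomes) pairs kept for review

    def register(self, kind: str, action: Callable[[Event], str]) -> None:
        self.playbooks.setdefault(kind, []).append(action)

    def handle(self, event: Event) -> list:
        outcomes = [action(event) for action in self.playbooks.get(event.kind, [])]
        # Storing outcomes is where a learning step could plug in; this sketch only records them.
        self.log.append((event, outcomes))
        return outcomes


orchestrator = Orchestrator()
for action in (shut_valves, alert_people, start_cleanup):
    orchestrator.register("water_leak", action)

event = leak_sensor_agent("hall_3", moisture=0.93)
if event is not None:
    for outcome in orchestrator.handle(event):
        print(outcome)
```

This design keeps the response playbook separate from the sensing agents. New responses can therefore be added without touching the detection code.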

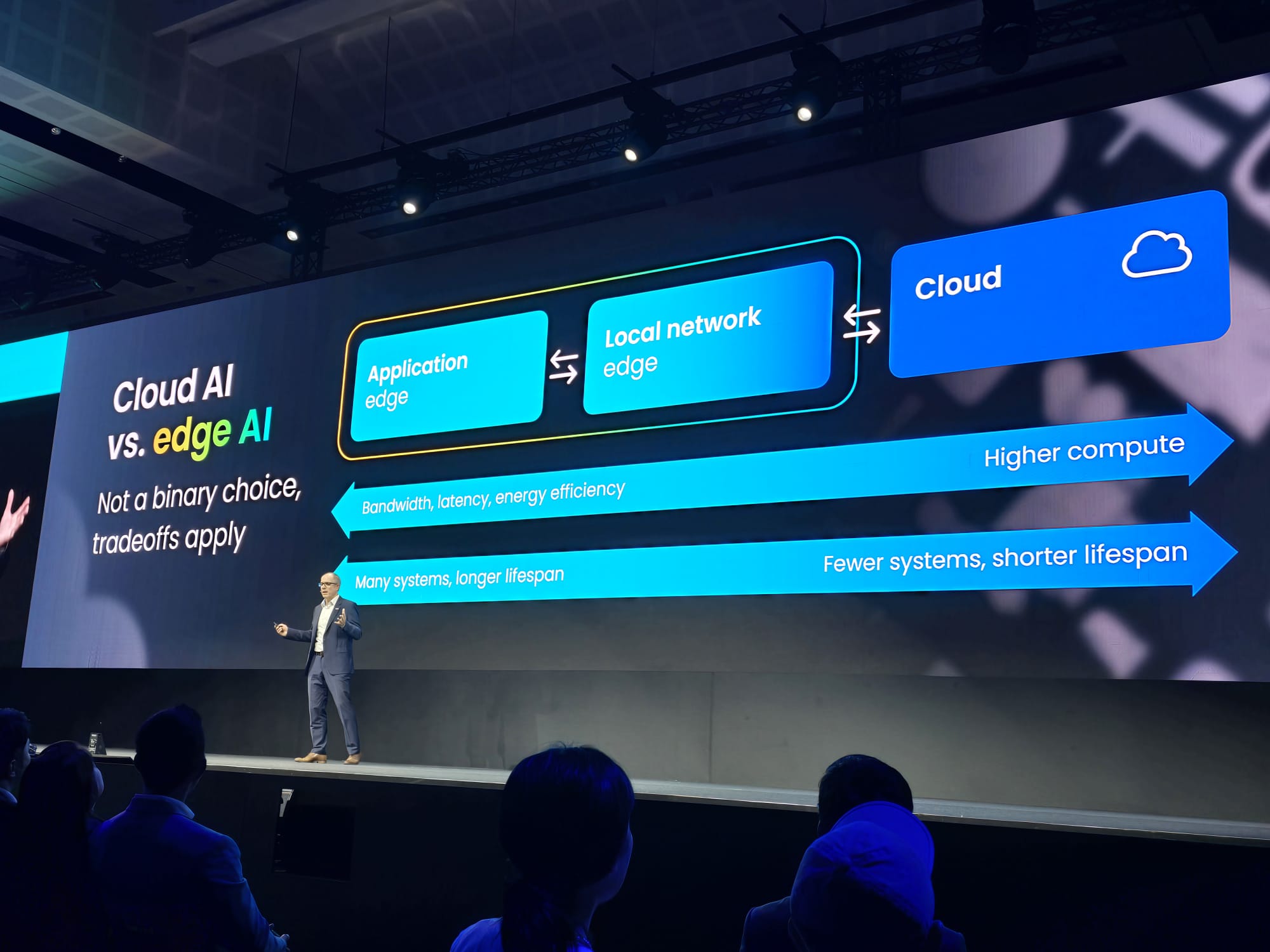

Edge and Cloud: not rivals, but partners

Despite championing Edge AI, Hinrichsen was clear that the Cloud is not going away. Instead, he sees a layered system emerging, with the Cloud, the Edge, and a ‘near Edge’ made up of local AI hubs that act as mini-clouds for more complex tasks.

This coexistence reflects the working reality that different applications will have different requirements around latency, energy use, bandwidth, and lifecycle. “It’s not a binary choice,” he said. “The Cloud and Edge will always coexist.”

Building the autonomous Edge

The final third of Hinrichsen’s address tackled the engineering challenges of delivering agentic AI at scale. First, Edge systems require complete hardware and software integration – not just processing power, but sensing, networking, energy management, and security.

Most AI models developed for the Cloud are too large and too power-hungry for Edge use. “You cannot have water-cooled, dual-fan processors in an IoT device,” he said.

To solve this, he said that NXP provides a toolkit called eIQ, which helps developers right-size models and keep them up to date. “You can bring your model, you can bring your data, and then you can right size and optimise it for the particular cache,” he said.

Functional safety and security remain foundational, particularly as systems become more autonomous. Hinrichsen said NXP draws on its background in automotive and secure hardware (such as passports and payment systems) to build in trust from the silicon up. He also spoke about how NXP is investing in its Edge AI future through acquisitions, including Kinara (for generative AI), Aviva Links (for high-bandwidth data transfer), and TTTech Auto (for safety-critical middleware).

A collaborative future

Hinrichsen concluded his keynote with a call to action: “Enabling the autonomous Edge is bigger than any one company. It requires a collective effort.”

He described a growing ecosystem of OEMs, system integrators, Cloud providers, and developers working to make autonomy scalable and safe.

“In a world that is becoming more connected and more automated, trust will be the cornerstone of everything we do,” he said.