Framing data centres as the new “AI factories,” Huang highlighted NVIDIA’s leadership in full-stack computing and unveiled a series of new products and architectural updates.

AI factories and the rise of accelerated computing

Huang described the global transition underway: “Every country, every region, needs to own more computers. But not just any computer – accelerated computing. And not just accelerated computing – AI factories.” These AI factories, he said, are the next phase of computing infrastructure – transforming data into intelligence at scale.

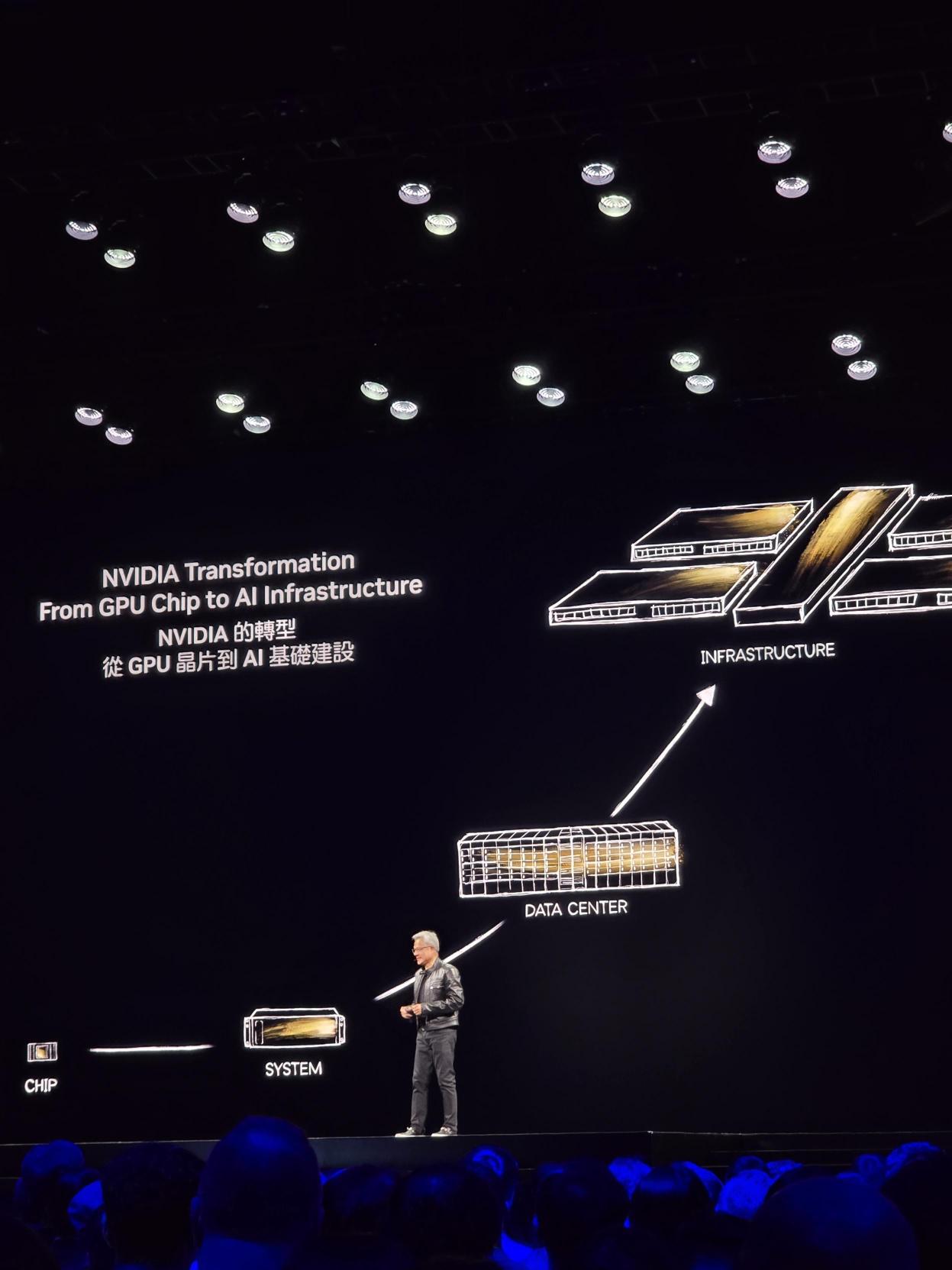

From GPU innovator to AI infrastructure provider

Huang opened his keynote with a reflection on NVIDIA’s 30-year journey, which has been closely intertwined with Taiwan’s electronics ecosystem: “We started out as a chip company with a goal of creating a new computing platform.” That goal took a transformative turn in 2006 with the launch of CUDA – a parallel computing platform that allowed GPUs to be used for general-purpose computation.

Then in 2016, NVIDIA released its first AI-focused computing system, the DGX-1, which Huang said was widely misunderstood at the time: “No one understood what I was talking about and nobody gave me a PO.” The only taker was OpenAI – to which NVIDIA donated the first unit – marking a symbolic start to what would become the deep learning revolution.

AI, Huang explained, doesn’t work like traditional software: “Whereas many applications ran on a few processors in a large data centre … this new type of application requires many processors working together.” That shift gave rise to a fundamentally different data centre design – one that NVIDIA now calls the “AI factory.”

“The data centre is the computer”

Huang described how acquiring Mellanox in 2019 gave NVIDIA the networking expertise it needed to treat the data centre as a single computational entity. “The data centre is a unit of computing. No longer just a PC. No longer just a server,” he said.

The architecture that underpins this shift – east-west data traffic, GPU scaling, and smart networking – now defines the company’s product roadmap.

He emphasised the shift from traditional CPU-based architectures to GPU-driven acceleration as essential for both performance and energy efficiency. Huang cited the exponential cost and power benefits of accelerated computing, noting: “By speeding up your computing, you speed up the time-to-solution. But you also save power, you save cost.”

He likened this moment to the arrival of electricity or the Internet – calling it the rise of an “intelligence infrastructure.” Just as earlier infrastructure was built to generate electricity or manage data, the new infrastructure needs to generate intelligence. “These AI data centres are improperly described,” Huang argued. “They are, in fact, AI factories.”

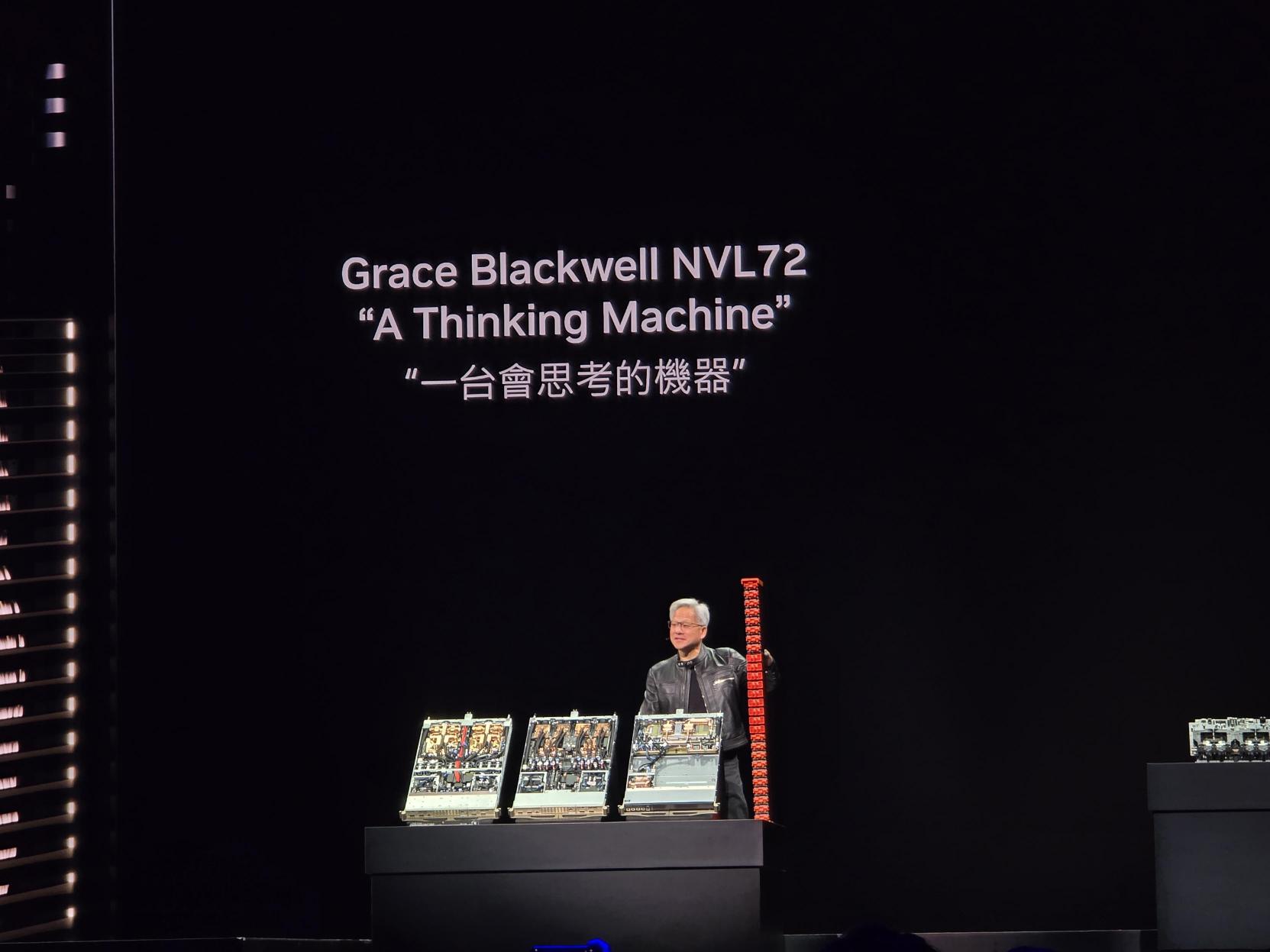

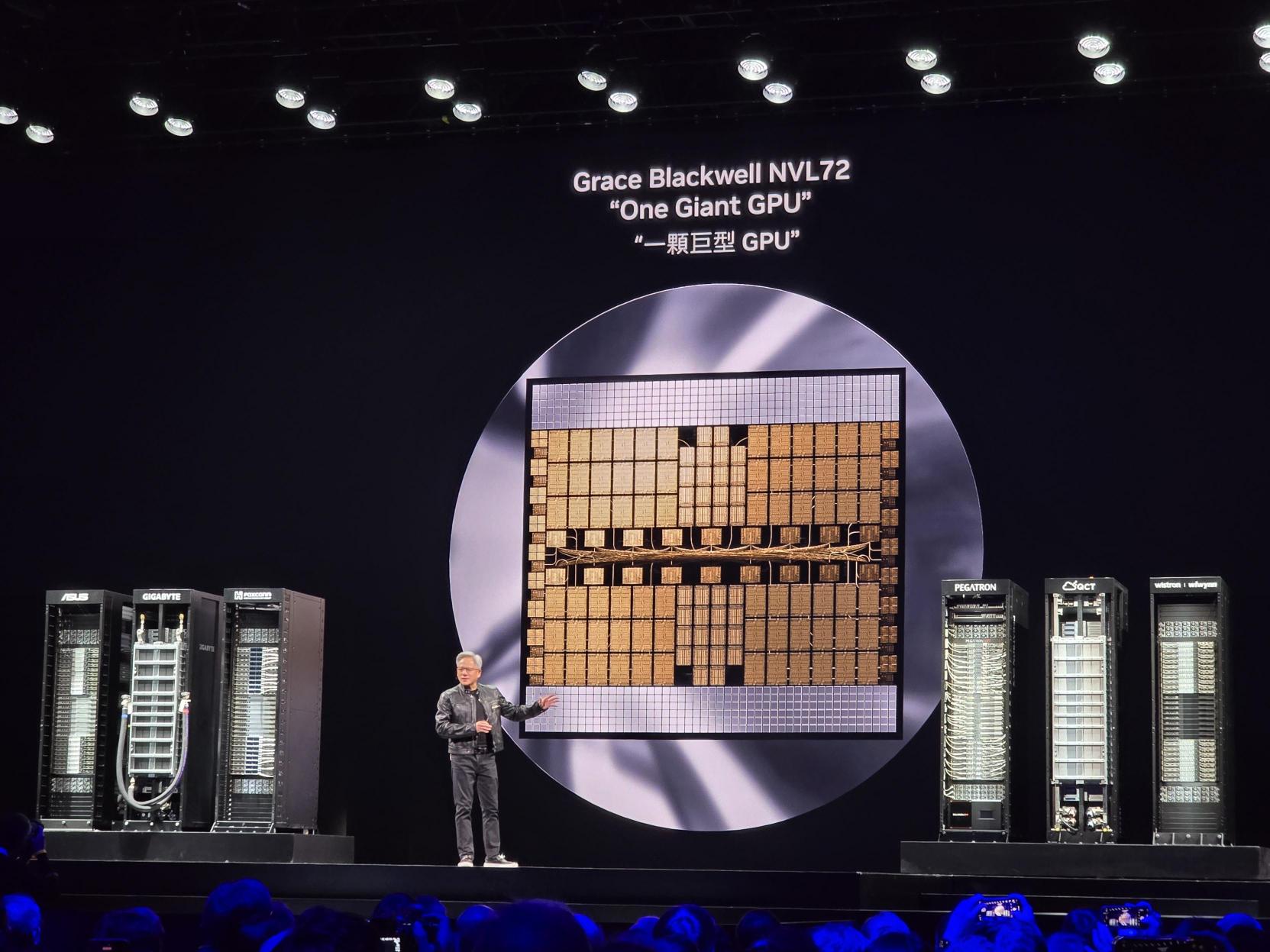

Blackwell platform and GB200 Grace Blackwell Superchip

The keynote featured the GB200 NVL72, a liquid-cooled, rack-scale system based on NVIDIA’s new Blackwell architecture and purpose-built for training trillion-parameter models. NVIDIA says the system delivers up to 30X faster performance than its predecessor, while being up to 25X more power efficient.

Each GB200 NVL72 includes:

- 72 Blackwell GPUs across 36 Grace Blackwell Superchips

- 600Tb/s NVLink switch fabric

- 720GB of high-bandwidth memory

The modular system is intended as a building block for AI factories, designed to scale efficiently and integrate with existing data centre infrastructure.

Huang described the system as a “giant GPU” designed to operate as a single computing entity: “This one node replaces that entire supercomputer. A 4,000 times increase in performance in six years – that is extreme Moore’s Law.”

Next-generation Rubin platform roadmap

Huang also previewed Rubin, NVIDIA’s next GPU platform scheduled for 2026, along with its successor, Rubin Ultra, in 2027. The roadmap includes new CPUs (Vera and Vera Ultra) and updated networking (CX9 SuperNIC and X1600 InfiniBand). He positioned Rubin as the foundation for future-scale training and inference workloads, enabling faster deployment of large models and AI services.

Explaining why NVIDIA reveals its roadmap in such detail, he said: “No company in history – surely no technology company – has ever revealed a roadmap five years out. But we realised we’re no longer just a technology company. We are an essential infrastructure company.”

Software and Cloud platform innovations

NVIDIA announced major updates to its software platforms:

- NIM (NVIDIA Inference Microservices): a new framework to simplify deployment of AI models as scalable microservices, pre-optimised for NVIDIA’s AI stack

- Expanded support for enterprise generative AI workloads through NVIDIA AI Enterprise

- Partnerships with major Cloud service providers to deploy Blackwell and NIM frameworks globally

CUDA remains central to the company’s strategy, serving as the foundation for its growing ecosystem of domain-specific libraries. “Libraries are at the core of everything we do. It’s what started it all,” said Huang. These include cuLitho (for computational lithography), cuQuantum (for quantum-classical simulation), cuOpt (for optimisation tasks), and domain-specific stacks for genomics, signal processing, weather forecasting, and robotics.

AI for robotics and digital humans

The keynote also explored how NVIDIA’s platforms are shaping embodied AI:

- Project GR00T (Generalist Robot 00 Technology): a foundation model for humanoid robotics, trained using NVIDIA’s Isaac Lab and deployed through Jetson Thor and Isaac ROS 3.0

- Updates to NVIDIA’s Omniverse platform for digital twins and industrial simulation, including generative tools for 3D asset creation and real-time rendering using RTX technology

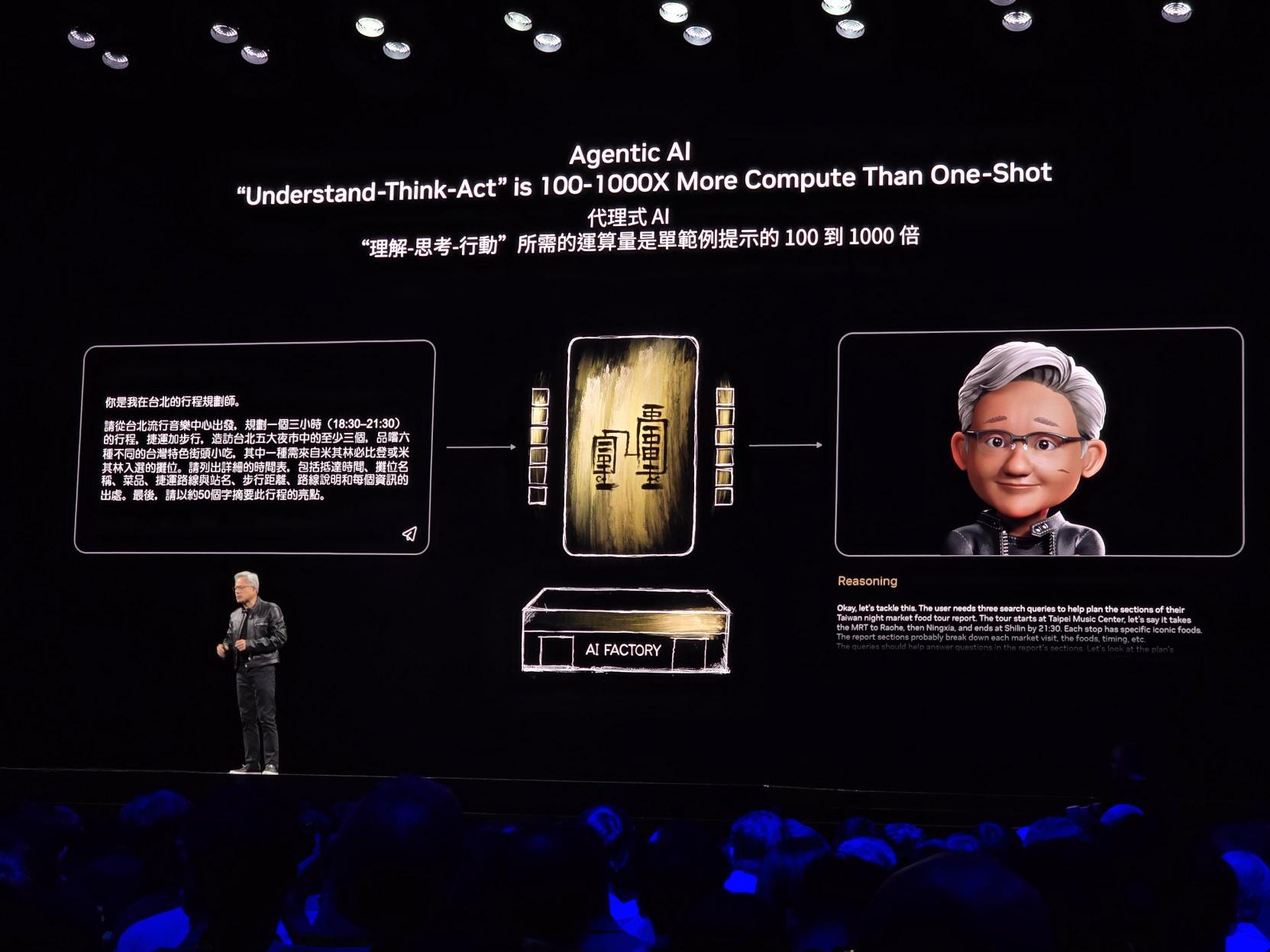

He described how agentic AI – digital agents that understand, reason, and act – is becoming a tool for enterprise automation. “Everything we do today in IT can now have a digital counterpart,” he said. “A digital customer service rep. A digital supply chain manager. A digital chip designer.”

AI for the enterprise: DGX Spark, RTX Pro, and beyond

To bring AI into traditional enterprise environments, NVIDIA introduced:

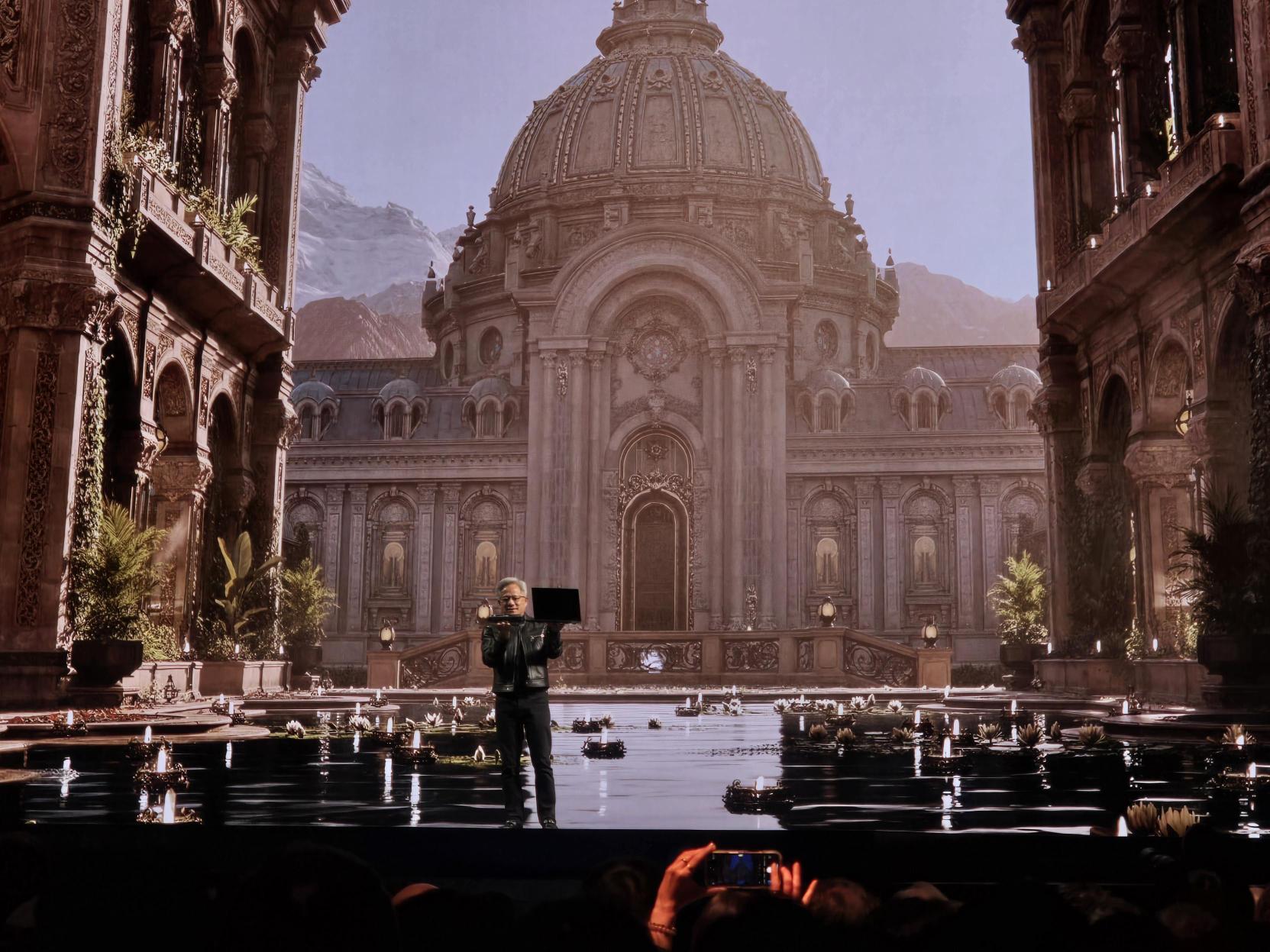

- DGX Spark: a compact, personal AI development workstation for researchers, offering 1 PFLOPS performance

- RTX Pro Server: an enterprise-grade system supporting both x86 workloads and AI agents, with support for Kubernetes, hypervisors, and virtual desktops

“These systems don’t need to run Windows. They don’t need to run legacy IT workloads. They’re AI-native,” said Huang. But they are also flexible enough to integrate into existing enterprise environments.

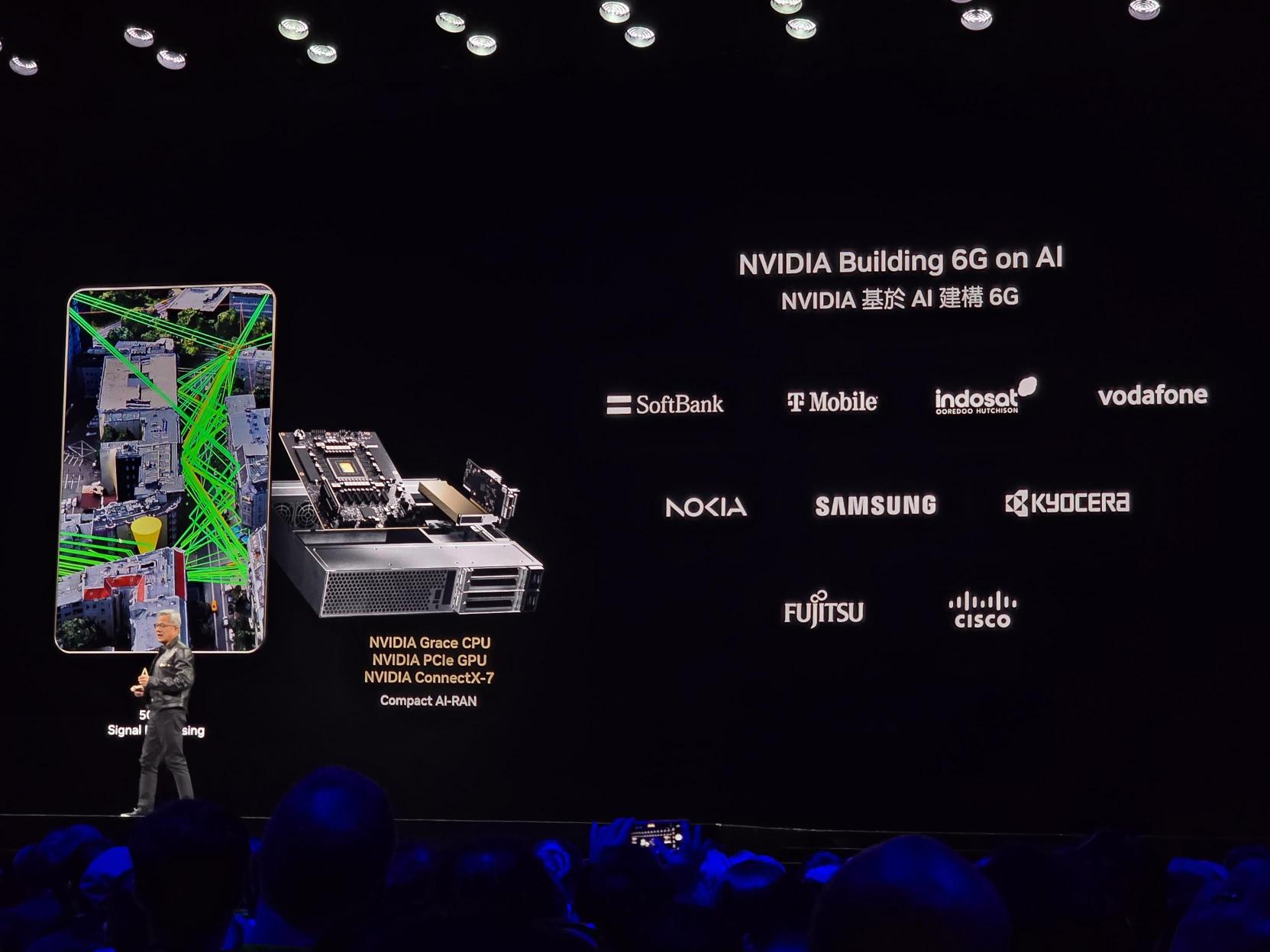

Global partnerships and ecosystem growth

Huang highlighted collaboration with Taiwanese manufacturers, praising their role in bringing NVIDIA’s vision to life: “Taiwan builds the world’s computers. And now, Taiwan builds AI factories.”

He also announced a new initiative: a national-scale AI supercomputer for Taiwan, co-developed with TSMC, Foxconn, and the Taiwanese government. “Every student, researcher, startup – everyone – in Taiwan will benefit,” he said.

To further expand infrastructure flexibility, Huang introduced NVLink Fusion, a system architecture that allows semi-custom CPUs and accelerators to connect to NVIDIA GPUs through NVLink. “Whether you want to build it all with NVIDIA, or mix and match – it’s now possible,” he said.

The age of intelligent infrastructure

Huang concluded with a message of scale and opportunity. “At the beginning, I said GeForce brought AI to the world. Now AI has come back to transform GeForce – and everything else.”

He announced NVIDIA’s plans to build a new flagship office in Taiwan – NVIDIA Constellation – as a physical anchor for its growing footprint in Asia. “It’s time we build a home as big as our ambitions.”

Looking ahead, he stressed the historic scale of the opportunity: “This time, we’re not just creating the next generation of IT. We are creating a whole new industry. A trillion-dollar industry of AI factories, digital agents, and robotics.”

COMPUTEX is currently taking place in Taipei, Taiwan, from 20 to 23 May under the theme ‘AI Next’. Cristiano Amon, President and CEO of Qualcomm, also delivered a keynote speech at COMPUTEX 2025, discussing how AI is expected to shape the future of computing and drawing on the adoption of the company’s Snapdragon systems-on-chip (SoCs) and the advent of AI PCs.
