And until that day comes, we can expect to interact more and more with machines engaged in learning, and for those interactions to go through an awkward immaturity as we adapt to a world where machines exercise their increasing agency.

Counter-intuitively, the automotive industry, with its safety- and security-sensitive context, is the most active and visible proving ground we have for how society will interact with these technologies – technologies that will be developed and implemented by corporations and likely regulated by government agencies. A wrinkle worth calling attention to is that government agencies responsible for certifying Artificial Intelligence implementations or Machine Learning capabilities are unlikely, at least initially, to know how to do so.

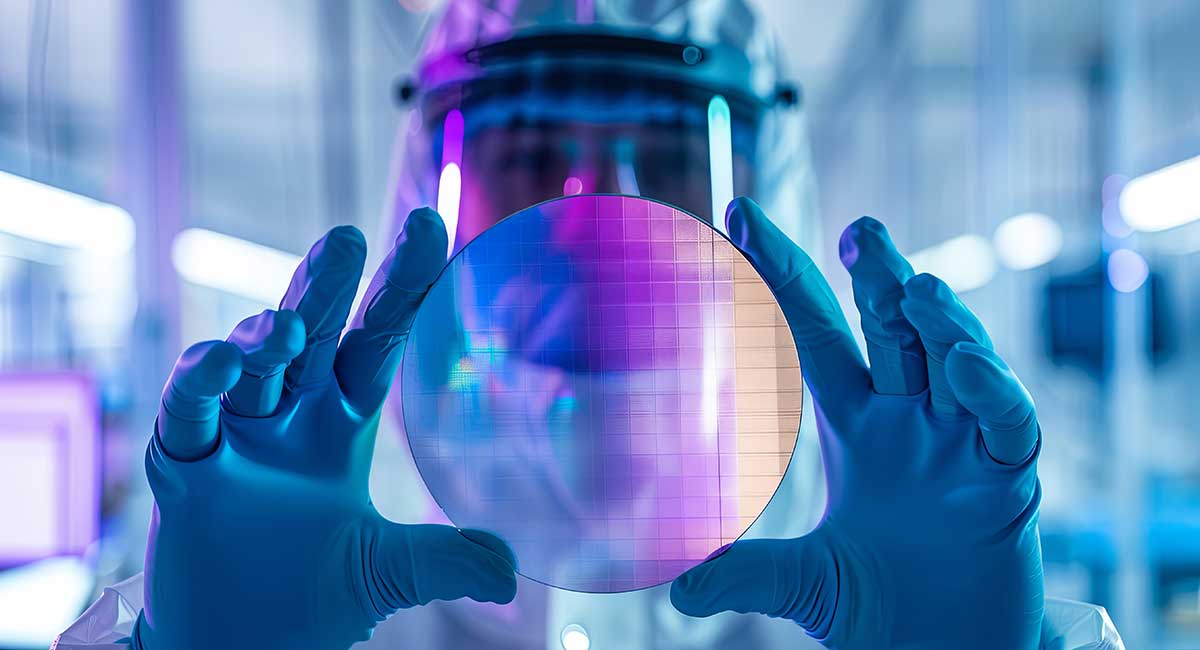

Last month there was a demo of a machine learning UI developed in labs. With a fair degree of accuracy and in almost real time, it identified dangerous situations and objects. This system has the potential to reduce false negative errors (dangerous situations or objects that don’t get identified by humans). By reducing such human errors, it has the potential to save lives.

In any deployment scenario where such a technology would be useful, life-and-death actions are likely to be taken in response to the probability that the machine assigns. This raises two questions: what probability would be considered high enough to warrant life-and-death action, and who would be responsible for that action?
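To make the threshold question concrete, here is a minimal sketch in Python of how a system might map the probability a model assigns to a response. Everything in it is an assumption made for illustration: the `Detection` type, the `triage` function, and the cut-off values 0.9 and 0.5 are hypothetical and do not describe the demo system or any real product.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str
    probability: float  # model's estimate that the object is dangerous (0.0 to 1.0)


def triage(detection: Detection,
           act_threshold: float = 0.9,
           review_threshold: float = 0.5) -> str:
    """Map a probability to one of three hypothetical responses.

    The thresholds are placeholders: choosing them is exactly the
    policy question of how sure the machine must be before anyone acts.
    """
    if detection.probability >= act_threshold:
        return "act"           # confident enough to act immediately
    if detection.probability >= review_threshold:
        return "human_review"  # uncertain: hand the decision to a person
    return "ignore"            # below both thresholds: risk of a false negative


if __name__ == "__main__":
    samples = [
        Detection("object A", 0.97),
        Detection("object B", 0.62),
        Detection("object C", 0.12),
    ]
    for d in samples:
        print(d.label, triage(d))
```

Lowering `act_threshold` reduces missed dangers but triggers more drastic actions on false alarms; raising it does the reverse. The code cannot settle where the line belongs or who answers for the outcome, only make the trade-off explicit.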

In the case of autonomous vehicles, the hypothetical scenario that determined who was responsible for the actions of an autonomous machine was a fender bender in which the Google car was responsible for the accident. The responsible party was Google, the corporation. We now understand that fender benders should be the least of our concerns.

There’s been a news cycle’s worth of think pieces generated on the parameters that will govern an autonomous vehicle’s decision-making in life-and-death situations. If you’re convinced you have a handle on such scenarios, you are encouraged to head over to MIT’s Moral Machine.

Having a human in the loop compounds complexity. When humans interact with machines that have some level of AI or that may change their behaviour based on machine learning, they will need to know what to expect and they will need to understand a given system’s limitations and tendencies.

This is not something we will experience one day in a far-off future.

McCabe explained: “There is a point during my commute at which my car will sometimes take control of the wheel. It does so at a complex moment: after I’ve passed through a toll gate, with traffic sometimes merging from the right, and with a barrier on the left-hand side of my lane as it narrows and leads on to a bridge. That my car only sometimes intervenes is an example of the complexity I’m describing.”

“I can’t be sure that it will, and I can’t be sure that it won’t.”

Commercial software companies that develop ‘off the shelf’ machine intelligence, the capacity for that intelligence to evolve through machine learning, or environments in which that intelligence can execute control functions will confront new requirements for reliability, with profound implications for liability. These requirements will increase as intelligent machines become more prevalent in society and as businesses come to depend increasingly on systems distinguished by AI and the capacity to learn.

As always, safety and security will need to be top of mind as these systems are deployed into the field of operation. The most recent reminder is the WannaCry cyber-attack, which struck diverse connected embedded systems, including traffic cameras, ticket kiosks, and ATMs.

Artificial intelligence and machine learning are uncovering a whole new world that we must be prepared for. Wind River, for one, is very curious to see how it continues to unfold, and enjoys having a front-row seat while being so involved in advancing these technologies.

Courtesy of Wind River.