In the last decade, data has become the driving force behind almost all technology and behind some of the most lucrative industries in the world. Incorporating artificial intelligence into data acquisition, in the form of smart vision, is helping this field grow even faster.

Evan Leal, Director of Product Marketing, Boards & Kits at Xilinx, commented on the incorporation of AI into smart vision: “Embracing AI in vision systems has really led to a boom in traditional vision systems, meaning they have become more complex and developers are spending more time on those traditional systems, as it’s driven new use cases altogether.”

To address these new use cases, as well as the expanded functionality that AI and smart vision have brought to traditional ones, companies have been racing to deliver what Leal calls ‘point solutions’.

As a result, the vision market is becoming both diverse and fragmented. Vision can now solve a wide range of problems and enable many technologies, such as sensor fusion, evolving AI, image recognition, AR, security, facial recognition, and medical analysis.

Leal explained: “It’s left the vision market pretty fragmented with a lot of custom and often not very scalable algorithms and approaches, each of which might do really well for its own point use case, but isn’t very scalable.”

Adding to this complexity and division in the vision space is a growing number of AI-specific challenges at the edge. AI scientists continue to develop high-accuracy models, but these developers often work in a space of pure science, without worrying about realistic hardware constraints such as thermal limits and power budgets. Eventually, however, these models need to be turned into a deployable end product.

“This is where those developers tend to find themselves in a bit of a pickle,” Leal continued. “They have to work out how they can build an edge-based product that can operate in a realistic size and in power-constrained environments, that can also adapt to the latest models in the face of the changing networks and requirements. And it turns out developers can’t handle all those market needs very well today.”

So what can be done in the smart vision space to help developers take their vision AI models and turn them into deployable end products? The market needs to focus on three main things to tackle this problem.

1) The vision market needs prebuilt hardware and software platforms to enable faster time to deployment.

Currently there is a shortage of these prebuilt platforms, so developers build a new one every time they create a new end product. This is not only time-consuming and expensive but also part of the reason the technology lacks cohesion, which has helped make it such a confusing space.

2) Products need to be flexible in order to create innovative and differentiated vision-based solutions.

Making products flexible will reduce fragmentation in the industry. If an existing, prebuilt platform can be adapted to work with other models as well as the one it was originally built for, the need to keep building and rebuilding these platforms should diminish, allowing smart vision to evolve even faster than it is today.

3) Developers need a way to tap into the latest AI models, while also being able to manage all the constraints at the edge, such as power and cost.

Reaching the dizzying heights of new vision AI science and then squeezing that science down onto a system-on-module PCB is a huge, time-consuming challenge, and one that needs to be tackled as soon as possible to meet the growing demand for smart vision products.

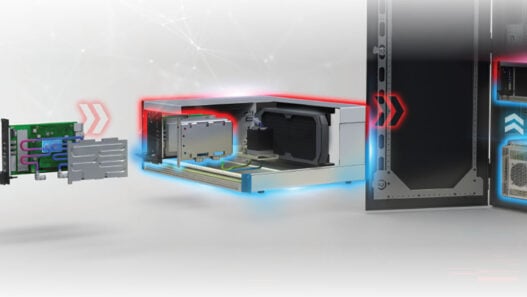

As one company trying to tackle this problem, Xilinx has introduced the KRIA adaptive system-on-module (SOM) for edge applications. Leal expanded on what KRIA can do: “We wanted to look at how Xilinx could empower developers to create their innovative ideas, and specifically looked for a solution that would address the problems in vision AI.

“KRIA SOMs harness the traditional advantages of Xilinx, but delivered as a system-on-module form factor, and these are production deployable SOMs. They’re adaptive, and they enable developers and edge-based applications to speed through their design phase.”
