AI, crucial to the concept of autonomous driving, is advancing at an astonishing rate. Yet the vehicle’s ‘eyes’, the cameras that feed the AI the information it bases its decisions on, are just as important as its ‘brain’.

Enter GMSL2 cameras and the GMSL (Gigabit Multimedia Serial Link) protocol. “The GMSL protocol ensures robust and reliable data transmission between these cameras and the processing unit,” Maharajan Veerabahu, Co-founder and VP of e-con Systems, tells Electronic Specifier. Through a collaboration with Minus Zero, e-con Systems showcased its expertise with the Zpod, India’s first fully autonomous vehicle and one scalable to Level 5 autonomy, by equipping it with GMSL2 HDR cameras for the NVIDIA Jetson AGX Orin platform, connected over the industry-standard GMSL protocol. The integration reduced processing time, improving the vehicle’s ability to navigate precisely through ever-changing road environments.

GMSL2 cameras and GMSL protocol

The GMSL2 cameras and GMSL protocol achieved this for the Zpod by improving how vital information travels from sensor to processor. Historically, Ethernet cameras were the go-to choice for automotive applications, but they fell short in two areas: they typically compress video before transmission, and they struggle to maintain a consistent frame rate, especially when multiple cameras are involved.

The GMSL protocol addresses these shortcomings with higher bandwidth and uncompressed data transmission, so no detail is lost before the image reaches the processing stage. It uses asymmetric, full-duplex SerDes (serializer/deserializer) technology: data travels at a high rate in the downlink (forward) direction from camera to processor, while a lower-rate stream flows simultaneously in the uplink (reverse) direction. Power, bidirectional control data, Ethernet, bidirectional audio and multiple streams of unidirectional video all share a single coaxial or shielded twisted pair cable. This architecture lets GMSL dedicate bandwidth to each camera, eliminating concerns about frame rate drops, and lets the cameras operate synchronously to provide a 360° surround view.
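Why dedicated, asymmetric links keep the frame rate steady can be sketched with a simple capacity model. This is an illustration under stated assumptions, not e-con Systems’ or Analog Devices’ design: the 6 Gbit/s forward and 187.5 Mbit/s reverse rates are commonly quoted GMSL2 figures used here as assumptions, the 1 Gbit/s shared link is a hypothetical stand-in for cameras that share one network connection, and the names `AsymmetricLink`, `max_fps_dedicated` and `max_fps_shared` are invented for this example. Protocol overhead and deserializer aggregation are ignored.

```python
from dataclasses import dataclass

# Assumed figures for illustration only (not taken from the article).
GMSL2_FORWARD_BPS = 6e9      # assumed forward (camera -> processor) rate
GMSL2_REVERSE_BPS = 187.5e6  # assumed reverse (processor -> camera) rate
SHARED_LINK_BPS = 1e9        # hypothetical single link shared by all cameras


@dataclass
class AsymmetricLink:
    """Hypothetical model of one point-to-point serial link with a fast
    forward channel and a slow reverse channel that run at the same time."""
    forward_bps: float
    reverse_bps: float

    def carries(self, video_bps: float, control_bps: float) -> bool:
        # Video uses only the forward channel and control only the reverse one,
        # so each load is checked against its own channel's capacity.
        return video_bps <= self.forward_bps and control_bps <= self.reverse_bps


def frame_bits(width: int, height: int, bits_per_pixel: int) -> int:
    """Size of one uncompressed frame in bits."""
    return width * height * bits_per_pixel


def max_fps_dedicated(link: AsymmetricLink, bits_per_frame: int) -> float:
    # Each camera owns its link, so the camera count does not appear here.
    return link.forward_bps / bits_per_frame


def max_fps_shared(link_bps: float, bits_per_frame: int, n_cameras: int) -> float:
    # All cameras split one link equally, so each extra camera lowers the ceiling.
    return link_bps / (bits_per_frame * n_cameras)


if __name__ == "__main__":
    link = AsymmetricLink(GMSL2_FORWARD_BPS, GMSL2_REVERSE_BPS)
    bits = frame_bits(1920, 1080, 16)  # assumes 16 bit/pixel uncompressed YUV
    for n in (1, 4, 8):
        print(f"{n} cameras: dedicated {max_fps_dedicated(link, bits):.1f} fps each, "
              f"shared {max_fps_shared(SHARED_LINK_BPS, bits, n):.1f} fps each")
    print("30 fps video + 1 Mbit/s control on one link:", link.carries(bits * 30, 1e6))
```

In this model the dedicated-link ceiling is the same whether one camera or eight are fitted, while the shared-link ceiling falls in proportion to the camera count, which is the frame-rate concern the paragraph above describes.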

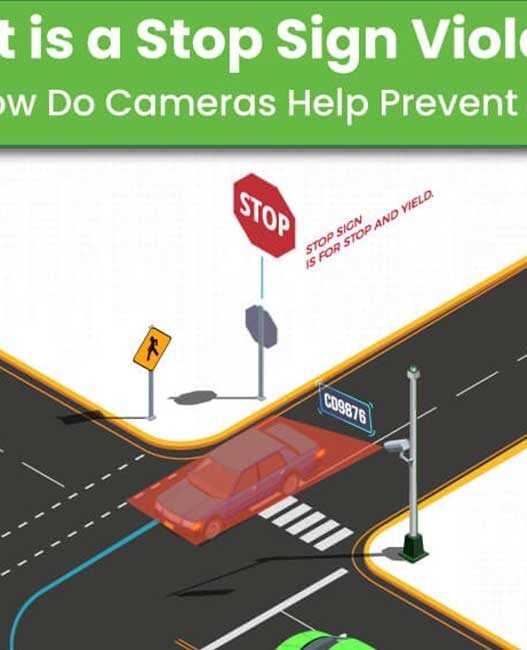

The GMSL2 camera built for the protocol makes use of this higher bandwidth, streaming 1080p video at 30fps in uncompressed YUV format. This preserves crucial scene detail with low latency, even over cable runs of up to 15 meters. “This allows the AI algorithm to more effectively detect objects, pedestrians, traffic signs/signals, etc. for autonomous navigation,” says Veerabahu. From bright sunlight to shadowed areas, the GMSL2 camera provides clear images that the AI algorithms can act on.
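As a rough check of why uncompressed 1080p30 calls for a multi-gigabit link, the raw data rate per camera can be worked out directly. This assumes 8-bit YUV 4:2:2, i.e. 16 bits per pixel on average (the article does not state the subsampling), and ignores blanking and protocol overhead:

```latex
R = W \times H \times b \times f
  = 1920 \times 1080 \times 16\ \text{bit} \times 30\ \text{s}^{-1}
  = 995\,328\,000\ \text{bit/s} \approx 1\ \text{Gbit/s}
```

That is roughly 1 Gbit/s for every camera, before any overhead is added.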

Together, the enhanced feed and higher bandwidth let more, and better, information be processed faster than before, giving the software a better chance of getting its decisions right. “This low latency is critical for time-sensitive applications in autonomous navigation, ensuring minimal delay in the vehicle’s receipt and processing of information,” explains Veerabahu. The data is then fed into e-con Systems’ Image Signal Processing (ISP) software, which applies Auto Exposure, Auto White Balance and HDR processing to adapt to diverse lighting conditions, tuning the images to the Zpod’s specific system requirements and environmental conditions. Paired with the FAKRA connectors the camera systems support, the connection is not only improved but also rugged enough to withstand vehicle vibration.
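To give a feel for what Auto White Balance and Auto Exposure stages do, here is a minimal sketch using two textbook techniques: gray-world white balance and mean-luma exposure control. These are stand-ins chosen for illustration, not e-con Systems’ ISP algorithms. The function names, the target luma of 118 and the 2x per-update step limit are hypothetical tuning values; the luma weights are the Rec. 601 coefficients.

```python
from typing import List, Sequence, Tuple

Pixel = Tuple[float, float, float]  # (R, G, B) on an 8-bit 0..255 scale


def gray_world_gains(pixels: Sequence[Pixel]) -> Tuple[float, float, float]:
    """Gray-world AWB (textbook stand-in): scale R and B so their averages
    match the average of G, assuming the scene averages to neutral grey."""
    n = len(pixels)
    mean_r = sum(p[0] for p in pixels) / n
    mean_g = sum(p[1] for p in pixels) / n
    mean_b = sum(p[2] for p in pixels) / n
    eps = 1e-6  # avoid division by zero on an all-black channel
    return (mean_g / max(mean_r, eps), 1.0, mean_g / max(mean_b, eps))


def apply_gains(pixels: Sequence[Pixel], gains: Tuple[float, float, float]) -> List[Pixel]:
    """Multiply each channel by its gain and clip to the 8-bit range."""
    return [tuple(min(255.0, c * g) for c, g in zip(p, gains)) for p in pixels]


def next_exposure(exposure_us: float, pixels: Sequence[Pixel],
                  target_luma: float = 118.0, max_step: float = 2.0) -> float:
    """Mean-luma AE (textbook stand-in): scale the exposure time toward a
    target brightness, limiting each update to a factor of max_step up or
    down so the image does not oscillate between frames."""
    luma = sum(0.299 * r + 0.587 * g + 0.114 * b for r, g, b in pixels) / len(pixels)
    ratio = target_luma / max(luma, 1e-6)
    ratio = max(1.0 / max_step, min(max_step, ratio))
    return exposure_us * ratio


if __name__ == "__main__":
    frame = [(120.0, 80.0, 40.0), (100.0, 60.0, 30.0)]  # warm-tinted sample pixels
    gains = gray_world_gains(frame)
    balanced = apply_gains(frame, gains)
    print("gains:", gains)
    print("next exposure (us):", next_exposure(1000.0, balanced))
```

A production ISP runs these loops on sensor statistics every frame and combines them with HDR merging; the sketch only shows the feedback idea behind each stage.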

Yet it’s this software tuning that can prove a major challenge. Teaching the system to find the optimal dynamic range when a scene contains two or more elements of vastly different brightness, particularly where visibility is paramount, such as when facing the headlamps of oncoming traffic, remains difficult even for the highest-spec sensors.
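Dynamic range is conventionally expressed in decibels as the ratio between the brightest and darkest levels that can be captured in a single scene. The ratio used below is purely illustrative, not a measured headlamp-to-shadow figure:

```latex
\mathrm{DR}_{\mathrm{dB}} = 20 \log_{10}\!\left(\frac{L_{\max}}{L_{\min}}\right),
\qquad \frac{L_{\max}}{L_{\min}} = 10^{5} \;\Rightarrow\; \mathrm{DR}_{\mathrm{dB}} = 20 \times 5 = 100\ \text{dB}
```

When a scene’s ratio exceeds what the sensor, or its HDR mode, can capture in one frame, the ISP has to decide which end of the range to clip, which is exactly the tuning problem described here.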

What it means for autonomous vehicles

Although the Zpod differs from other autonomous vehicles in shape, dimensions and even speed capability, its success has broader implications for the future of autonomous vehicle technology and beyond: “The growing landscape of autonomous applications, such as delivery robots and agricultural vehicles, shows the potential for broader usage of GMSL cameras and ISP software, indicating a promising future for these technologies in various sectors,” concludes Veerabahu.

The integration of GMSL2 cameras and the GMSL protocol can not only enhance the safety and efficiency of these vehicles but also open new avenues for innovation across industries. With safety testing and functional safety in camera systems under increasing scrutiny, as Cruise’s incidents in California have shown, developments such as post-installation auto-calibration and image sensors that see better in challenging conditions are coming to be seen as just as vital to autonomous vehicles as the processing of the images themselves.