Some advanced driver assistance systems (ADAS), such as automated parking and traffic-jam assist, make journeys more comfortable, while others, such as forward collision warning (FCW), advanced emergency braking (AEB) and advanced emergency steering (AES), can help avert road-traffic accidents.

Sensor fusion is widely acknowledged within the automotive sector as an enabler of ADAS. One driver of this is the pace of innovation in vehicle-based sensing technologies such as radar, lidar, and cameras. These systems, and the huge volumes of data they produce, must be brought together to provide a single source of truth.

Arguably, the term ‘sensor fusion’ is an oversimplification. Building a complete system that communicates with the driver and interacts with the vehicle involves more than sensors and systems talking to each other. It is more helpful to view the process as data aggregation through processing and distribution. Ultimately, ADAS requires all the signals captured by the vehicle’s sensors to be pre-processed, shared with a single processing architecture, and aggregated to create actionable outputs and commands.
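The pre-process, share and aggregate flow described above can be sketched in a few lines. This is a minimal, hypothetical illustration, not an AMD tool flow: it assumes every sensor stamps its samples against one shared vehicle clock, that pre-processing is a simple linear calibration, and that "aggregation" means building one time-aligned frame per reference timestamp. The names `Sample`, `preprocess`, `nearest` and `aggregate`, and all values, are illustrative.

```python
from bisect import bisect_left
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Sample:
    t: float      # capture timestamp in seconds (assumes one shared vehicle clock)
    value: float  # measurement value, raw or calibrated


def preprocess(raw: List[Sample], scale: float, offset: float) -> List[Sample]:
    """Hypothetical per-sensor pre-processing: apply a linear calibration
    (value * scale + offset) and sort the samples by timestamp."""
    calibrated = [Sample(s.t, s.value * scale + offset) for s in raw]
    return sorted(calibrated, key=lambda s: s.t)


def nearest(samples: List[Sample], t: float, tol: float) -> Optional[Sample]:
    """Return the sample closest in time to t, or None if none lies within tol seconds.
    Expects samples sorted by timestamp."""
    times = [s.t for s in samples]
    i = bisect_left(times, t)
    # The closest sample is either the one just before t or the one at/after t.
    candidates = [samples[j] for j in (i - 1, i) if 0 <= j < len(samples)]
    if not candidates:
        return None
    best = min(candidates, key=lambda s: abs(s.t - t))
    return best if abs(best.t - t) <= tol else None


def aggregate(streams: Dict[str, List[Sample]], t: float, tol: float) -> Dict[str, Optional[float]]:
    """Build one time-aligned frame with an entry per sensor at reference time t;
    None marks a sensor with no sample close enough in time."""
    frame: Dict[str, Optional[float]] = {}
    for name, samples in streams.items():
        s = nearest(samples, t, tol)
        frame[name] = s.value if s is not None else None
    return frame


# Usage with made-up values: range to one object as seen by three sensors.
radar = preprocess([Sample(0.00, 20.0), Sample(0.05, 19.5)], scale=1.0, offset=0.0)
camera = preprocess([Sample(0.033, 1980.0)], scale=0.01, offset=0.0)  # centimetres -> metres
lidar = preprocess([Sample(0.50, 20.0)], scale=1.0, offset=0.0)       # too late for this frame
frame = aggregate({"radar": radar, "camera": camera, "lidar": lidar}, t=0.04, tol=0.02)
print(frame)
```

Picking the nearest sample in time is the simplest alignment policy; a production system might instead interpolate between samples or rely on hardware timestamping, which is part of why this work is often pushed into dedicated logic.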

In this article, Wayne Lyons, Director, Automotive, at AMD, discusses how data for ADAS is aggregated through processing and distribution, and the role that silicon architecture and adaptive computing play in achieving this.

Multi-sensor ADAS

ADAS relies extensively on sensors such as radar, lidar, and cameras for situational awareness. While 77 GHz radar provides object detection and motion tracking, lidar is used for high-resolution mapping and perception, and hence generates a very large number of data points per second. Video cameras, for their part, are mounted at various points around the vehicle and continuously generate multiple channels of high-resolution image data to provide forward, rear, and surround views. Depending on the features the system is intended to provide, a wide variety of additional sensors is often used, including temperature, moisture, and speed sensors, GPS receivers, and accelerometers.

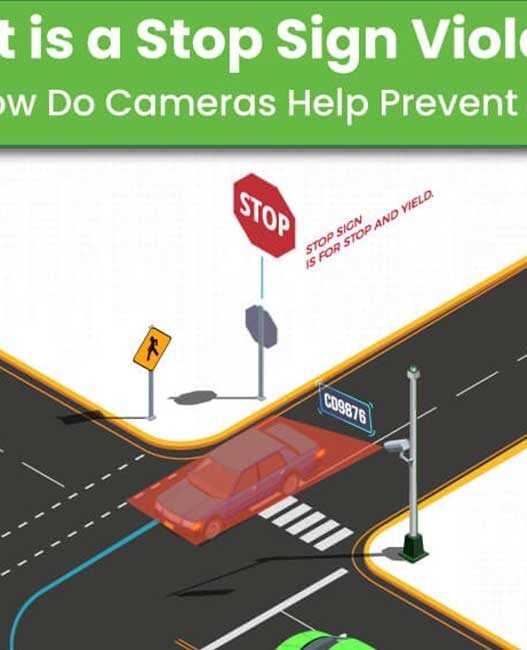

Taking the camera signals as an example, image processing is applied at the pixel level to enhance image quality and extract object information. Features like surround view depend on further video processing with graphic overlays. Collision avoidance systems, on the other hand, process sensor data to characterise the environment around the vehicle, for example by identifying or tracking lane markings, signs, other vehicles, or pedestrians. Systems such as lane-keeping assist and autonomous braking or steering need to detect threats and may then generate a warning or take control of the vehicle to help avoid an accident.
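The threat-detection step can be illustrated with time-to-collision (TTC), a common constant-velocity metric for forward collision threats. The sketch below is simplified and hypothetical: it assumes the perception stage has already produced a tracked object with a range and a closing speed, and the thresholds `WARN_TTC_S` and `BRAKE_TTC_S` are placeholder values, not figures from any production FCW or AEB system.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    NONE = "none"
    WARN = "warn"    # e.g. issue a forward collision warning
    BRAKE = "brake"  # e.g. request emergency braking


@dataclass
class TrackedObject:
    range_m: float            # distance to the object ahead, metres
    closing_speed_mps: float  # rate at which the gap shrinks, m/s (> 0 means approaching)


# Hypothetical thresholds for illustration only; real values would come from
# the system's safety analysis and the applicable regulations.
WARN_TTC_S = 2.5
BRAKE_TTC_S = 1.2


def time_to_collision(obj: TrackedObject) -> float:
    """Constant-velocity TTC: range divided by closing speed; infinite if not closing."""
    if obj.closing_speed_mps <= 0.0:
        return float("inf")
    return obj.range_m / obj.closing_speed_mps


def assess(obj: TrackedObject) -> Action:
    """Map TTC to an escalating response: nothing, a warning, or braking."""
    ttc = time_to_collision(obj)
    if ttc < BRAKE_TTC_S:
        return Action.BRAKE
    if ttc < WARN_TTC_S:
        return Action.WARN
    return Action.NONE


for obj in (TrackedObject(40.0, 10.0), TrackedObject(20.0, 10.0),
            TrackedObject(10.0, 10.0), TrackedObject(30.0, -2.0)):
    print(obj, assess(obj).value)
```

Real systems typically refine this with acceleration terms, driver-reaction models and object classification, but the escalation from warning to intervention follows the same pattern.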

When implementing ADAS, a typical approach is to process sensor data in customized parallel hardware, hosted in an FPGA or ASIC, while characterising the environment and making the appropriate decisions are performed in software. The software tasks may be partitioned between a DSP, which handles the object-level processing involved in characterising the environment, and a microprocessor, which handles decision-level processing and vehicle communication.

AMD automotive-grade (XA) Zynq UltraScale+ MPSoCs integrate a processing system, based on a 64-bit quad-core ARM Cortex-A53 and a dual-core ARM Cortex-R5, with AMD UltraScale™ architecture programmable logic in a single device. With an MPSoC like this, it is possible, for example, to integrate a complete ADAS imaging flow, from sensing through to environmental characterisation, in a single device. By leveraging the various processing engines available and the chip’s high-bandwidth internal interfaces, designers can partition the processing workloads optimally between hardware and software. DSP workloads can be handled either in hardware, using DSP slices, or in software, using the ARM Cortex-R5 processor.

This flexibility lets developers optimise the design to help eliminate data-flow bottlenecks, maximise performance, and make efficient use of device resources. MPSoC integration also enables savings in bill-of-materials (BOM) cost and power consumption. Saving power will become increasingly valuable as more ADAS features are mandated, more sophisticated infotainment is expected, and electric vehicles depend on efficient use of battery energy to maximise driving range. Alongside power efficiency, the chief concern for ADAS engineers is minimising latency. The need for low latency to deliver high levels of both functional safety and driver experience, together with the requirement for low power consumption, makes ADAS applications inextricably bound to the capability of the silicon hardware.

Single-chip strategy

One example of a system created using this single-chip approach is Aisin’s next-generation Automated Park Assist (APA) system, which combines signals from four surround-view cameras and 12 ultrasonic sensors. By also using high-accuracy machine learning models, the APA system can understand and react to the dynamic environment around the vehicle. It can support autonomous control of the steering, brakes, transmission, and speed to deliver a smooth hands-free parking experience.

It is worth noting that the AMD suite of functional safety developer tools helps development teams target ISO 26262 ASIL-B certification. In addition, the AMD Zynq UltraScale+ is fully certified for use in functional safety applications in accordance with ISO 26262, IEC 61508, and ISO 13849, up to ASIL B/SC2. As a result, AMD can offer automotive Tier 1 suppliers and OEMs a portfolio of automotive-grade FPGAs and adaptive SoCs, built on the same 16nm silicon, that deliver the high reliability and low latency ADAS requires.

Conceptually, ADAS combines information from various sources to improve perception and decision-making. Using multiple sources of data lets the system derive richer information that can enhance the safety and performance of driver assistance features. This provides the foundation for the system’s ability to detect and track objects, identify potential hazards, and make informed decisions for features such as adaptive cruise control, lane-keeping assistance, and automatic emergency braking. Techniques like ‘early fusion’, in which raw or low-level data from different sensors is combined before objects are detected, are also gaining interest. The next-generation AMD 7nm Versal AI Edge family combines the data aggregation, pre-processing and distribution (DAPD) capabilities of the 16nm silicon with AI acceleration to fuse sensor data and supplement the synchronized data stream with object lists and other information.
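One simple way to see how combining sources yields richer information is inverse-variance weighting of independent measurements of the same quantity. This is a textbook late-fusion technique chosen purely for illustration; it is not the early-fusion approach mentioned above, nor a description of how AMD devices fuse data. It assumes each sensor reports an unbiased estimate with a known, independent error variance, and the radar and camera values are hypothetical.

```python
from typing import Sequence, Tuple


def fuse_inverse_variance(estimates: Sequence[Tuple[float, float]]) -> Tuple[float, float]:
    """Fuse independent (value, variance) estimates of the same quantity.

    Each estimate is weighted by 1/variance, so more precise sensors count more.
    Returns (fused_value, fused_variance).
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    weights = []
    for _, var in estimates:
        if var <= 0:
            raise ValueError("variances must be positive")
        weights.append(1.0 / var)
    total = sum(weights)
    fused = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return fused, 1.0 / total


# Hypothetical range estimates for the same object: (metres, variance in m^2).
radar = (25.0, 0.25)
camera = (24.0, 1.0)
value, variance = fuse_inverse_variance([radar, camera])
print(f"fused range = {value:.2f} m, variance = {variance:.3f} m^2")
```

The fused variance is smaller than either input variance, which is the quantitative sense in which two imperfect sensors together give a better estimate than either alone.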

Efficient aggregation and processing of multiple streams of sensor data plays a critical role in enabling more reliable and sophisticated autonomous driving capabilities. By partitioning data-processing workloads optimally between hardware and software, and by using configurable hardware accelerators implemented in programmable logic, such as DSP slices, designers can maximise system performance while also reducing BOM cost and power consumption.