Artificial intelligence (AI) accelerators are gaining traction in high-performance electronics design, driven by the need for efficiency and hardware-level control in AI workloads.

Traditional graphics processing units (GPUs) often struggle to meet the latency and power efficiency demands of Edge deployments and real-time industrial applications. UK-based startups serve as innovation hubs in this environment, with engineers now looking to domain-specific silicon and tightly integrated software stacks to meet those requirements.

AI accelerators and modern chip design

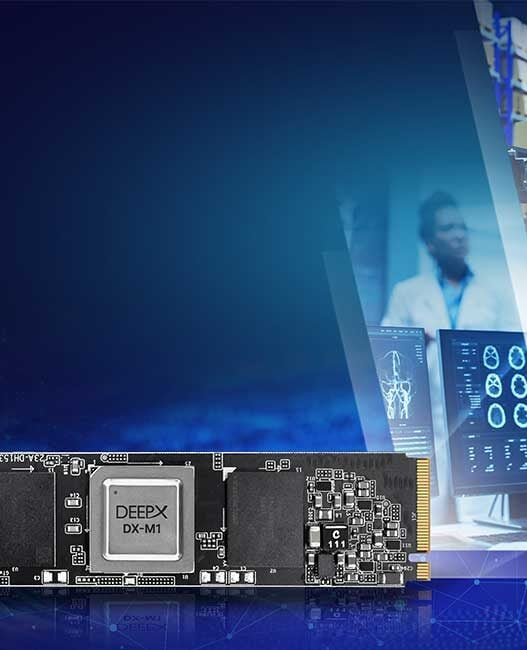

Deeptech ventures often emphasise efficient memory hierarchies and custom dataflows tailored for transformer models or multi-sensor inputs. For electronics engineering teams, these accelerators introduce new design options that move away from generic platforms and toward specialised, tightly scoped performance advantages.

Central processing units (CPUs) and general-purpose GPUs often fall short in latency-sensitive applications, where real-time decision-making and small form factors are critical. Their architectures prioritise flexibility and throughput, not determinism or efficiency at the Edge. In contrast, AI accelerators are purpose-built for inference and specialised compute tasks. In some cases, they can use around a tenth of the power and occupy between a tenth and a hundredth of the space of leading general-purpose solutions.

Properties such as predictable memory access patterns and low-latency execution paths now matter more than theoretical peak performance. AI accelerators often employ optimised memory hierarchies and minimise data movement, a major source of power drain in conventional chips. These accelerators open new doors to efficiency and speed without the traditional trade-offs.
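To make the data-movement point concrete, the sketch below estimates the energy of one neural network layer under two memory placements. The per-operation energy figures are illustrative placeholders chosen only to show the shape of the calculation, not measurements of any real chip, and the function and parameter names are hypothetical.

```python
# Illustrative energy model: compute energy vs. data-movement energy.
# All per-operation energies are ASSUMED placeholder values (picojoules),
# not figures for any specific device.
PJ_PER_MAC = 1.0              # assumed cost of one multiply-accumulate
PJ_PER_BYTE_ON_CHIP = 2.0     # assumed cost of moving one byte from on-chip memory
PJ_PER_BYTE_OFF_CHIP = 100.0  # assumed cost of moving one byte from external memory


def layer_energy_pj(macs: int, bytes_moved: int, off_chip_fraction: float) -> float:
    """Estimate layer energy given how much of the traffic goes off-chip."""
    if not 0.0 <= off_chip_fraction <= 1.0:
        raise ValueError("off_chip_fraction must be between 0 and 1")
    off_chip_bytes = bytes_moved * off_chip_fraction
    on_chip_bytes = bytes_moved - off_chip_bytes
    compute = macs * PJ_PER_MAC
    movement = (on_chip_bytes * PJ_PER_BYTE_ON_CHIP
                + off_chip_bytes * PJ_PER_BYTE_OFF_CHIP)
    return compute + movement


# Same workload, two placements: all traffic off-chip vs. mostly on-chip.
macs, traffic = 1_000_000, 500_000
mostly_off_chip = layer_energy_pj(macs, traffic, off_chip_fraction=1.0)
mostly_on_chip = layer_energy_pj(macs, traffic, off_chip_fraction=0.1)
print(f"off-chip heavy: {mostly_off_chip / 1e6:.2f} uJ")
print(f"on-chip heavy:  {mostly_on_chip / 1e6:.2f} uJ")
```

Under these assumed constants the arithmetic cost is identical in both cases; the whole difference comes from where the bytes are fetched from, which is the effect optimised memory hierarchies aim to exploit.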

Startups shaping the AI accelerator environment

The AI accelerator environment evolves as startups challenge established compute models with purpose-built solutions. These companies focus on determinism and workload-specific performance, creating new alternatives to general-purpose platforms.

Graphcore

Graphcore challenges GPU-centric compute models. Its Intelligence Processing Unit (IPU) architecture focuses on fine-grained parallelism and large on-chip memory, which reduces data movement and improves efficiency for complex machine learning workloads. Beyond hardware, its influence extends through its software and ecosystem contributions. The company developed the Poplar software stack to support tight hardware-software co-design, which enables developers to map models efficiently across thousands of parallel compute tiles.

Fractile

Fractile rethinks the relationship between compute and memory. Its in-memory compute architecture places processing directly alongside storage, which sharply reduces data movement, a major source of latency and power consumption in conventional accelerators. The company has drawn industry attention after emerging with backing from the NATO Innovation Fund and Oxford Science Enterprises. Fractile’s performance targets have helped push industry discussion toward new accelerator paradigms optimised specifically for inference rather than general-purpose compute.

Technical trends driving new accelerator designs

Domain-specific architectures map silicon directly to known computation patterns, which reduces instruction overhead and limits unnecessary data movement. As a result, latency drops and efficiency improves. In medical research, deep learning models deployed on specialised hardware have demonstrated the ability to identify Alzheimer’s disease with an average classification accuracy of 99.95%, which highlights the impact of purpose-built acceleration.

At the architectural level, RISC-V adoption continues to grow due to its openness and flexibility. Custom instruction set architecture extensions allow engineers to accelerate matrix operations and memory access patterns common in AI workloads. Optimised toolchains ensure models map cleanly to custom datapaths, enabling predictable performance and faster paths from prototype to deployment.
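As a rough illustration of what a custom instruction can buy, the sketch below models in Python a hypothetical packed dot-product instruction, here called `dot4_i8`. It multiplies four signed 8-bit lanes packed into two 32-bit registers and adds the sum to an accumulator. The instruction name and its semantics are assumptions made for illustration, not a ratified RISC-V extension.

```python
import struct


def pack_i8x4(values: list[int]) -> int:
    """Pack four signed 8-bit integers into one 32-bit register value."""
    if len(values) != 4:
        raise ValueError("expected exactly four lanes")
    return struct.unpack("<I", struct.pack("<4b", *values))[0]


def dot4_i8(rs1: int, rs2: int, acc: int) -> int:
    """Model of a HYPOTHETICAL custom instruction: acc += sum(a[i] * b[i])."""
    a = struct.unpack("<4b", struct.pack("<I", rs1 & 0xFFFFFFFF))
    b = struct.unpack("<4b", struct.pack("<I", rs2 & 0xFFFFFFFF))
    return acc + sum(x * y for x, y in zip(a, b))


def dot_product(a: list[int], b: list[int]) -> tuple[int, int]:
    """Dot product of int8 vectors; returns (result, dot4 instructions issued)."""
    if len(a) != len(b) or len(a) % 4:
        raise ValueError("vectors must match and be a multiple of four long")
    acc, issued = 0, 0
    for i in range(0, len(a), 4):
        acc = dot4_i8(pack_i8x4(a[i:i + 4]), pack_i8x4(b[i:i + 4]), acc)
        issued += 1
    return acc, issued


weights = [1, -2, 3, -4, 5, -6, 7, -8]
inputs = [1, 1, 1, 1, 2, 2, 2, 2]
result, issued = dot_product(weights, inputs)
print(result, issued)
```

In this model an eight-element dot product issues two custom operations, where a scalar loop on a base instruction set would issue eight multiplies plus the accumulating adds. Real gains depend on the datapath, the memory system and compiler support.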

Accelerator use cases relevant to electronics firms

Edge inference continues to expand across industrial sensing and machine vision applications where response times and power efficiency matter. By running machine learning tasks directly on devices, Edge AI lets accelerators handle inference locally rather than relying on Cloud processing. This approach reduces latency and supports consistent performance in environments with limited connectivity.

For many AI-driven systems, local inference also improves reliability by keeping critical decisions close to the data source. Robotics workloads place greater demands on determinism and real-time execution. Dedicated accelerators help meet these requirements by delivering predictable performance under strict power and thermal limits.
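One way to make determinism measurable is to compare worst-case latency with typical latency against a deadline. The sketch below is a minimal example of that comparison. The deadline, the sample values and the function name are all hypothetical, and a real evaluation would use timings captured on the target hardware.

```python
import statistics


def latency_report(samples_ms: list[float], deadline_ms: float) -> dict[str, float]:
    """Summarise inference latencies; jitter is worst case minus median."""
    if not samples_ms:
        raise ValueError("need at least one latency sample")
    median = statistics.median(samples_ms)
    worst = max(samples_ms)
    misses = sum(1 for s in samples_ms if s > deadline_ms)
    return {
        "median_ms": median,
        "worst_ms": worst,
        "jitter_ms": worst - median,
        "deadline_miss_rate": misses / len(samples_ms),
    }


# Made-up samples: similar medians, very different tails.
general_purpose = [4.0, 4.1, 3.9, 4.0, 9.5, 4.2, 4.0, 12.0]
dedicated = [4.5, 4.5, 4.6, 4.5, 4.4, 4.5, 4.6, 4.5]
for name, samples in (("general-purpose", general_purpose), ("dedicated", dedicated)):
    print(name, latency_report(samples, deadline_ms=5.0))
```

The design choice here is to report the tail rather than the average: for a robot control loop, a platform with a slightly slower median but no deadline misses can be the better fit.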

The strategic shift toward specialised AI compute

UK startups drive meaningful innovation in AI acceleration by delivering silicon tailored for specific workloads. These advances create growing opportunities for engineers to adopt differentiated compute solutions that align with power and system-level constraints. As a result, accelerator selection is becoming a core architectural decision rather than a simple add-on to existing designs.

About the author:

Jack Shaw is the Senior Editor of Modded, with more than seven years of experience covering technology, manufacturing, supply chain, and industry trends. Jack has reported on innovations in automotive systems, manufacturing processes, and tech‑driven workplace solutions, sharpening complex technical topics into clear, actionable insight. His writing draws on that cross‑industry lens to explore how electronic components and emerging manufacturing techniques translate into real‑world applications and business value.