AI is reshaping industries, economies, and the way we interact with technology. But behind the scenes, the infrastructure powering this revolution is under strain. Data centres are hitting power consumption ceilings, while bandwidth demands are growing exponentially. Traditional silicon photonics are reaching their limits and can no longer efficiently solve the power and connectivity challenges facing AI data centres.

I spoke to Brad Booth, CEO of NLM Photonics, about power-hungry AI data centres and how NLM are working towards a sustainable solution.

Booth explained: “AI workloads are moving so much data that connectivity has become the bottleneck, whether that be connectivity between the GPU and the memory, or the GPU and the network.”

In the early days of data networking, engineers increased power to overcome transmission limits. The same trend has repeated itself in photonics.

“The traditional photonic platforms are reaching their limits, and we’re solving the problem with power. The crux of the problem is that AI data centres cannot afford to burn that extra power,” said Booth.

The power crisis in AI infrastructure

AI workloads are power-hungry. AI training and inference tasks require high-performance computing clusters that combine CPUs with dense configurations of GPUs and TPUs running continuously. Global data centre electricity use is projected to hit 536TWh in 2025 and could double by 2030, an increase roughly equivalent to the annual electricity use of Germany. Cooling these massive facilities adds to the problem: cooling systems can consume up to 40% of a facility's total power.
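
To put the projection in perspective, doubling in five years implies steady growth of roughly 15% a year. The short sketch below works this out, assuming an exact doubling from the 536TWh figure and annual compounding between 2025 and 2030; both are simplifications, not part of the projection itself.

```python
# Implied compound annual growth rate (CAGR) for a projection that doubles
# between two years. Assumptions: exact doubling and annual compounding.

def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Constant annual growth rate that turns start_value into end_value."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


start_twh = 536.0         # projected 2025 global data centre use (from the article)
end_twh = 2 * start_twh   # "could double by 2030" (assumed exact doubling)
rate = implied_cagr(start_twh, end_twh, years=2030 - 2025)

print(f"2030 estimate: {end_twh:.0f} TWh")
print(f"Implied annual growth: {rate:.1%}")
```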

“Data centres are struggling to add the compute they need within a power envelope that won’t destabilise the grid,” Booth said. “In AI-focused facilities, networking hardware accounts for 20-40% of total power consumption.” The more power directed towards photonics, the less remains for compute. “That then requires either more data centres or bigger data centres to be built,” he added. “As an industry, we have to do better.”
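
The trade-off Booth describes can be sketched with simple arithmetic. The example below is illustrative only: it assumes a hypothetical 100 MW facility envelope, ignores cooling, and treats every megawatt not spent on networking as available for compute. None of these numbers come from NLM.

```python
# Illustrative power-budget split for a facility with a fixed power envelope.
# Assumptions (hypothetical, not NLM or industry data): a 100 MW envelope,
# cooling ignored, and every megawatt not spent on networking goes to compute.

def compute_budget_mw(envelope_mw: float, networking_share: float) -> float:
    """Power left for compute after networking takes its share of the envelope."""
    if not 0.0 <= networking_share < 1.0:
        raise ValueError("networking_share must be in [0, 1)")
    return envelope_mw * (1.0 - networking_share)


def envelope_needed_mw(compute_mw: float, networking_share: float) -> float:
    """Total envelope required to deliver a fixed compute budget."""
    if not 0.0 <= networking_share < 1.0:
        raise ValueError("networking_share must be in [0, 1)")
    return compute_mw / (1.0 - networking_share)


ENVELOPE_MW = 100.0  # hypothetical facility envelope

for share in (0.20, 0.30, 0.40):  # the 20-40% range Booth cites
    compute = compute_budget_mw(ENVELOPE_MW, share)
    print(f"networking {share:.0%}: {compute:.0f} MW left for compute")

# Envelope needed to keep 80 MW of compute if networking rises to 40%
print(f"{envelope_needed_mw(80.0, 0.40):.0f} MW")
```

With these assumed numbers, holding compute at 80 MW while networking grows from 20% to 40% of the envelope pushes the required envelope to about 133 MW, which is the "more or bigger data centres" effect in miniature.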

Why silicon photonics are falling short

Silicon photonics have served well for years, but they now struggle to keep up with AI-scale data movement. Thermal instability, increasing drive voltages, and fabrication costs are all limiting factors. The traditional approach of using more power to push more data is no longer viable, environmentally or economically.

As the limits of silicon become more apparent, researchers are exploring new materials and hybrid designs to keep pace with AI’s demand for speed and efficiency.

A hybrid solution

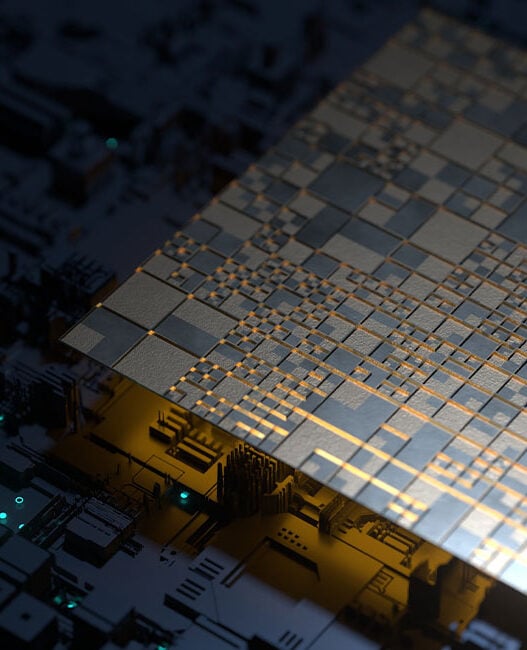

One potential solution to lessening the power burden in AI data centres is rethinking the materials used for photonic connectivity. NLM’s research and development is exploring silicon-organic hybrid (SOH) technology, which blends conventional silicon with organic electro-optic (OEO) materials to achieve greater data transmission efficiency at lower power levels.

OEO materials offer advantages over traditional inorganic counterparts, with stronger electro-optic responsivity and the ability to operate with lower drive voltages. They can also be integrated into existing silicon manufacturing processes, meaning the shift towards hybrid photonics does not necessarily require new fabrication infrastructure.

Explaining how NLM’s technology helps, Booth said: “NLM’s technology provides a path for traditional silicon photonics, and any photonics platform, to scale beyond its limitations while reducing power. More bandwidth, less power; it’s our raison d’être.”

Tests of NLM’s hybrid photonic integrated circuits (PICs) have demonstrated data transmission speeds exceeding 200G per channel, with record modulation efficiencies and low voltage requirements, suggesting that such designs could scale to 400G or even 800G per wavelength.
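
Per-lane speed matters because it sets how many parallel lanes, and therefore modulators, a given link needs. The sketch below counts lanes for a hypothetical 1.6Tbps aggregate link at the per-wavelength rates mentioned above, using nominal rates and ignoring encoding and error-correction overhead.

```python
# How many parallel lanes (wavelengths or fibres) a fixed aggregate rate needs
# at each per-lane rate. Assumptions: a hypothetical 1.6Tbps aggregate and
# nominal rates only (encoding and error-correction overhead ignored).
import math


def lanes_needed(aggregate_gbps: float, per_lane_gbps: float) -> int:
    """Smallest whole number of lanes whose combined rate covers the aggregate."""
    if per_lane_gbps <= 0:
        raise ValueError("per_lane_gbps must be positive")
    return math.ceil(aggregate_gbps / per_lane_gbps)


AGGREGATE_GBPS = 1600  # hypothetical 1.6T link

for per_lane in (200, 400, 800):  # per-wavelength rates discussed above
    print(f"{per_lane}G per lane -> {lanes_needed(AGGREGATE_GBPS, per_lane)} lanes")
```

Halving the lane count for the same aggregate rate is one reason higher per-wavelength speeds are attractive, although real modules also carry coding overhead that this sketch leaves out.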

Booth said: “Testing performed by Keysight Technologies at our lab verified 224Gbps data transmission with exceptionally low voltage driver requirements on the 1.6T PIC. The best single channel has a world-record SOH modulation efficiency of 0.31V·mm, which is 10 to 15 times better than traditional silicon.”
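
Modulation efficiency is usually quoted as VπL: the voltage needed to shift the optical phase by π, multiplied by the length of the phase shifter, so a smaller number is better. The rough sketch below shows what the quoted figure implies for drive voltage. It assumes hypothetical 1 mm and 2 mm phase shifters and takes the silicon range directly from the "10 to 15 times" comparison; it is not NLM's design data.

```python
# Convert modulation efficiency (V_pi * L, in V·mm) into the half-wave
# voltage V_pi of a phase shifter of a given length: V_pi = V_pi*L / L.
# Assumptions: the lengths below are hypothetical, and the silicon range is
# simply 0.31 V·mm scaled by the "10 to 15 times" figure quoted above.

def half_wave_voltage(v_pi_l_v_mm: float, length_mm: float) -> float:
    """Half-wave voltage in volts for a phase shifter of length_mm millimetres."""
    if length_mm <= 0:
        raise ValueError("length_mm must be positive")
    return v_pi_l_v_mm / length_mm


SOH_VPIL = 0.31  # V·mm, best single SOH channel reported by NLM
SILICON_VPIL_RANGE = (10 * SOH_VPIL, 15 * SOH_VPIL)  # V·mm, implied by 10-15x

for length_mm in (1.0, 2.0):  # hypothetical phase-shifter lengths
    soh = half_wave_voltage(SOH_VPIL, length_mm)
    si_low = half_wave_voltage(SILICON_VPIL_RANGE[0], length_mm)
    si_high = half_wave_voltage(SILICON_VPIL_RANGE[1], length_mm)
    print(f"L = {length_mm:.0f} mm: SOH V_pi = {soh:.3f} V, "
          f"silicon V_pi = {si_low:.2f}-{si_high:.2f} V")
```

A lower Vπ matters for power because the switching energy of a capacitive driver load scales roughly with the square of the drive voltage; the actual saving depends on device capacitance, driver design, and modulation format.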

These results indicate that hybrid approaches could help reduce one of the largest sources of power draw in AI-focused facilities – the photonic interfaces responsible for high-speed data movement between processors and memory.

Because the organic material is added after standard silicon processing, hybrid designs could scale to higher data rates without major redesigns or disruption to existing foundry infrastructure.

Cracking the thermal code

Organic materials once had a poor reputation for stability, degrading above 90°C. The breakthrough came when NLM’s team, building on decades of research at the University of Washington, developed thermoset polymers that “crosslink into a very dense lattice.” Booth said: “Our patented Selerion family of materials is proven stable above 120°C. Once the material is thermoset during our poling and crosslinking step, the electro-optic responsive chromophores are locked in place.”

This development addressed one of the biggest historical obstacles to organic materials, thermal reliability, and enabled their integration into commercial foundries.

Overcoming perception and proving reliability

Despite these advantages, Booth acknowledged that trust remains a challenge.

“People remember early polymers that weren’t reliable. Our job is to show, with data, that those days are over.”

NLM is working with independent third parties to verify its materials’ stability, bandwidth, and reliability. These partners are spread across the US, Europe, and Asia.

What’s next

For Booth, the next step in reducing power consumption in AI data centres is scaling up production.

“Our next step is working toward manufacturability at scale. We want to automate post-fab deposition, poling, and encapsulation so OEO materials can be added to existing foundry processes seamlessly,” he said.

The company also plans to migrate to Advanced Micro Foundry’s HP silicon photonics platform, a more advanced process, and to explore next-generation materials that promise even greater stability.

As AI infrastructure pushes against physical limits, technologies such as SOH photonics could change the way data moves inside and between data centres, and may prove essential to balancing performance with sustainability in next-generation facilities.