The importance of PowerVR GPUs

At this week’s I/O conference, Google announced Daydream - a platform for high-performance mobile VR built on top of Android N, with a set of optimisations called Android VR Mode.

Imagination has expressed its excitement about Daydream bringing high-performance VR to the Android ecosystem and has developed a few optimisations of its own for PowerVR Rogue GPUs, designed to provide a superior VR experience for Android users.

Right after the Oculus Rift Crescent Bay prototype was announced two years ago, Oculus Chief Scientist Michael Abrash presented five key ingredients for achieving presence in virtual reality environments. These five elements are tracking, latency, persistence, resolution and optics.

Presence is extremely important because it enables people to create an emotional connection to the worlds created by VR devices. Presence is defined as a person’s subjective sensation of being there in a scene depicted by a medium, usually virtual in nature. In a featured article on TechCrunch titled, ‘First rule of VR: don’t break the presence’, Sasa Marinkovic also acknowledged the importance of maintaining presence in VR applications, commenting: “Achieving a truly lifelike user experience with VR technology is now possible because of tremendous advancements in computer processing power, graphics, video and display technologies. However, the magic ingredient is not only achieving but also maintaining the reality of the virtual reality presence. We call this rule ‘Don’t break the presence’.”

Out of the five key ingredients presented above, there are two that are directly related to graphics processors - latency and resolution - and Imagination has been working on some solutions for developers targeting PowerVR GPUs.

These techniques are valid for both desktop and mobile VR but are particularly relevant for the latter since they ensure more efficient utilisation of the limited resources (memory bandwidth, thermal headroom, display resolution etc.) available in mobile and embedded devices.

Reducing motion-to-photon latency

In the case of VR, latency directly influences presence. If the rendered images are not closely synchronised to the user’s head movements, the feeling of presence is instantly lost. Therefore, it is extremely important to keep latency as low as possible.

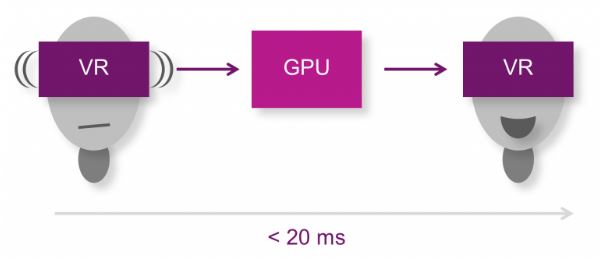

Latency is measured as the time between user input (e.g. head movement) and the corresponding image update. To create a full sense of immersion, this time interval needs to be lower than 20ms.
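To put the 20ms figure in context, the short sketch below compares it with the refresh period of a display. It assumes a deliberately simplified model in which latency is counted in whole refresh periods; the function names and the list of refresh rates are illustrative only and do not come from any SDK.

```python
# Simplified model (an assumption): latency is counted in whole display
# refresh periods. Real pipelines also include sensor, CPU and scan-out time.
PRESENCE_BUDGET_MS = 20.0  # target quoted in the text above


def frame_period_ms(refresh_hz: float) -> float:
    """Duration of one display refresh in milliseconds."""
    return 1000.0 / refresh_hz


def frames_within_budget(refresh_hz: float,
                         budget_ms: float = PRESENCE_BUDGET_MS) -> int:
    """Number of whole refresh periods that fit inside the latency budget."""
    return int(budget_ms // frame_period_ms(refresh_hz))


if __name__ == "__main__":
    for hz in (60, 90, 120):  # illustrative refresh rates
        print(f"{hz} Hz: {frame_period_ms(hz):.2f} ms per frame, "
              f"{frames_within_budget(hz)} whole frame(s) fit in "
              f"{PRESENCE_BUDGET_MS:.0f} ms")
```

Under this model, a 60Hz display leaves room for only one full refresh period inside the budget, which is why any extra frame of buffering in the pipeline matters.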

Thankfully, most GPUs feature a multi-threaded, multi-core architecture that is well suited to keeping latency low. However, that doesn’t mean the work on latency is done - there are additional algorithms that developers can implement to reduce it further.

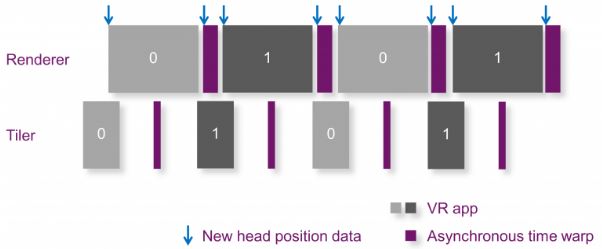

One example is asynchronous time warping, which is designed to significantly reduce the perceived motion-to-photon latency. There are some caveats though: although asynchronous time warping does reduce latency, it can also decrease the overall quality of the resulting distorted images. This is because warping just moves the already rendered image around in 3D space; it doesn’t really create a different view from the new position. In addition, any animations in the scene are effectively frozen in the warped image, which may pose problems.

The diagram below presents the asynchronous time warping sequence in greater detail - once the current scene is rendered, we look at the head position data and then warp the image based on the tracking information.
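The warp step described above can be sketched as a per-pixel lookup. This is a minimal, rotation-only illustration and not the implementation used by any particular SDK. It assumes head poses are given as 3x3 camera-to-world rotation matrices, that the camera looks down the negative Z axis, and that image coordinates are in tangent-angle space (x/-z, y/-z). All function names are hypothetical.

```python
import math


def rot_y(angle):
    """Rotation about the Y (up) axis; a positive angle turns the head left."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, 0.0, s],
            [0.0, 1.0, 0.0],
            [-s, 0.0, c]]


def transpose(m):
    """Transpose of a 3x3 matrix (the inverse, for a pure rotation)."""
    return [list(row) for row in zip(*m)]


def mat_vec(m, v):
    """Multiply a 3x3 matrix by a 3-component vector."""
    return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]


def timewarp_lookup(u, v, render_pose, latest_pose):
    """For a point (u, v) on the display, return the point in the already
    rendered frame that should be sampled, or None if it is not visible.

    render_pose: head rotation used when the frame was rendered.
    latest_pose: head rotation read just before the frame is displayed.
    """
    ray_display = [u, v, -1.0]                       # ray in the latest head frame
    ray_world = mat_vec(latest_pose, ray_display)    # same ray in world space
    ray_render = mat_vec(transpose(render_pose), ray_world)  # in the render frame
    depth = -ray_render[2]
    if depth <= 0.0:
        return None  # the ray points behind the rendered view
    return ray_render[0] / depth, ray_render[1] / depth


if __name__ == "__main__":
    rendered_at = rot_y(0.0)                 # pose when rendering started
    shown_at = rot_y(math.radians(2.0))      # head has turned 2 degrees since
    print(timewarp_lookup(0.0, 0.0, rendered_at, shown_at))
```

Because only the head rotation enters the lookup, the sketch also makes the caveat above concrete: the rendered image is re-oriented, but nothing in the scene itself is re-rendered or advanced in time.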

A more advanced technique for reducing latency is frontbuffer strip rendering, as explained by Christian Potzsch from Imagination’s PowerVR Graphics team. “Oculus recommends that all headsets should implement correct stereoscopic rendering at a minimum resolution of 1,000 x 1,000 pixels per eye (this is in addition to the final output render to the smartphone display). In the case of mobile VR, driving a 2K display at 60fps (or higher) puts a lot of pressure on the GPU and can have a significant impact on the battery life and power consumption.

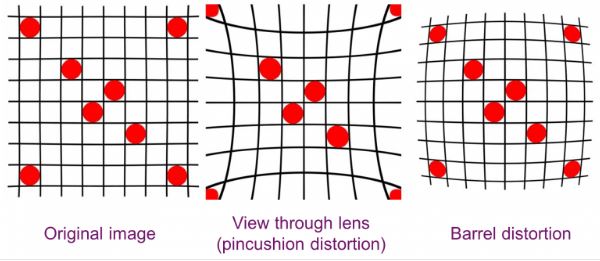

There are several ways to address this challenge. For example, the optimisation detailed below takes into account the way in which light propagates through the lens system. The lenses make the field of view much wider than the physical screen in the VR headset would otherwise provide, and they also allow users to look comfortably at a screen that is very close to their eyes. However, the lens introduces a pincushion distortion that needs to be corrected by the GPU using a corresponding barrel distortion.
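A common way to express such a correction is a radial polynomial applied per output pixel. The sketch below assumes that model and uses made-up coefficients `K1` and `K2`; real values depend on the lens and would come from the headset's optical profile. Coordinates are assumed to be normalised and centred on the lens axis.

```python
# Hypothetical lens coefficients, chosen only for illustration.
K1 = 0.22
K2 = 0.24


def distortion_scale(r_squared: float) -> float:
    """Radial scale factor 1 + K1*r^2 + K2*r^4 for a squared radius."""
    return 1.0 + K1 * r_squared + K2 * r_squared * r_squared


def barrel_lookup(x: float, y: float):
    """For an output point (x, y), return the point of the undistorted
    rendered image to sample. Sampling further out as the radius grows
    pulls the image content towards the centre, i.e. a barrel distortion
    that counteracts the pincushion effect of the lens."""
    scale = distortion_scale(x * x + y * y)
    return x * scale, y * scale


if __name__ == "__main__":
    for point in [(0.0, 0.0), (0.1, 0.0), (0.5, 0.5), (1.0, 0.0)]:
        print(point, "->", barrel_lookup(*point))
```

Points near the centre map almost onto themselves, while points near the edge are sampled from noticeably further out in the rendered image.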

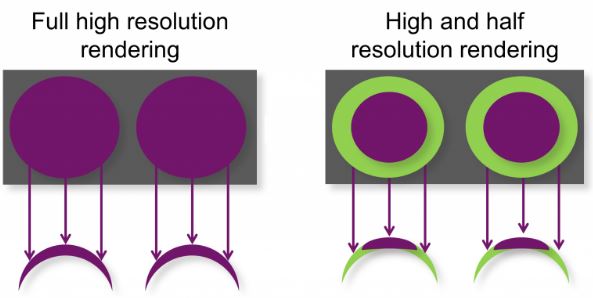

We can take advantage of the fact that when applying the barrel distortion below, the edges of the image are distorted heavily while the centre is barely affected. As so many texels are squished together in these heavily distorted regions, developers can get away with rendering fewer texels for those regions in the first place. If implemented correctly, the user won’t notice the difference between this approach and rendering at full resolution, even if their eyes are focused on the edges of the lens.

The result in terms of GPU usage is a significant reduction in fragment overhead without a perceived loss of quality for the user.
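The potential saving can be estimated from the same kind of radial model. The sketch below is an illustration under stated assumptions, not a measurement: it assumes a polynomial sampling radius s(r) = r(1 + K1 r^2 + K2 r^4) with hypothetical coefficients, and a render target sized so that one rendered texel maps to one display pixel at the centre of the lens.

```python
# Hypothetical lens coefficients, chosen only for illustration.
K1 = 0.22
K2 = 0.24


def texels_per_pixel(r: float) -> float:
    """Approximate number of rendered texels squeezed into one display pixel
    at normalised radius r, relative to the centre of the lens."""
    radial = 1.0 + 3.0 * K1 * r**2 + 5.0 * K2 * r**4   # ds/dr
    tangential = 1.0 + K1 * r**2 + K2 * r**4           # s(r) / r
    return radial * tangential


def useful_fragment_fraction(r: float) -> float:
    """Fraction of full-resolution fragments that still contributes one
    texel per display pixel at radius r."""
    return 1.0 / texels_per_pixel(r)


if __name__ == "__main__":
    for r in (0.0, 0.25, 0.5, 0.75, 1.0):
        print(f"r = {r:.2f}: {texels_per_pixel(r):.2f} texels per pixel, "
              f"useful fraction {useful_fragment_fraction(r):.2f}")
```

With these example coefficients the ratio is 1 at the centre and grows steadily towards the edge, which is where rendering at a lower resolution costs the least in visible detail.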