Calculating the accuracy of a battery fuel gauge

A battery fuel gauge obtains data from the battery to determine how much juice is left. Do not confuse gauging accuracy with measurement accuracy. A gauge’s ability to accurately report state of charge (SOC) and predict remaining battery capacity depends on measurements of voltage (‘V’), current (‘I’) and battery temperature (‘T’).

Measurement accuracy depends on the gauge hardware, while gauging accuracy depends on both the robustness of the gauge’s algorithm and the measurement accuracy.

Battery gauging generally uses one of three algorithms. The first uses a voltage look-up table, which is suitable for very light-load applications. The second counts the charge passed into or out of the battery (Coulomb counting, i.e., integrating ‘I’ over time). This approach is more reliable, but it cannot know the initial SOC when a battery is first inserted. The third combines the first two approaches. Gauging accuracy increases with algorithm complexity, so the voltage look-up method is the least accurate.
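To make the three approaches concrete, here is a much-simplified Python sketch. It is not how any particular TI gauge works. The OCV-to-SOC table values are made up for illustration (real tables are specific to the cell chemistry), the function names are hypothetical, and discharge current is assumed to be positive. The "hybrid" function seeds SOC from a rested-voltage look-up and then Coulomb counts from there.

```python
from bisect import bisect_left

# Hypothetical open-circuit-voltage (OCV) to SOC table; illustrative values only.
OCV_TABLE_V = [3.00, 3.45, 3.60, 3.70, 3.80, 3.95, 4.20]
SOC_TABLE_PCT = [0.0, 10.0, 30.0, 50.0, 70.0, 85.0, 100.0]


def soc_from_voltage(v: float) -> float:
    """Voltage look-up: linearly interpolate SOC (%) from a rested-cell voltage."""
    if v <= OCV_TABLE_V[0]:
        return SOC_TABLE_PCT[0]
    if v >= OCV_TABLE_V[-1]:
        return SOC_TABLE_PCT[-1]
    i = bisect_left(OCV_TABLE_V, v)  # first table index whose OCV is >= v
    v0, v1 = OCV_TABLE_V[i - 1], OCV_TABLE_V[i]
    s0, s1 = SOC_TABLE_PCT[i - 1], SOC_TABLE_PCT[i]
    return s0 + (s1 - s0) * (v - v0) / (v1 - v0)


def coulomb_count(soc_pct: float, current_ma: float, dt_s: float,
                  capacity_mah: float) -> float:
    """Coulomb counting: remove the charge passed in one interval from the SOC.

    Assumes positive current means discharge.
    """
    dq_mah = current_ma * dt_s / 3600.0  # mA * s -> mAh
    return soc_pct - 100.0 * dq_mah / capacity_mah


def hybrid_gauge(rest_voltage_v: float, current_samples_ma: list,
                 dt_s: float, capacity_mah: float) -> list:
    """Combined approach: initial SOC from voltage, then Coulomb count."""
    soc = soc_from_voltage(rest_voltage_v)  # unknown initial SOC solved by look-up
    history = [soc]
    for i_ma in current_samples_ma:
        soc = coulomb_count(soc, i_ma, dt_s, capacity_mah)
        history.append(soc)
    return history
```

With this made-up table, a rested reading of 3.70 V seeds the estimate at 50%, and four 15-minute intervals at 500 mA on a hypothetical 2000 mAh cell (500 mAh passed) bring it down to 25%.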

Because every algorithm takes measured values as its inputs, their accuracy is critical. Accurate ‘V’ measurement is needed to estimate the initial SOC of a newly inserted battery. Accurate ‘I’ measurement captures low sleep currents and short load spikes so that the passed charge is Coulomb counted correctly. Accurate ‘T’ measurement lets the gauge properly compensate for temperature-driven changes in the calculated battery resistance and capacity.
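One way to see why current accuracy matters: a Coulomb counter integrates a constant measurement offset exactly as it integrates real current, so the charge error grows linearly with time. A sleep current the gauge cannot resolve behaves the same way. The short sketch below does that arithmetic; the function name and the 2000 mAh capacity are hypothetical.

```python
def offset_soc_error_pct(offset_ma: float, hours: float, capacity_mah: float) -> float:
    """SOC error (percentage points) caused by a constant current error.

    The charge error is simply offset * time, because the Coulomb counter
    integrates the offset along with the real current.
    """
    charge_error_mah = offset_ma * hours
    return 100.0 * charge_error_mah / capacity_mah


# 1 mA of offset (or an unresolved 1 mA sleep current) for one day,
# on a hypothetical 2000 mAh cell.
print(offset_soc_error_pct(1.0, 24.0, 2000.0))
```

With these numbers the error is 24 mAh, or 1.2 percentage points of SOC after a single day, and it keeps growing until something (such as a voltage-based correction) resets it.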

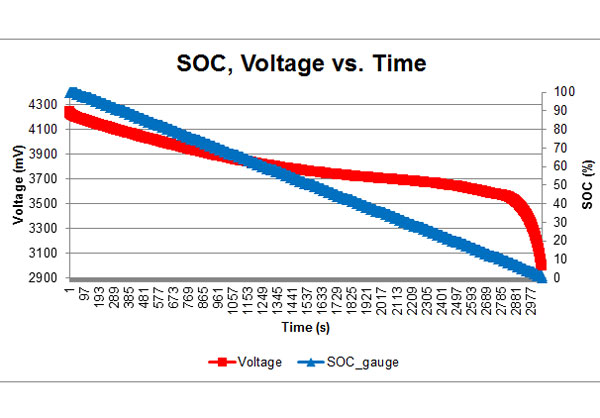

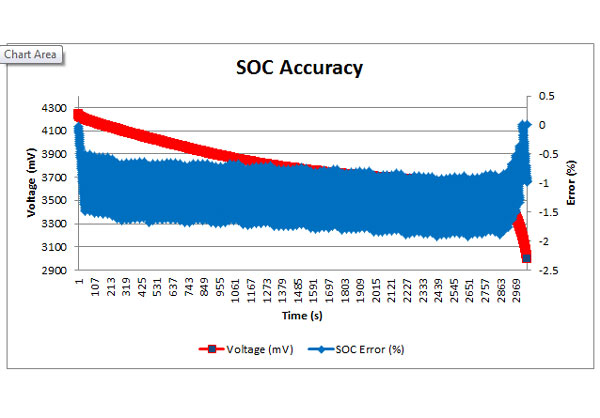

To determine gauging accuracy, start with a properly configured gauge and calibrate its ‘V’, ‘I’ and ‘T’ measurements. Log these measurements at short, regular intervals for later processing. For a quick evaluation, plot voltage and SOC and confirm that SOC converges smoothly to 0% at the terminate voltage, as shown in Figure 1. Although simple, this approach is subjective, and it makes the magnitude of the error hard to judge.
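If you want to automate the visual check, a minimal sketch is to read the reported SOC at the first logged sample that reaches the terminate voltage and to look for the largest sample-to-sample SOC jump. Both helper names are hypothetical, and what counts as "close enough to 0%" or "too large a jump" is your own judgment, so this shares the subjectivity of the plot-based check.

```python
from typing import Optional, Sequence


def soc_at_terminate(voltages_v: Sequence[float], socs_pct: Sequence[float],
                     terminate_v: float) -> Optional[float]:
    """Reported SOC at the first sample whose voltage reaches terminate_v."""
    for v, soc in zip(voltages_v, socs_pct):
        if v <= terminate_v:
            return soc
    return None  # the log never reached the terminate voltage


def largest_soc_jump(socs_pct: Sequence[float]) -> float:
    """Largest absolute SOC change between consecutive samples."""
    return max((abs(b - a) for a, b in zip(socs_pct, socs_pct[1:])), default=0.0)
```

Ideally the first value is close to 0% and the second stays small relative to your logging interval; a large jump near the end of discharge is the kind of non-smooth convergence the plot reveals.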

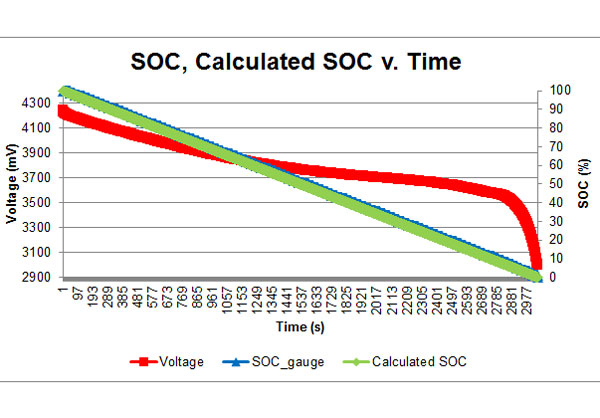

A more effective method computes gauge accuracy across the battery’s entire charge or discharge profile:

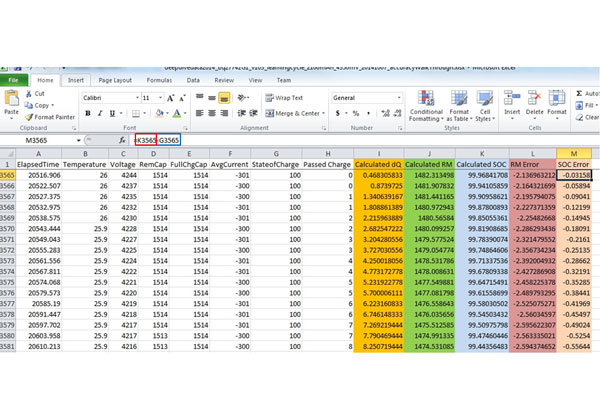

- Start with a gauge log in Excel of the ‘V’, ‘I’, ‘T’ and reported SOC.

- Calculate the passed charge (dQ) from full to empty as a rolling sum of the product of the current and its time interval.

- Calculate the battery’s true full-charge capacity (FCC), which is the final value of the dQ sum from the previous step.

- Calculate the battery’s remaining capacity (Calculated_RM) by subtracting the summed passed charge at each point from the FCC.

- Calculate the battery’s true SOC (Calculated_SOC) at each point by dividing the Calculated_RM by FCC and multiplying by 100.

- Calculate the gauge-reported SOC error at each point by subtracting the reported SOC from the calculated SOC.
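The steps above can be sketched in Python as an alternative to an Excel sheet. This is a minimal sketch under stated assumptions: the log is a list of hypothetical `Sample` records exported from your gauge log, it runs from full to empty, discharge current is positive, and the charge in each interval is approximated with the current at the end of that interval (a rectangle rule; a trapezoidal average of neighboring samples is a reasonable alternative).

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Sample:
    t_s: float           # timestamp in seconds
    current_ma: float    # current in mA, positive = discharging (assumed)
    reported_soc: float  # gauge-reported SOC in %


def gauge_soc_error(log: List[Sample]) -> Tuple[float, List[float], List[float]]:
    """Return (FCC in mAh, Calculated_SOC per sample, SOC error per sample)."""
    # Rolling sum of passed charge dQ in mAh (current times its time interval).
    dq_mah = [0.0]
    for prev, cur in zip(log, log[1:]):
        dt_h = (cur.t_s - prev.t_s) / 3600.0
        dq_mah.append(dq_mah[-1] + cur.current_ma * dt_h)

    # True full-charge capacity: the final value of the dQ sum.
    fcc_mah = dq_mah[-1]
    if fcc_mah <= 0:
        raise ValueError("log must cover a discharge from full to empty")

    calculated_soc, errors = [], []
    for sample, dq in zip(log, dq_mah):
        calculated_rm = fcc_mah - dq                  # Calculated_RM
        soc = 100.0 * calculated_rm / fcc_mah         # Calculated_SOC
        calculated_soc.append(soc)
        errors.append(soc - sample.reported_soc)      # calculated minus reported
    return fcc_mah, calculated_soc, errors
```

A single figure of merit, such as `max(abs(e) for e in errors)`, then gives the worst-case SOC error over the whole discharge instead of a visual impression.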

Determining the battery’s true capacity, then calculating the remaining capacity and SOC from that data for comparison against the gauge-reported SOC, is the most effective way to determine gauging accuracy.

Author: Onyx Ahiakwo is an applications engineer for TI Battery Management.